Power BI - exclude the duration of overlapping events within a specific period

I have a table in Power BI listing the problems that cause downtime on specific machines. The table is structured as follows:

| machine_id | start_timestamp | downtime (sec.) |
| --- | --- | --- |
| 1 | 10/10/2021 7:03:00 AM | 100 |
| 1 | 10/10/2021 7:04:00 AM | 30 |
| 1 | 10/10/2021 7:06:00 AM | 300 |
| 2 | 10/10/2021 7:08:00 AM | 20 |

- machine_id is the ID of the machine affected by the problem in that row
- start_timestamp is the date/time at which the problem started
- downtime (sec.) is the total time, in seconds, that the machine was down due to the problem in that row

What I want to calculate as a measure is, for each machine and for a given period of time, the total downtime without double-counting overlaps. That is, if a problem is already causing downtime at a given moment, a new problem that overlaps it should not be added to the total (or should be added only partially, for the part that does not overlap).

As an example, for machine_id = 1 between 10/10/2021 7:00:00 AM and 10/10/2021 7:10:00 AM, I would obtain a total downtime of 100 + 240 = 340 seconds: the 30-second problem falls entirely within the first one, and the third problem is counted only up to the end of the period (7:06:00 to 7:10:00, i.e. 240 seconds).
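To make the expected arithmetic concrete, here is a minimal Python sketch of one way to get that number: clip each event to the period, merge the overlapping intervals, and sum what is left. It is only an illustration of the requirement, not a Power BI measure; the function name `downtime_without_overlap` and the tuple layout are assumptions made for this example.

```python
from datetime import datetime, timedelta

def downtime_without_overlap(events, window_start, window_end):
    """Seconds inside [window_start, window_end) covered by at least one event.

    events is a list of (start_timestamp, downtime_seconds) tuples
    (a hypothetical layout chosen for this sketch).
    """
    # Clip every event to the window and drop events entirely outside it.
    clipped = []
    for start, seconds in events:
        end = start + timedelta(seconds=seconds)
        s, e = max(start, window_start), min(end, window_end)
        if s < e:
            clipped.append((s, e))
    clipped.sort()

    # Sweep through the sorted intervals, merging those that overlap.
    total = timedelta(0)
    current_start = current_end = None
    for s, e in clipped:
        if current_end is None or s > current_end:
            if current_end is not None:
                total += current_end - current_start
            current_start, current_end = s, e
        else:
            current_end = max(current_end, e)
    if current_end is not None:
        total += current_end - current_start
    return int(total.total_seconds())

# The three rows for machine_id = 1 from the table above.
machine_1 = [
    (datetime(2021, 10, 10, 7, 3), 100),
    (datetime(2021, 10, 10, 7, 4), 30),
    (datetime(2021, 10, 10, 7, 6), 300),
]
result = downtime_without_overlap(
    machine_1,
    datetime(2021, 10, 10, 7, 0),
    datetime(2021, 10, 10, 7, 10),
)
print(result)  # 340
```

The cost of this approach depends on the number of rows rather than on the number of seconds in the period.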

Can you please help me? I have tried many approaches without success. If anything needs clarifying, just let me know.

Thanks!

@GoingDigital123 OK, I adjusted Overlap a little and solved this. PBIX is attached below signature. Basically, added the following column to the table:

end_timestamp = [start_timestamp] + [downtime (sec.)]*1/24/60/60

Then this measure:

Overlap =

VAR __Start = DATEVALUE("10/10/2021") + 7/24

VAR __End = DATEVALUE("10/10/2021") + 7/24 + 10/24/60

VAR __Table = GENERATESERIES(__Start,__End,1/24/60/60)

VAR __Table1 = ALL('Table')

VAR __Table2 = GENERATE(__Table,__Table1)

VAR __Table3 = ADDCOLUMNS(__Table2,"Include",IF([Value]>=[start_timestamp] && [Value] <= [end_timestamp],1,0))

VAR __Table4 = GROUPBY(__Table3,[Value],"Second",MAXX(CURRENTGROUP(),[Include]))

RETURN

SUMX(__Table4,[Second])
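For readers who find the steps easier to follow outside DAX, the same per-second idea can be sketched in Python: build one tick per second of the period, flag a tick if any row covers it, and sum the flags. This is a rough analogue, not a translation. It assumes whole-second timestamps and treats each event as the half-open interval [start, end), which differs from the `<=` comparison in the measure; the name `overlap_seconds` is made up for this example.

```python
from datetime import datetime, timedelta

def overlap_seconds(events, window_start, window_end):
    """Count the seconds in [window_start, window_end) covered by any event.

    events is a list of (start_timestamp, downtime_seconds) tuples
    (hypothetical layout; every row of the table is passed in).
    """
    ticks = int((window_end - window_start).total_seconds())
    total = 0
    for i in range(ticks):                      # one tick per second of the period
        t = window_start + timedelta(seconds=i)
        include = max(                          # 1 if any row covers this second
            (1 if start <= t < start + timedelta(seconds=d) else 0
             for start, d in events),
            default=0,
        )
        total += include
    return total

# All four rows of the sample table, regardless of machine_id.
rows = [
    (datetime(2021, 10, 10, 7, 3), 100),
    (datetime(2021, 10, 10, 7, 4), 30),
    (datetime(2021, 10, 10, 7, 6), 300),
    (datetime(2021, 10, 10, 7, 8), 20),
]
total = overlap_seconds(
    rows,
    datetime(2021, 10, 10, 7, 0),
    datetime(2021, 10, 10, 7, 10),
)
print(total)  # 340
```

The work grows with (seconds in the period) x (rows in the table).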

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

Hi, @Greg_Deckler.

I was wondering if you could help me with this problem.

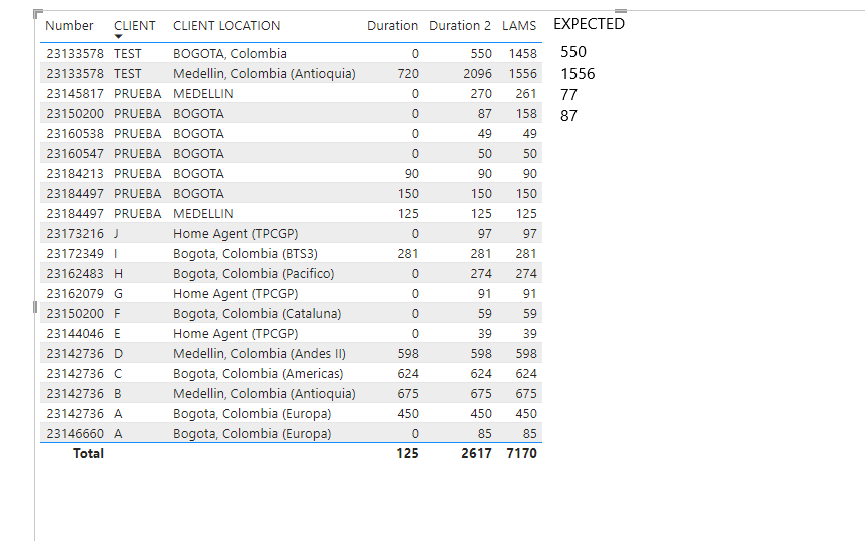

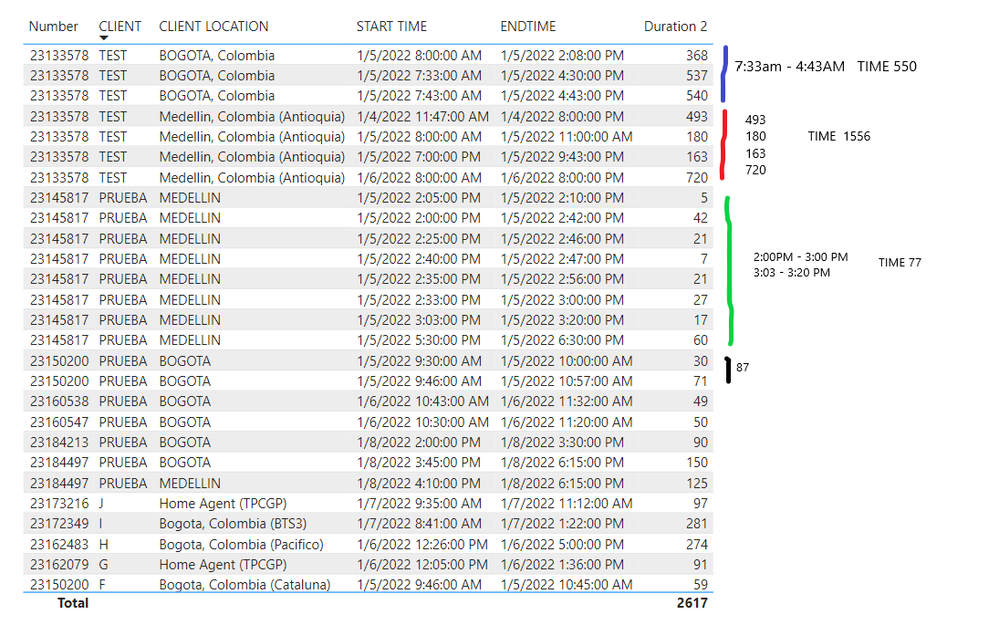

As preconditions, the data must be grouped or filtered by number, client, and client location.

I need the difference between the latest end date and the earliest start date of each continuous stretch of time, so that overlapping periods are not counted more than once. My problem is that the result also counts time that is not actually continuous; the images show the error, in case that makes it clearer.

Duration is what I took from your example; LAMS is a test of mine that isn't working either.

Thanks in advance

Thanks @Greg_Deckler ! It worked quite well!

The only problem I see arises when the real dataset has many rows. In reality, I have several machines and the period covers several days, and in that case the measure takes a long time to calculate. I am quite new to Power BI, but I was wondering whether Python scripts might help speed up the calculation?

@GoingDigital123 Yeah, there is no doubt that it is not a particularly fast measure on large datasets. I tried a bunch of different methods when I was constructing that measure and finally gave up and took the brute-force approach. One of the main issues in your case comes from the granularity: seconds versus minutes or hours. You would likely get an order-of-magnitude performance increase if you worked in minutes instead of seconds. So basically, in __Table (the GENERATESERIES step) drop the last /60 and then at the end multiply by 60 to get back to seconds. It might be slightly less accurate but would be significantly faster.
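The granularity trade-off can be illustrated with a small Python sketch that samples the period at a configurable step and multiplies the number of covered ticks back into seconds. It is an analogue of the idea rather than the DAX itself; `sampled_downtime` and its `step_seconds` parameter are names invented for this example, and events are treated as half-open intervals.

```python
from datetime import datetime, timedelta

def sampled_downtime(events, window_start, window_end, step_seconds):
    """Estimate covered seconds by sampling the window every step_seconds."""
    step = timedelta(seconds=step_seconds)
    covered_ticks = 0
    t = window_start
    while t < window_end:
        # A tick counts if at least one event is active at that instant.
        if any(start <= t < start + timedelta(seconds=d) for start, d in events):
            covered_ticks += 1
        t += step
    # Convert ticks back to seconds.
    return covered_ticks * step_seconds

machine_1 = [
    (datetime(2021, 10, 10, 7, 3), 100),
    (datetime(2021, 10, 10, 7, 4), 30),
    (datetime(2021, 10, 10, 7, 6), 300),
]
window = (datetime(2021, 10, 10, 7, 0), datetime(2021, 10, 10, 7, 10))

by_second = sampled_downtime(machine_1, *window, step_seconds=1)   # 600 ticks
by_minute = sampled_downtime(machine_1, *window, step_seconds=60)  # 10 ticks
print(by_second, by_minute)  # 340 360
```

On the sample data the per-minute grid needs 60 times fewer ticks but overshoots by 20 seconds, because the first problem ends partway through a minute.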

@GoingDigital123 These should help:

Overlap - Microsoft Power BI Community

See my article on Mean Time Between Failure (MTBF) which uses EARLIER: http://community.powerbi.com/t5/Community-Blog/Mean-Time-Between-Failure-MTBF-and-Power-BI/ba-p/3395....

The basic pattern is:

Column =

VAR __Current = [Value]

VAR __PreviousDate = MAXX(FILTER('Table','Table'[Date] < EARLIER('Table'[Date])),[Date])

VAR __Previous = MAXX(FILTER('Table',[Date]=__PreviousDate),[Value])

RETURN

__Current - __Previous
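As a rough illustration of what that pattern computes, here is a Python sketch: for each row, find the latest earlier date, look up the value on that date, and subtract it from the current value. The sample rows are invented for the example, and returning `None` for the earliest row is a choice made in this sketch, not a statement about what the DAX column returns.

```python
from datetime import date

def difference_from_previous(rows):
    """rows is a list of (date, value); returns {date: value - previous value}."""
    result = {}
    for current_date, current_value in rows:
        # Dates strictly earlier than the current row's date.
        earlier_dates = [d for d, _ in rows if d < current_date]
        if not earlier_dates:
            result[current_date] = None   # no previous row (sketch-specific choice)
            continue
        previous_date = max(earlier_dates)
        previous_value = max(v for d, v in rows if d == previous_date)
        result[current_date] = current_value - previous_value
    return result

# Hypothetical sample data, not taken from the thread.
sample = [
    (date(2021, 10, 1), 10),
    (date(2021, 10, 2), 14),
    (date(2021, 10, 4), 9),
]
diffs = difference_from_previous(sample)
print(diffs[date(2021, 10, 2)], diffs[date(2021, 10, 4)])  # 4 -5
```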

Overall, it is going to be a messy calculation: you have to add an end-time column to each row and then, essentially, exclude rows that fall within the start and end time of another row. And that is the easy case compared with partial overlap. I might work on this some more, since it is interesting.
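The "easy" case described here, dropping rows that sit entirely inside another row, might look like the following Python sketch. It assumes each row is a (start, downtime in seconds) pair and uses the made-up name `drop_contained`; partial overlaps are deliberately left untouched, so it is not a complete solution.

```python
from datetime import datetime, timedelta

def drop_contained(events):
    """Keep only rows that are not fully inside another row's start/end span."""
    spans = [(s, s + timedelta(seconds=d)) for s, d in events]
    kept = []
    for i, (s, e) in enumerate(spans):
        contained = any(
            j != i
            and other_start <= s
            and e <= other_end
            # Identical spans: keep only the first occurrence.
            and ((other_start, other_end) != (s, e) or j < i)
            for j, (other_start, other_end) in enumerate(spans)
        )
        if not contained:
            kept.append(events[i])
    return kept

# Rows for machine_id = 1 from the original question.
machine_1 = [
    (datetime(2021, 10, 10, 7, 3), 100),
    (datetime(2021, 10, 10, 7, 4), 30),   # ends 7:04:30, inside the first row
    (datetime(2021, 10, 10, 7, 6), 300),
]
kept = drop_contained(machine_1)
print(len(kept), sum(d for _, d in kept))  # 2 400
```

The remaining 400 seconds are not yet limited to a reporting period, and two rows that only partly overlap would still be double-counted.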
