FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Power BI Incremental Refresh fails with Too Ma...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Power BI Incremental Refresh fails with Too Many Requests

Hi,

I'm trying to use the Incremental Refresh functionality in PBI Dataflows by connecting to 3 different tables via ODATA feed. All these tables are part of the same dataflow.

If I refresh the dataflow without using Incremental refresh, everything is refreshed in about 18 minutes. I'm trying to improve the performance as much as I can, so I enabled the Incremental Refresh. The initial refresh now fails with Too Many Request error message.

Just for test purposes, I split each table in a separate dataflow, and I noticed that one of them successfully completed, but the other 2 still fail. Below are the tables and # of rows.

Even though there is no big difference between Table 2 and Tables 3, Table 2 fails all the time when trying to read the same batch of data.

Entities | # of Rows | Incremental refresh status |

Table 1 | 902,960 | 429 – too many requests |

Table 2 | 202,130 | 429 – too many requests |

Table 3 | 171,890 | Completed in about 5 seconds |

Since the refresh works when not using incremental refresh, do you know what can cause getting Too Many Requests error message? Do you know what's the mechanism behind when using and when not using incremental refresh?

Thanks,

Teodora

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

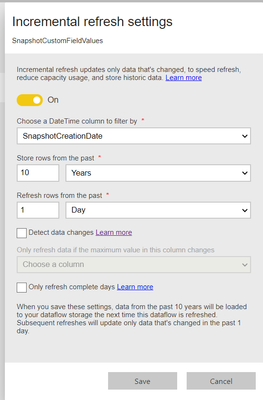

What do you have for store and refresh parameters? Incremental refresh will make a call for each date granularity in the refresh range--so if you have 10 days set, it'll make 10 calls, if you have 2 months, it'll make two--if you have 60 days, it'll make 60 calls. Theses may be executing concurrently.

can you move the queries to separate dataflows?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Jeff,

I moved each table in a separate dataflow and noticed that one of them successfully completed. Even though there is no big size difference between Tables 2 and Table 3, Table 2 fails.

The parameters I currently set are to bring data from the last 10 years and the granularity is 1 day. I think that might be one of the issues, at least for Table 1. I will give it a try to store data only for the last 2-3 years and the granularity to be weekly. Will come back with the end result, thanks for your help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In my example here, initial refresh will query last 5 full years (so starting 2015-01-01), each refresh afterwards will clear out the previous 3 days and make three calls to refresh for each day.

DateTime column should be the create date for a record--don't use modified date. Incremental refresh is for appending new records, the only records updated will be those in the "refresh rows" range (since that data is cleared out and requeried).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm using Creation Date, I know that if I use Update Date I will start having duplicates.

This is the current configuration, the initital refresh is the one that fails. If I don't use incremental refresh, the initial refresh completes in about 18 minutes.

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!