Output of a calculated measure not changing in response to slicer selections

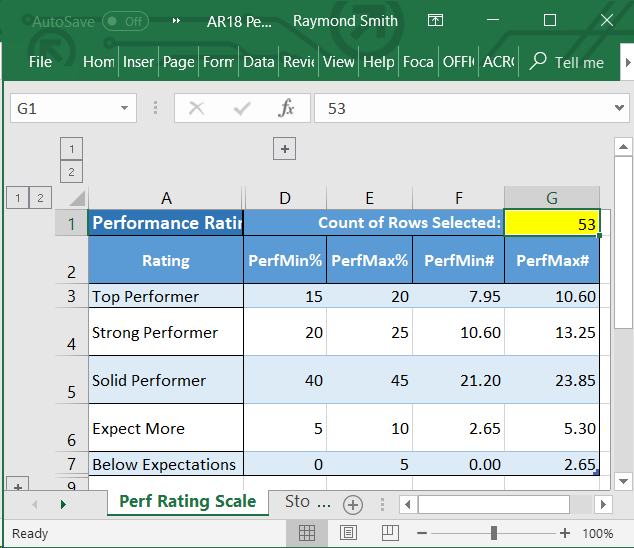

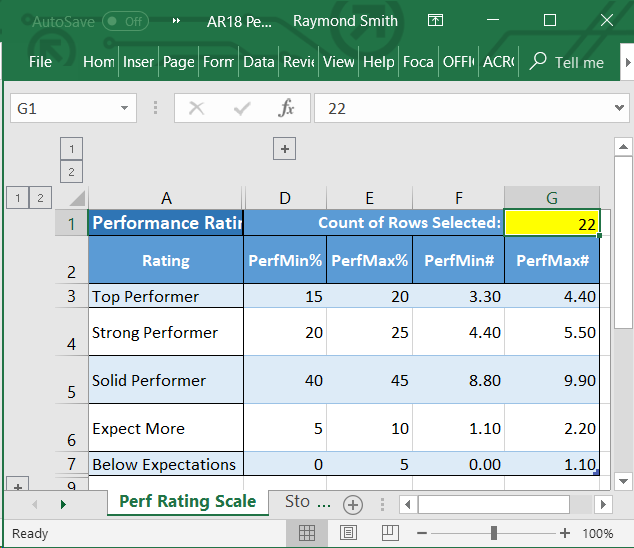

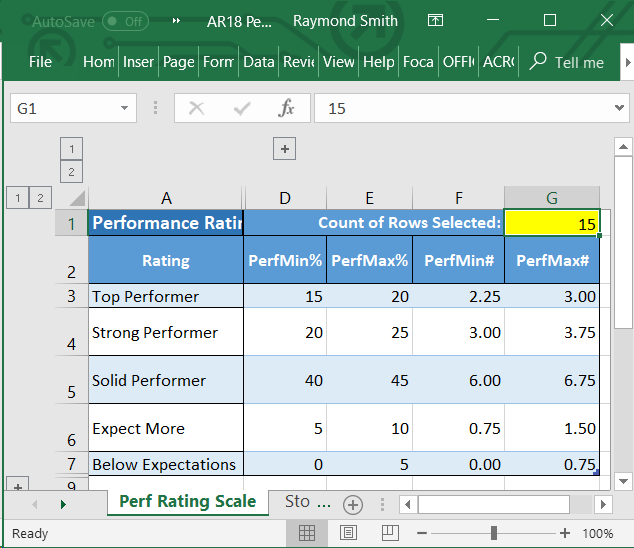

The output of a calculated measure (see Figure #3 below) is not changing in response to slicer selections in a visualization of a related (primary or central) table (see Figure #’s 4, 5, 6 below).

However, a different instantiation of the same measure using identical syntax in the visualization sheet (see Figure #4 below) and, presumably, a different context is changing in response to slicer selections (see Figure #’s 5, 6).

So clearly the context of the [PerfMin#] calculation in the related ‘Perf Rating Scale’ lookup table (see Figure #3) is not correct.

I’ve tried numerous options with both syntax (referencing or not referencing table and/or column, etc) and DAX functions (RELATEDTABLE, ALLEXCEPT, et al) without success.

Since we have different rating distribution targets for each performance rating level, the objective of the [PerfMin#] and [PerfMax#] measures is to calculate specific target range #’s based on the resultant count of employees (rows) for any given slicer selection.

So the final or desired [PerfMin#] measure would be as follows:

PerfMin# = CALCULATE( COUNTROWS('Calibration Detail Data'), ALLSELECTED('Calibration Detail Data') ) * ('Perf Rating Scale'[PerfMin%]/100)

[Note that the right operand, “… ('Perf Rating Scale'[PerfMin%]/100) …”, was dropped in the examples below to better highlight that the left operand result was not changing in response to slicer selections.]
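As a purely illustrative worked example of the intended arithmetic (the numbers here are made up and are not taken from the figures): if a slicer selection leaves 40 employees and the [PerfMin%] for a given rating is 10, the target minimum for that rating would be 4.

```latex
\[
\text{PerfMin\#} = N_{\text{selected}} \times \frac{\text{PerfMin\%}}{100},
\qquad \text{e.g.} \quad 40 \times \frac{10}{100} = 4
\]
```

Here $N_{\text{selected}}$ is just shorthand for the row count returned by the left operand; it is not a name used in the model.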

The correct results for the selections, or lack of selections, shown in Figure #’s 4, 5, 6 are captured in Figure #’s 7, 8, 9 (see below).

The ultimate objective is to plot [PerfMin#] and [PerfMax#] on the Performance Rating Distribution graph to show how the actual ratings compare to the target ratings.

Any suggestions on how to solve this problem?

Thanks,

![Figure #2.png Figure #2: Data relationship between ‘Perf Rating Scale’ and ‘Calibration Detail Data’ Tables (‘Perf Rating Scale’[Perf Rating] has a 1 to many relationship with ‘Calibration Detail Data’[Performance Rating])](https://community.fabric.microsoft.com/t5/image/serverpage/image-id/117543i5B05969DDDCDABAA/image-size/large?v=v2&px=999)

![Figure #3.png Figure #3: Calculated measure in ‘Perf Rating Scale’ Table, which is used to generate the ‘Perf Rating Scale’[PerfMin#] calculated column](https://community.fabric.microsoft.com/t5/image/serverpage/image-id/117541i436911D7F4681724/image-size/large?v=v2&px=999)

![Figure #4.png Figure #4: 2 data visualizations using the same calculated measure: one using [PerfMin#] from ‘Perf Rating Scale’ table and the other using [Rows] calculated directly on visualization (and, strictly speaking, a 3rd measure [Selected Employees] producing the same intended output by counting the number of distinct names in the ‘Calibration Detail Data’[Name] column)](https://community.fabric.microsoft.com/t5/image/serverpage/image-id/117540iE6FF18F31C66B3DB/image-size/large?v=v2&px=999)

![Figure #5.png Figure #5: Output of [PerfMin#] measure DOES NOT change but output of [Rows] (and [Selected Employees]) measure DOES change in response to selecting “Strong Performer” on Performance Rating Distribution graph](https://community.fabric.microsoft.com/t5/image/serverpage/image-id/117544i1A025C30C695DCA6/image-size/large?v=v2&px=999)

![Figure #6.png Figure #6: Output of [PerfMin#] measure DOES NOT change but output of [Rows] (and [Selected Employees]) measure DOES change in response to selecting “Engineer” in the Job Level slicer (filter)](https://community.fabric.microsoft.com/t5/image/serverpage/image-id/117545i83E876D53531A958/image-size/large?v=v2&px=999)

Hi @raimund

You may try to create a measure instead of a calculated column for PerfMin#, as below:

    PerfMin# =
    VAR a =
        CALCULATE (
            COUNTROWS ( 'Calibration Detail Data' ),
            ALLSELECTED ( 'Calibration Detail Data' )
        )
    RETURN
        CALCULATE ( a * MAX ( 'Calibration Detail Data'[PerMin%] ) / 100 )

Regards,

Cherie

If this post helps, then please consider accepting it as the solution to help other members find it more quickly.

Thanks for the reply, Cherie.

Thought about this approach but wasn't sure it would use the correct percentages for each different performance rating.

After referencing the correct table, the calculated measure seems to generate the correct results!

Here's the updated expression:

    PerfMin# =
    VAR a =
        CALCULATE (
            COUNTROWS ( 'Calibration Detail Data' ),
            ALLSELECTED ( 'Calibration Detail Data' )
        )
    RETURN
        CALCULATE ( a * MAX ( 'Perf Rating Scale'[PerfMin%] ) / 100 )
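For anyone following along: a calculated column is evaluated when the data is refreshed, so it cannot react to slicers, whereas a measure is evaluated at query time in the current filter context. The companion [PerfMax#] measure could presumably follow the same pattern as the expression above. This is only a sketch: it assumes the 'Perf Rating Scale' table has a [PerfMax%] column alongside [PerfMin%], which is not shown anywhere in this thread, and the variable name is arbitrary.

```dax
PerfMax# =
// Assumption: 'Perf Rating Scale'[PerfMax%] exists and holds a percentage (e.g. 25 for 25%).
// Count the employees (rows) that remain after all slicer selections,
// ignoring the per-rating filter applied by the visual itself.
VAR SelectedEmployees =
    CALCULATE (
        COUNTROWS ( 'Calibration Detail Data' ),
        ALLSELECTED ( 'Calibration Detail Data' )
    )
RETURN
    // MAX picks up the single percentage for the rating in the current filter context.
    CALCULATE ( SelectedEmployees * MAX ( 'Perf Rating Scale'[PerfMax%] ) / 100 )
```

With both measures placed on the Performance Rating Distribution graph, each rating category should then get its own target range, provided the axis uses the rating column that filters 'Perf Rating Scale'.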

Thanks for your help,