FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Join the Fabric FabCon Global Hackathon—running virtually through Nov 3. Open to all skill levels. $10,000 in prizes! Register now.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: New to BI. Writing SQL queries to parse data. ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

New to BI. Writing SQL queries to parse data. I am obviously missing something.

Started using Power BI on the recommendation of a friend. It looks well made and powerful, so I hope to stick with it.

I am pretty decent with SQL and can write some lengthy queries. My goal is the following:

1) Pull data from out databse

2) Write queries in power BI

3) Publish dashboards / reports based on those queries

I have connected to our database, so step 1 is done.

What I am stuck on is where / how to write actual SQL queries. I want to be able to write a query such as SELECT * FROM shop WHERE X LIMIT 100 and just have it spit out the data, so I can manipulate it, put it into dashboards, and create charts, so later on I can push it to the cloud.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1) No, that SQL statement box is actually for SQL statements as you would normally write, not for the Power Query language (it's called M. I'm just going to call it that now). It's just not generally recommended to use that feature at all. You don't even have to write M language formulas yourself. The buttons generate them. If you feel ambitious you can write them yourself but it's rarely necessary unless you're doing really crazy stuff.

You are advisng against filtering the data via SQL before I load the data into Power BI? I should filter the data after it is in Power BI via M or the visual querying tools?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Personally, as a SQL person first and foremost who is now working in PowerBI, I would have to say, I take the opposite approach and also advise others to take the opposite approach as well if performance is at all importand.

When you do all fo the filtering after you bring in the data, it has a much higher impact on the SQL server every time you refresh the data. Plus, it has to transport everything across the network just to throw it away.

My rules personally would be as follows:

For a one time data pull - not something that will be regularly refreshing OR if you can't write decent SQL code OR if you database is on a strong server and is in the MB or low GB size and not TeraBytes:

1. Connect to SQL as the source from Power BI

2. Take the easy way, click all fo the tables that you want.

3. Click Edit and make and changes that you want via M (either point and click or with programming)

4. Apply and Save

5. If you need - add additional calculated columns or measures via DAX.

6. Design away

If this is a dashboard or report that will be getting refreshed AND can write select statements with SQL

1. Open your favorite query writing tool. You can do it in PowerBI, but no Intellisense, no formatting, no easy debugging, etc.

2. Write the query and play around with it until you are happy.

3. In PowerBI, Use SQL as the source and paste the query that you copied from the other tool. This nice thing is, you can continually refresh and get new data without having to start from scratch.

4. If you need to make changes easier done in M than SQL, make them after importing the query.

5. Continue as above.

Just my thoughts. I feel sorry for the DBAs trying to maintain performance on their servers as we start hitting them with more and more UserBI apps that are pulling everything on earth just to throw it away after.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is exacly what we have been doing with one additional step which may not be applicable for every one.

When we want to refresh/schedule the data and if we are using the first approach to import all the tables, the performance of the SQL server will be impacted.

1. I have written a query to pull all the filtered data from multiple tables with huge number of rows.

2. Schedule a job to retreive all the information and enter into a different table in a different database.

3. Schedule a refresh with in PowerBI to get the updated data.

Reasons to do this: The only SQL server we have is being used by multiple SQL developers to run reports every day. When we try to extract the data with either of these approaches, it impacts the performance of the SQL server. By doing this, the data will be retreived overnight and copied into a different database. We use this database to connect through PowerBI and providing datasets to the Finance team. It has been working so far. I am not saying this solution will work for everyone, but in order to avoid the perf, issues, we thought this might be the best way.

I would be happy to hear if anyone has any other ideas to make it more efficient. Thanks for reading!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I would like to add here, in Power BI Desktop, query Editor, you have the option to disable the load query so the query is not refreshed. When you disable the load, you will not slow down the performance of the servers and will improve the performance of Power BI Desktop report.

Cheers

Elizabeth Tachjian

Analytics Realtime

elizabeth@analyticsrealtime.com.au

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>You are advisng against filtering the data via SQL before I load the data into Power BI?

Yes I am, but not strongly. You can do it if you're more comfortable in SQL. However if you're going to use SQL I do recommend doing it the way @sdjensen demonstrated. That's how I do it too, but only when necessary. Basically I only do it when I've determined that my own SQL code will run significantly more efficiently than what Power BI would run on its own. For me that's mostly cases of really weird seven-table joins on multi-column criteria. Otherwise I usually just click buttons and let it do its thing.

You can write your own M code if you feel ambitious. There are a few things that can only really be done by writing the code, but they're rare and weird. Any regular query tasks can be accomplished by clicking buttons in the editor. You'll have to learn a little bit of M code if you want to start adding custom columns, but it's easy.

Moving from SQL to Power BI's query builder can feel a bit weird because they look very different and the order of operations seems backward. In SQL you tell it to select some columns from a table. In Power Query you tell it to connect to a table and keep the columns you want. The two do the same thing but they're written (or generated via the visual tools) in the opposite order. But that's not really how it runs. In the editor it's just loading a preview for you to play with. As I understand it when the query actually runs it isn't pre-loading an unfiltered table and then filtering it. It actually translates back to a regular SQL select statement. The nice thing about M and the query builder is that you can mashup all sorts of sources, so you can do wacky things like a left outer join of your SQL table with a table off a Wikipedia article. The query is all in one language, but the SQL query still happens behind the scenes.

It's worth learning to do things the regular query builder way even if you're going to embed your own SQL in most cases. It can do things that SQL can't, and learning to use it properly will give you a better handle on what's going on in your Power BI models in general.

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When I select from a SQL source in PBI Desktop I always make my queries in the Query Editor like this. I prefer using the advanced editor to write the M# code myself, and use parameters to have my values for Server and Database. I do this for 2 reasons - I can easily create a new query by dublicating one of my other queries and then just replace the T-SQL code and I just have to change 2 values if my data source changes since I just change the parameters and then don't have to change all my queries.

let

Source = Sql.Database(Server, Database, [Query="

--SQL Query Start

SELECT

a.DWID_Customer AS 'CustomerKey'

, a.CustomerNo AS 'Customer No'

, a.Name AS 'Customer Name'

, a.CustomerLabel AS 'Customer'

, a.CustomerPostingGroup AS 'Customer Posting Group'

, a.Country AS 'Customer Country'

, a.City + ', ' + a.Country AS 'Customer City'

, a.SalespersonCode AS 'Salesperson Code'

, a.SalespersonName AS 'Salesperson Name'

, a.SalespersonName + ' - ' + a.SalespersonCode AS 'Salesperson'

FROM D_Customer a

--SQL Query End

"])

in

Source

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sdjensen

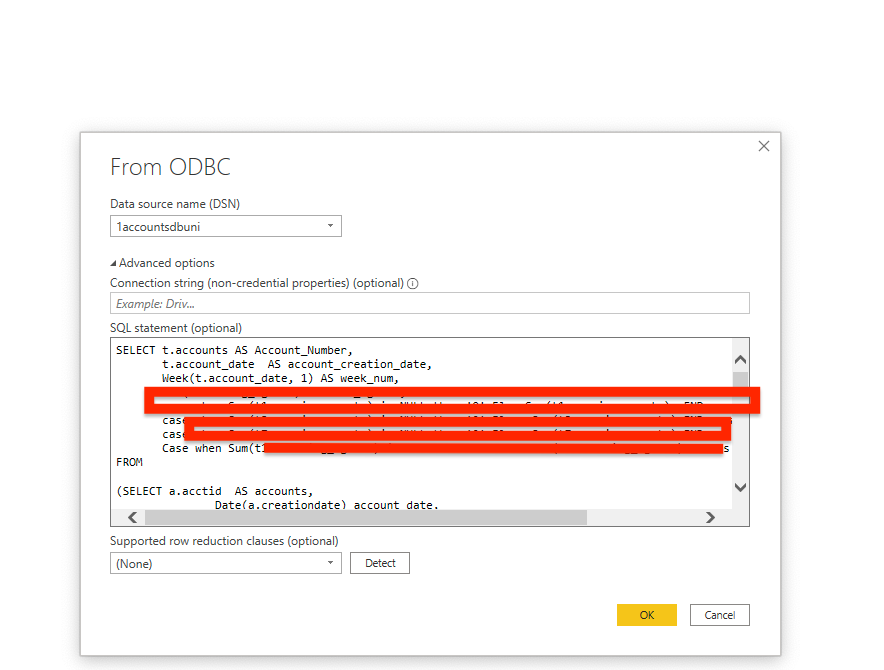

I'm using MYSQL and connecting to the server using ODBC connection.

I'm very new to power bi and I want to do something similar. Copy paste my query into power bi and load the data. How do I go about it? The syntax i'm using is similar to yours.

Source = Odbc.DataSource("dsn=1accountsdbuni", [Odbc.Query=

--- Query here ----])

Syntax works fine but I'm getting following error:

Expression.Error: The provided options are not valid. Details: [List]

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you tell me what steps you are following to get to the point where you are receiving the error? I don't have a MySQL to play with, but I may be able to spot where there is a problem.

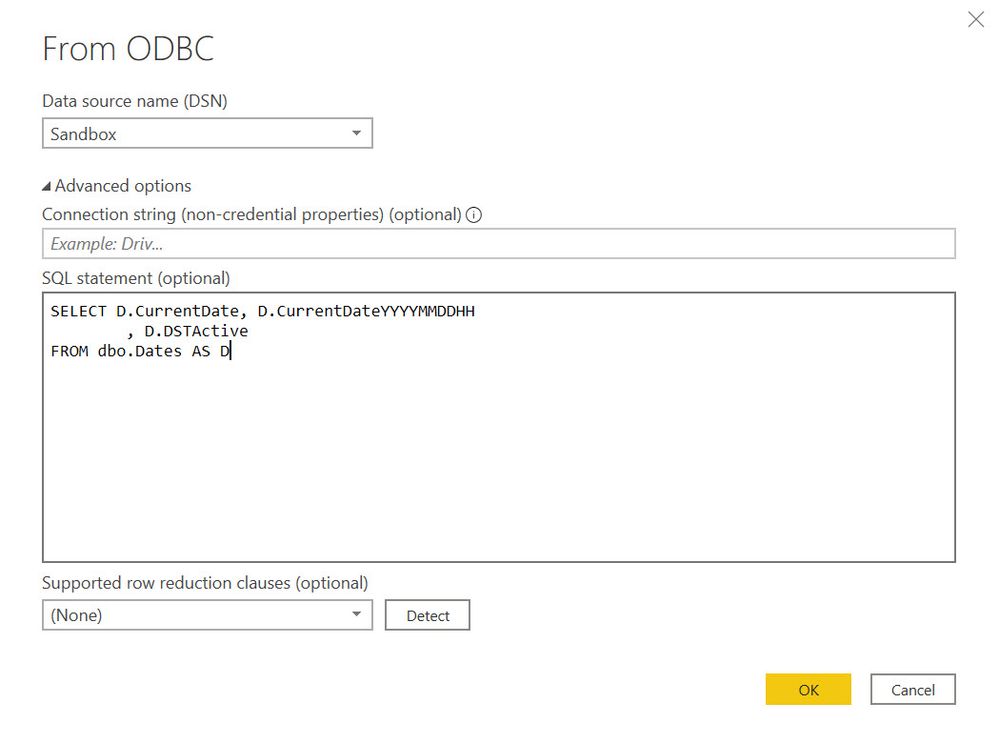

I assume when you connect to the ODBC source, after selection yoru DSN in the From ODBC window, then you add your query, exactly as it is in the MySQL editor into the SQL statement (optional) box, then get your error when you click OK. Is there anything else going on?

Have you tried using the MySQL connector?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@alwweb

I'm trying to run a MYSQL query within Power BI. I have connected to MYSQL using an ODBC connector. I was facing issues connecting directly with MYSQL and hence went the ODBC route and that worked for.

Now all I want to do is run a MYSQL query I have written with multiple joins and everything within Power BI. The query runs fine and gives me result in a MYSQL client but unable to make it run in Power BI.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@newpi Thank you for the additional info.

I just tested an ODBC connection to one of my Microsoft SQL databases (this is all I have right now) and it worked. I created an ODBC connection that said it was 32/64 bit and a User DSN. I would assume it needs to match the PowerBI "bitness" and the server bitness.

I then chose Import from ODBC and my DSN and query was as shown in the image below. It connected without any errors. On the security page, I picked Windows security, but make sure that you are connecting with a method supported by MySQL. ODBC connections can be weird, so I'm sorry that I don't have a MySQL server to test this with to help you out.

Please let me know if this helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

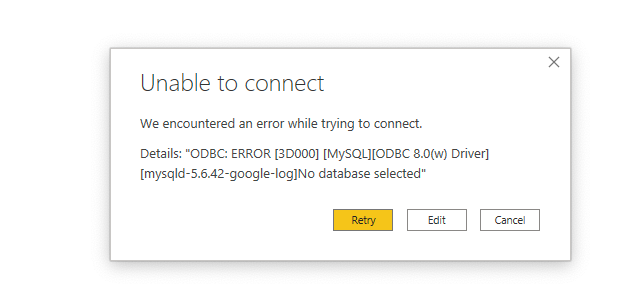

@alwweb Doing the same steps and when I click ok. I get the error of no database selected.

Whereas If I run without this query, I'm able to connect to the data from the pop up and selecting raw tables.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@newpi - my default database name is part of my DSN definition on a page with an option to change the default database.

If your databasename can't be defined in the DSN, is there a way to set it in the connection string above the SQL statement by using the database= option?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@alwweb Thanks. Just solved this. I just had to modify my query. In MYSQL client I was just writing and doing a select * from table name and that was working fine there but in power bi I had to do Select * from database.tablename and it worked.

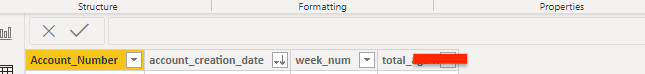

Also, but I'm facing another issue here. I have close 8 columns in my output but in the power bi after connecting with SQL optional statement I'm only seeing first 4 columns and missing the others. This query has multiple left joins so I don't know if that is the issue.

Power BI Columns

All columns in MYSQL client

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is now solved. The 4 missing columns data was loading as Binary and I converted it to text type in the query editor and could load all columns

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@newpi So glad you figured that out. I was not even considering that as one of the troubleshooting steps I was trying to put together for you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@sdjensen wrote:When I select from a SQL source in PBI Desktop I always make my queries in the Query Editor like this. I prefer using the advanced editor to write the M# code myself, and use parameters to have my values for Server and Database. I do this for 2 reasons - I can easily create a new query by dublicating one of my other queries and then just replace the T-SQL code and I just have to change 2 values if my data source changes since I just change the parameters and then don't have to change all my queries.

let Source = Sql.Database(Server, Database, [Query=" --SQL Query Start SELECT a.DWID_Customer AS 'CustomerKey' , a.CustomerNo AS 'Customer No' , a.Name AS 'Customer Name' , a.CustomerLabel AS 'Customer' , a.CustomerPostingGroup AS 'Customer Posting Group' , a.Country AS 'Customer Country' , a.City + ', ' + a.Country AS 'Customer City' , a.SalespersonCode AS 'Salesperson Code' , a.SalespersonName AS 'Salesperson Name' , a.SalespersonName + ' - ' + a.SalespersonCode AS 'Salesperson' FROM D_Customer a --SQL Query End "]) in Source

Could someone please spell this out easier for me? I am not a SQL guy but really need to go this route with my complex BI model. I have many tables loading (some with millions of rows that I would like to try and filter before they load into query editor first) and many editor steps that I feel could be reduced by SQL coding doing a lot of the joins and filters I need done.

My model times out and fails scheduled refresh during business hours a lot due to everything taxing on the SQL server.

Is there a good beginniners book to SQL I could pick up to maybe learn enough to join and filter as I need too?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If the SQL refreshes are timing out, you are probably correct that you are pulling too much data at once, but, doing all of the joins in the SQL may also slow it down more.

I am a SQL person first and a Power BI person second, so I look at things a little differently from most people on this forum.

1) I like bringing in all of the tables individually and using the relationships in Power BI rather than "flattening" my data source by doing all of the joins when pulling in the data. This gives me more power for creating my formulas, etc inside of Power BI.

2) I would prefer to bring in one table at a time with a query for each tables (some denormalization where it makes sense by doing the joins) if I need to remove a lot of columns or some of the rows. This way, if the SQL database is optimized properly, it will take much less time to refresh. Rather than pulling in all of the data and then removing a bunch, we only pull what we need.

On your comment from page 3 that I responded to earlier. I realized you missed where the other user was putting in the M code. It is after the import happens, by clicking Advanced Editor on the Home tab of the Power Query Editor.

For books, to understand the concept of normalization that I mentioned earleir in this comment, I recommend any of the edtitions of Jan Harrington's Relational Database Design Clearly Explained. I like the 1st edition best if you can find it in a used book store.

For writing T-SQL (MIcrosoft's flavor of the SQL language - stands for Transact-SQL) there are a huge number of books to choose from. If you are going to be doing a lot of query writing and don't have an IT team to create VIEWS that already have what you need in them, I recommend taking a 3 day course. If you like learning on your own, one of the most popular books are the ones by Itzik Ben-Gan. Either T-SQL Querying or SQL Fundamentals.

I hope some of this helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Where does the data reside?

Elizabeth Tachjian

Analytics Realtime

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Credentials will be entered in the next dialog, not the one in my screenshot. Yes, it'll be regular SQL.

- Yes

- Yes

- UI buttons are just an easier way of doing things, if you prefer writing your own SQL it's also fine 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

OK. The online service is pretty restricted as far as writing complex queries and measures. I just wanted to make sure that you were in the right place to see the tools I was describing.

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!