Join the #PBI10 DataViz contest

Power BI is turning 10, and we’re marking the occasion with a special community challenge. Use your creativity to tell a story, uncover trends, or highlight something unexpected.

Get started- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Webinars and Video Gallery

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- R Script Showcase

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Join us for an expert-led overview of the tools and concepts you'll need to become a Certified Power BI Data Analyst and pass exam PL-300. Register now.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- How to filter the count of rows using date column

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to filter the count of rows using date column

I am very new to powerBI , I am trying to calculate the percentge of my passing devices. Below is the sample of my data.

and this is what i am trying to translate from sql to power BI

Declare min_date DATETIME = '2020-1-13 00:00:00'

Declare max_date DATETIME = '2020-1-14 00:00

SELECT 100 *(

(SELECT

(SELECT count (distinct SerialNumber)

FROM TTable where

(CAST( RequestTime AS DATETIME) between CAST(min_date AS DATETIME) And CAST(max_date AS DATETIME))) -

(Select count(*) as retried

from ( SELECT SerialNumber,

count (*) - SUM (CASE when status = 'P' THEN 1 ELSE 0 END) as TotalRetries

FROM TTable where

(CAST( RequestTime AS DATETIME) between CAST(min_date AS DATETIME) And CAST(max_date AS DATETIME))

Group by SerialNumber

HAVING count (*) - SUM (CASE when status = 'P' THEN 1 ELSE 0 END) > 0 ) retried ) AS Difference ))

/ ((SELECT count (distinct SerialNumber)

FROM TTable where

(CAST( RequestTime AS DATETIME) between CAST(min_date AS DATETIME) And CAST(max_date AS DATETIME))) + 0.0

) as PassingData ;

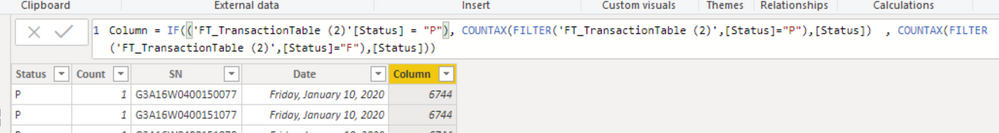

I have grouped the data in native query of power BI and able to get total count. I am also able to get the total count of all Pass and Fail by adding a new column, but when i filter the date the count doesnt change. i want the count to change based on date How can i do that.

Also how can i subtract the fail count from pass count and divide it by pass count and count should be be updated as per date

something like below :

(passcount - fail count) / pass count

| SerialNumber | DateTime | Status |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | F |

| G0002 | 2019-09-09T16:01:50.7030000 | F |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | F |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0002 | 2019-09-09T16:01:50.7030000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | F |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:12:00.2370000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | F |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:48:14.9470000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | F |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

| G0001 | 2020-01-02T12:54:39.1630000 | P |

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

1) Just drag the date column to the dashboard.

2) Create those measures:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

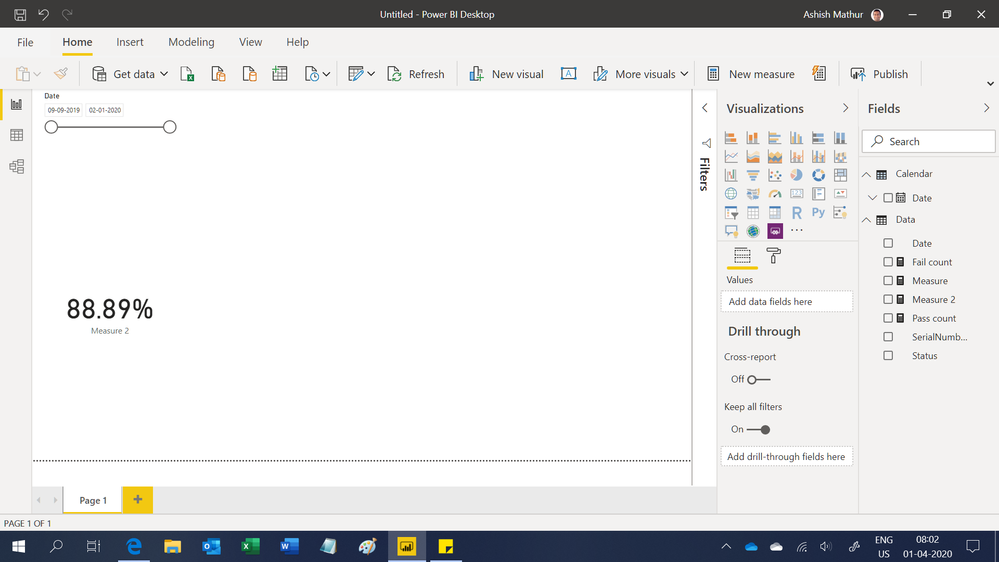

Hi,

You may download my PBI file from here.

Hope this helps.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous ,Try a new column like

divide(

(countx(filter(table,table[SerialNumber] = earlier(table[SerialNumber]) && table[status]="P"),table[status])

- countx(filter(table,table[SerialNumber] = earlier(table[SerialNumber]) && table[status]="P"),table[status])),

countx(filter(table,table[SerialNumber] = earlier(table[SerialNumber]) && table[status]="P"),table[status])

)

Or a measure like this

divide(

(countx(filter(table,table[status]="P"),table[status])

- countx(filter(table, && table[status]="P"),table[status])),

countx(filter(table, table[status]="P"),table[status])

)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@amitchandak I tried the measure by making few modifications and it worked like this

divide(

(countx(filter(table,table[status]="P"),table[status])

- countx(filter(table, table[status]="F"),table[status])),

countx(filter(table, table[status]="P"),table[status])

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

You may download my PBI file from here.

Hope this helps.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tried linking the two tables and it gives the data between two days like i wanted.

Thank you all so much for your support. Really appreciate the help. Was stuck on this for 2 days and tried alot of tutorials.

You guys are magicians. Thank you 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are welcome. If my reply helped, please mark it as Answer.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

1) Just drag the date column to the dashboard.

2) Create those measures:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oh Awesome , Thankyou it really works.

One more question. As of now it gives the per day division. What can i do if i want to find the division result between selected dates .

for example I am getting result at

div result on 3rd jan

div result on 4th jan

div result on 5th jan

what if i want one div result from 3rd - 5th jan

Helpful resources

Join our Fabric User Panel

This is your chance to engage directly with the engineering team behind Fabric and Power BI. Share your experiences and shape the future.

Power BI Monthly Update - June 2025

Check out the June 2025 Power BI update to learn about new features.

| User | Count |

|---|---|

| 65 | |

| 56 | |

| 54 | |

| 36 | |

| 34 |

| User | Count |

|---|---|

| 84 | |

| 73 | |

| 55 | |

| 45 | |

| 43 |