Join the Fabric User Panel to shape the future of Fabric.

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

Sign up now- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric certified for FREE! Don't miss your chance! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Change pbix data source programmatically

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Change pbix data source programmatically

Hi everyone,

I want to know wheter is it possible to change the data source from a pbix file programmatically.

I want to use the same report for different customer that use different data bases, with the same schema.

Currently, I develop a report using an internal data base and then I change this report data source for every customer and I upload the pbix file to them power bi account. So, what I want to do is to make this process programmatically to do it faster and avoid possible errors.

Thanks in advance.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JorgeDiego,

As you said, power bi not support to direct modified the datasource without open the pbix file, perhaps you can submit your requirement to ideas.

In addition, you can try to store your connection string to a config file, then write a custom function to analysis this file. After these steps, you can change the datasource without open the pbix file.(After you modified the config file, you should refresh it at pbix report to get the newest data)

Sample:

let

xmlTable = Xml.Tables(File.Contents("C:\Users\xxxxx\Desktop\test.xml")),

server=xmlTable[Server]{0},

database=xmlTable[Database]{0},

Source= Sql.Database(server,database)

in

Source

Xml:

<config> <Server>"abc"</Server> <Database>"edd"</Database> </config>

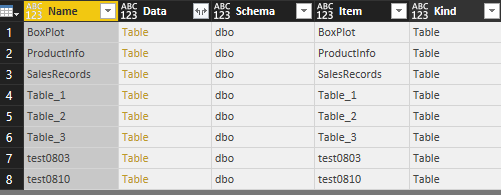

Screenshots:

Regards,

Xiaoxin Sheng

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also interested in this option; here's what I've found so far:

1. The pbit/pbix files are basic zip files, with the extension changed; you can unzip and rezip and PowerBI will recognize them

2. The DataMashup file within the archive is also an archive, but it is NOT a basic zip file; unzipping and rezipping will not be recognized by PowerBI... but this archive contains Section1.m which has the query source definitions (likely what we need to change programmatically to achieve Jorge's desired solution)

3. DataMashup seems to be a MS-QDEFF file: https://docs.microsoft.com/en-us/openspecs/office_file_formats/ms-qdeff/e6377721-700b-411c-9d4f-3fc0...

So at least the potential workflow for programmatically changing the source would be:

a. upzip the pbit/pbix archive

b. parse/unpack the DataMashup file, following the MS-QDEFF structure rules

c. change Section1.m

d. repackage DataMashup, following the MS-QDEFF structure rules

e. rezip the entire archive, including the new DataMashup and change the extension back to pbit or pbix

Python's zipfile library can do steps (a) & (e) easily, and basic python string manipulation can handle (c). Challenge is writing some functions to handle the MS-QDEFF parsing/writing...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We have a lot of data in our company. Currently, we rely on manually creating ad hoc Power BI reports for each customer. Ideally, we could use programming languages such as Java, .NET, Python, or R to handle the ETL work and then use code to generate Power BI reports by inserting built-in visuals and publishing them automatically. I found that the REST API can push datasets into the workspace. There are also Power BI development tools for creating customized visuals. However, for our use case, the typical built-in visuals are good enough. My question is: is there any way I can use a programming language to insert built-in visuals in Power BI?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Got it I think.

Here's Python code to unpack, change and repack the *.pbit programmatically (haven't tested on *.pbix):

----

import zipfile

import io

main_pbit = r"target_full_path_to_pbit/file.pbit"

revised_pbit = r"target_full_path_to_save_pbit/file_new.pbit"

'''

Key Functions

'''

def edit_Section1(old_text,new_text,datamashup_byte_stream):

byte_dict = {}

with datamashup_byte_stream as f:

f.seek(0)

#parsed bytes based on the microsoft MS-QDEFF documentation (thank you MS!)

byte_dict['version'] = f.read(4)

byte_dict['pkg_parts_len'] = f.read(4)

byte_dict['pkg_parts'] = f.read(int.from_bytes(byte_dict['pkg_parts_len'],byteorder='little'))

byte_dict['perm_len'] = f.read(4)

byte_dict['perm_var'] = f.read(int.from_bytes(byte_dict['perm_len'],byteorder='little'))

byte_dict['meta_len'] = f.read(4)

byte_dict['meta_var'] = f.read(int.from_bytes(byte_dict['meta_len'],byteorder='little'))

byte_dict['perm_bind_len'] = f.read(4)

byte_dict['perm_bind_var'] = f.read(int.from_bytes(byte_dict['perm_bind_len'],byteorder='little'))

mashup_f = io.BytesIO(byte_dict['pkg_parts'] )

test_zip = zipfile.ZipFile(mashup_f,mode='r')

archive_contents = test_zip.filelist

#step into the package parts of the archive, which contains the query definitions

with test_zip.open('Formulas/Section1.m','r') as section1_f:

section1_text = section1_f.read()

'''

Do all your text swapping/manipulation here

'''

section1_revised = section1_text.replace(old_text,new_text)

#create a new zip file in memory

zip_buffer = io.BytesIO()

zip_archive = zipfile.ZipFile(zip_buffer,mode='w',compression=zipfile.ZIP_DEFLATED)

for ac in archive_contents:

if ac.filename == 'Formulas/Section1.m':

zip_archive.writestr('Formulas/Section1.m',data=section1_revised)

else:

with test_zip.open(ac,'r') as temp_f:

temp_bytes = temp_f.read()

zip_archive.writestr(ac,data=temp_bytes)

#close out streams

zip_archive.close()

test_zip.close()

#go back to start of bytestream and re-read it, finding new package parts and package parts length

zip_buffer.seek(0)

byte_dict['pkg_parts'] = zip_buffer.read()

byte_dict['pkg_parts_len'] = len(byte_dict['pkg_parts']).to_bytes(4,byteorder='little')

f.close()

new_mashup = io.BytesIO()

#write out the revised DataMashup file (in correct order, from ordered list)

for b in ['version','pkg_parts_len','pkg_parts','perm_len','perm_var','meta_len','meta_var','perm_bind_len','perm_bind_var']:

new_mashup.write(byte_dict[b])

return new_mashup

'''

Main Code

'''

main_zip = zipfile.ZipFile(main_pbit,mode='r')

main_archive_list = main_zip.filelist

new_zipfile = zipfile.ZipFile(revised_pbit,mode='w',compression=zipfile.ZIP_DEFLATED)

for al in main_archive_list:

if al.filename == 'DataMashup':

with main_zip.open(al.filename,'r') as datamashup_byte_stream:

#Do any text parsing on the source definitions here

revised_DataMashup = edit_Section1(b'CHANGETHISTOSOMETHINGELSE',b'Hello_world',datamashup_byte_stream)

revised_DataMashup.seek(0)

final_DataMashup = revised_DataMashup.read()

new_zipfile.writestr('DataMashup',data=final_DataMashup)

else:

with main_zip.open(al,'r') as temp_f:

temp_bytes = temp_f.read()

new_zipfile.writestr(al,data=temp_bytes)

#close out streams

main_zip.close()

new_zipfile.close()

----

For this demo I just made a very basic pbit file with a data source and a custom column:

let

Source = ...

...

#"Added Custom" = Table.AddColumn(#"Removed Other Columns", "Custom_Col", each "CHANGETHISTOSOMETHINGELSE")

in

#"Added Custom"

... and it looks like we can swap out the column text "CHANGETHISTOSOMETHINGELSE"-->"Hello_World" fine. To swap out other parts of the M query source, I think it's just a matter of the specific text parsing for section1.m

Next step will be to figure out how to pull/push these files to/from webservice, but hopefully this is a useful piece for others.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

can we rename power bi embedded report using asp.net mvc

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi. I copy File pbix with the xlsx file to another computer and I want to refresh BI file using .net. How can I modify DataMashup file or another way to refresh connections? I do not want to use interface, to swap data source file. Some one of you try this before?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Any luck with modifying the data mashup file ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Pbix is just a zip package. Can you open the package and change the connection file in it. All this can be done programmatically.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

public static class Misc{

public static void UpdateConnection(string filepath)

{

using (ZipArchive archive = new ZipArchive(File.Open(filepath, FileMode.Open), ZipArchiveMode.Update,false,null)){

ZipArchiveEntry entry = archive.GetEntry("Connections");

string newstring;

using (var sr = new StreamReader(entry.Open())){

var jsonText = sr.ReadToEnd();

newstring = UpdateServer("MyTestServer", jsonText);

}

using (var sw = new StreamWriter(entry.Open())){

sw.Write(newstring);

}

}

}

private string UpdateServer(string server,string jsonText){

var pattern = @"Data Source\s*=\s*\w+\b";

return Regex.Replace(jsonText, pattern, $"Data Source={server}");

}

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Did this work ? I get an error message saying corrupted file or file created from unsupported version of Poewr BI.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Pbix is just a zip package. Can you open the package and change the connection file in it. All this can be done programmatically.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @JorgeDiego

Today I am srufing the web with the same reuqirement you need in 2017.

Just repharasng the requirment again that, I have multiple clients with their own Servers and Databases but the report schema is same. Right now I am manually handeling this by creating Worksapces for each client on app.powerbi.com and creating a copy of report for each client, updating its Datasource on PowerBi Desktop and publishing it again on the client specific worksapce.

I want to automate this process using PowerShell Script.

So I want to Update the Datasource of a PBIX file and according to client and publish it on client specific worksapce on app.powerbi.com

Please help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, any luck finding a solution? I have the same requirement.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No i am still strugling 😞

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @JorgeDiego,

As you said, power bi not support to direct modified the datasource without open the pbix file, perhaps you can submit your requirement to ideas.

In addition, you can try to store your connection string to a config file, then write a custom function to analysis this file. After these steps, you can change the datasource without open the pbix file.(After you modified the config file, you should refresh it at pbix report to get the newest data)

Sample:

let

xmlTable = Xml.Tables(File.Contents("C:\Users\xxxxx\Desktop\test.xml")),

server=xmlTable[Server]{0},

database=xmlTable[Database]{0},

Source= Sql.Database(server,database)

in

Source

Xml:

<config> <Server>"abc"</Server> <Database>"edd"</Database> </config>

Screenshots:

Regards,

Xiaoxin Sheng

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do you know solution for Direct Query ? As i have Error "2 datasource is not allowed"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous,

Can this method be used as a way to share templates / content packs to connect to different sources ?

The history behind this question is here.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I was able to solve this problem using a paremeter in Power Query. The parameter is set to the users dropbox "home" local folder. Then, when I share the PBT file it prompts the user to enter their specific filepath before creating their user-specific pbix. This isn't quite automagical but it does the job.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you please elaborate the last part

Source= //invoke data connection method here

Bare with me please, bcoz i'm very new to this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It could be a good solution. It is not the best, but, currently, the solution I´m looking for doesn´t exist.

Many thanks.

Helpful resources

Join our Fabric User Panel

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

| User | Count |

|---|---|

| 63 | |

| 62 | |

| 42 | |

| 19 | |

| 16 |

| User | Count |

|---|---|

| 118 | |

| 106 | |

| 38 | |

| 28 | |

| 27 |