
Calculated Table causes performance issue and dataset refresh fails

Hi,

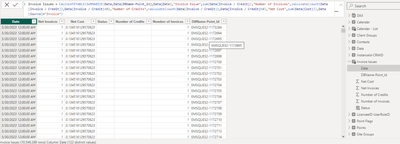

I have a calculated table called 'Invoice Issues' as below which is derived from the existing Data table:

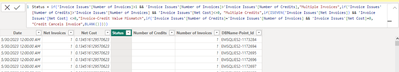

The main purpose of this table is to create a calculated column called 'Status', which is used in a visual:

Calculated column:

Visual:

This works perfectly and our customers like the report. However, it causes a lot of performance issues, and the dataset refresh fails in the workspace.

Is there a way to create this as a DAX measure instead? Any other alternative solution would be much appreciated.

Please find the file attached: PR-419 - Data Coverage RLS ADB (1).pbix

@amitchandak @Ahmedx @marcorusso @Greg_Deckler @Ashish_Mathur @Anonymous


When you create a calculated table, the entire uncompressed table must be materialized in memory and then compressed. This could be a memory-intensive operation that works on your PC and fails on Power BI Service.

You should reduce that table by removing unused columns and unnecessary rows - e.g. keep only the rows that have an issue.

Or compute the column outside of Power BI, so it's processed like other tables segment by segment, reducing the memory requirement at refresh time.
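As a rough illustration of the last two suggestions, here is a minimal Python sketch of computing a status upstream, one row at a time, and keeping only the issue rows and the columns the report needs. Everything in it is an assumption made for illustration: the column names `InvoiceId`, `Amount` and `Notes`, the `classify` rule, and the sample data are hypothetical and are not taken from the attached file. In practice the same idea would more likely be expressed in a dataflow or in the source query.

```python
import csv
import io
from typing import Dict, Iterable, Iterator


def classify(row: Dict[str, str]) -> str:
    """Hypothetical rule: an invoice has an issue when its amount is blank."""
    return "Issue" if not row["Amount"].strip() else "OK"


def issue_rows(rows: Iterable[Dict[str, str]]) -> Iterator[Dict[str, str]]:
    """Stream the rows, compute Status, and keep only issue rows and needed columns."""
    for row in rows:
        status = classify(row)
        if status == "Issue":
            # Drop columns the report does not use (here: Amount and Notes).
            yield {"InvoiceId": row["InvoiceId"], "Status": status}


# Hypothetical sample standing in for the source data table.
SAMPLE = "InvoiceId,Amount,Notes\n1,100,ok\n2,,missing\n3,  ,blank\n"

result = list(issue_rows(csv.DictReader(io.StringIO(SAMPLE))))
```

Because the rows are streamed through a generator, the full table is never held in memory at once, which mirrors the segment-by-segment processing described above. The table that reaches Power BI then contains only the issue rows and two columns.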


Hi,

Share a much smaller sample dataset with only the relevant tables. Explain your requirement and show the expected result.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/


Hi @marcorusso

Many thanks for your quick response!

Apologies for the delay in replying. We will follow this in our reports going forward.

I just want to confirm a few points:

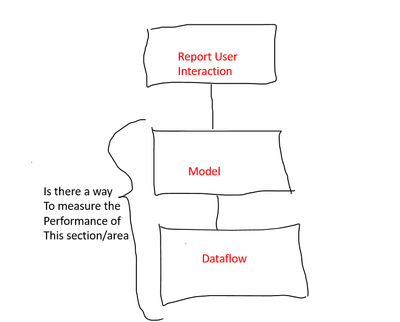

When you say "outside of Power BI", does that mean, in our case, the dataflow, where the transformations can be done?

When you say "keep only the rows that have an issue", does that mean applying date range parameters in Power Query?

Is there a way to measure the performance of the data model and the dataflow, similar to how DAX Studio is used to measure Power BI report performance?

Thanks in advance!

@amitchandak @Ahmedx @marcorusso @Greg_Deckler @Ashish_Mathur @v-cgao-msft @Daniel29195
