Dataflow refresh started failing today MashupException.Error

Hi!

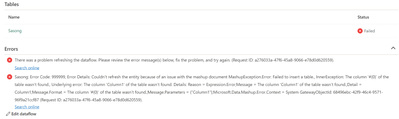

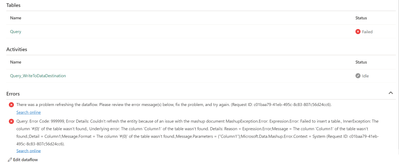

I've had about 90 dataflows set up for a few months now. Last night all but 2 of them failed with error MashupException.Error

The two that succeeded were very small tables with less than 100 rows of data.

I'm loading data from an on-prem SQL server, as well as an Excel-file from a Sharepoint folder (this one also failed).

On-prem-server:

Excel-file:

Has there been any change in Fabric in the last 36 hours?

best regards

Please reach out to our support team by raising a support ticket so an engineer can take a look at this.

Hi @JE_test

I'm following up to see if you've had a chance to submit a support ticket. If you have, please share the ticket number, as it would help us track the issue.

Thanks.

@v-cboorla-msft Do you have an answer to my question requesting clarification re whether or not there is a roundtrip to the on-prem GW?

I just noticed that one of my dataflows was still running (or parts of it; before clicking into it, it just said "failed"). It is trying to load 5 rows of data and has been running for 36 hours now... and there is no easy way to cancel it.

When will you implement cancellation of dataflows?

Hi @JE_test

Thanks for using Microsoft Fabric Community.

Apologies for the inconvenience that you are facing here.

When using Microsoft Fabric Dataflow Gen2 with an on-premises data gateway, you might encounter issues with the dataflow refresh process. The underlying problem occurs when the gateway is unable to connect to the dataflow staging Lakehouse in order to read the data before copying it to the desired data destination. This issue can occur regardless of the type of data destination being used.

For more details, please refer to: On-premises data gateway considerations for data destinations in Dataflow Gen2.

If you are using Microsoft Fabric through an on-premises data gateway, be sure to update to the latest version of the gateway. Some errors related to output destinations or queries might be resolved by upgrading the gateway. Upgrading also ensures that updates to Fabric features and fixes for known issues are propagated through the gateway.

For details, please refer to: Currently supported monthly updates to the on-premises data gateways.

We're adding support for cancelling ongoing Dataflow Gen2 refreshes from the workspace items view. For the timeline of the cancel feature, please refer to: Cancel refresh support in Dataflow Gen2.

I hope this information helps. Please do let us know if you have any further questions.

Thanks.

Hold on a sec... are you saying that the following is the path the data takes? This is the sentence that bothers me:

The underlying problem occurs when the gateway is unable to connect to the dataflow staging Lakehouse in order to read the data before copying it to the desired data destination.

1__on-prem DB-->GW-->DefaultStagingLakehouse

followed by

2__DefaultStagingLakehouse-->GW-->user lakehouse

Why is the data path instead not like this? Or is it?

1__on-prem DB-->GW-->DefaultStagingLakehouse

followed by

2__DefaultStagingLakehouse -->[ internal Fabric bulk copy mechanism ] --> user lakehouse

This is the current setup:

1__on-prem DB-->GW-->DefaultStagingLakehouse

followed by

2__DefaultStagingLakehouse-->Notebook-->user lakehouse

followed by

3__user lakehouse-->(Stored Procedure)-->user Warehouse (this is where the transformations happen, from one schema to the next, using SPs)

But today I looked at my data in my warehouse and it seems I have new data there, even though the status of my Gateways says failed for the last 3 days. Very interesting/weird...

I agree. Gremlins everywhere in the MS backend codebase, it seems. 😁

Good to know the architecture of your pipeline, but the question was directed @v-cboorla-msft because s/he wrote:

The underlying problem occurs when the gateway is unable to connect to the dataflow staging Lakehouse in order to read the data before copying it to the desired data destination.

That sentence, by its use of pronouns, means the data GW is trying to write to a Fabric destination after pulling data from the staging LH. In other words, it says this:

GW-->staging LH-->back to GW-->any type of destination , so a roundtrip to the on-prem data GW

which I find very very odd to do.

I thought the more logical and efficient path, after having loaded the data from the on-prem DB via the GW would be as follows:

GW-->staging LH-->pipeline or dataflow (no more going back to the on-prem GW)-->destination (LH or DW)

If the latter, then @v-cboorla-msft's explanation makes no sense, no offense Boorla. 😉

GW-->staging LH-->back to GW-->any type of destination , so a roundtrip to the on-prem data GW

Yes, this roundtrip is very weird. It seems that this second trip through the GW is not working with our current firewall setup on the on-prem server, but the first load used to (and might still?) work fine.

TBH I'm not convinced there's a roundtrip... waiting for @v-cboorla-msft or someone else at Microsoft to confirm one way or the other.

When staging is enabled and On Prem Data Gateway is used, the data will flow as such:

1. [Data Source] ---OPDG---> [Staging Lakehouse]

2. [Staging Lakehouse] --- OPDG ---> [Final Output]

It may not be the most optimal path, hence if you do not need staging of entities, please turn it off. Without staging, the path should simply be

[Data Source] ---OPDG---> [Output]
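To make the two paths concrete, here is a minimal Python sketch that lists the hops described above and the endpoints the gateway has to reach in each case. The names `dataflow_hops` and `gateway_targets` are made up for illustration and are not part of any Fabric API; the hop labels are copied from the description above.

```python
from typing import List, Tuple

Hop = Tuple[str, str, str]  # (from, via, to)

def dataflow_hops(staging_enabled: bool) -> List[Hop]:
    """Return the hops as described in this thread (illustrative only)."""
    if staging_enabled:
        return [
            ("Data Source", "OPDG", "Staging Lakehouse"),
            ("Staging Lakehouse", "OPDG", "Final Output"),
        ]
    return [("Data Source", "OPDG", "Output")]

def gateway_targets(staging_enabled: bool) -> List[str]:
    """Every endpoint the gateway reads from or writes to, in first-seen order."""
    seen: List[str] = []
    for src, via, dst in dataflow_hops(staging_enabled):
        if via != "OPDG":
            continue  # hop does not pass through the gateway
        for endpoint in (src, dst):
            if endpoint not in seen:
                seen.append(endpoint)
    return seen
```

With staging enabled, `gateway_targets(True)` lists three endpoints, so a firewall rule that only lets the gateway reach the source and the staging Lakehouse would presumably not be enough for the second hop.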

@pqian_MSFT Thanks. Agreed. However, here's a question for you:

I am forced to use this Microsoft suggested workaround because atm using one DFg2 to extract data from an on-prem DB does not work (well, at least for us it doesn't--case# 2401190040000098). However, using the chained DFs workaround does work.

So my question is: When will this [Data Source] ---OPDG---> [Output] become available for on-prem datasources?

Hmm, I'm not sure what it is that prevents it from working on your GW. It should work today.

I do not have access to support cases until they involve me. Can you share a bit more detail on what prevents a direct load to the output? (You can reply here or DM me.)

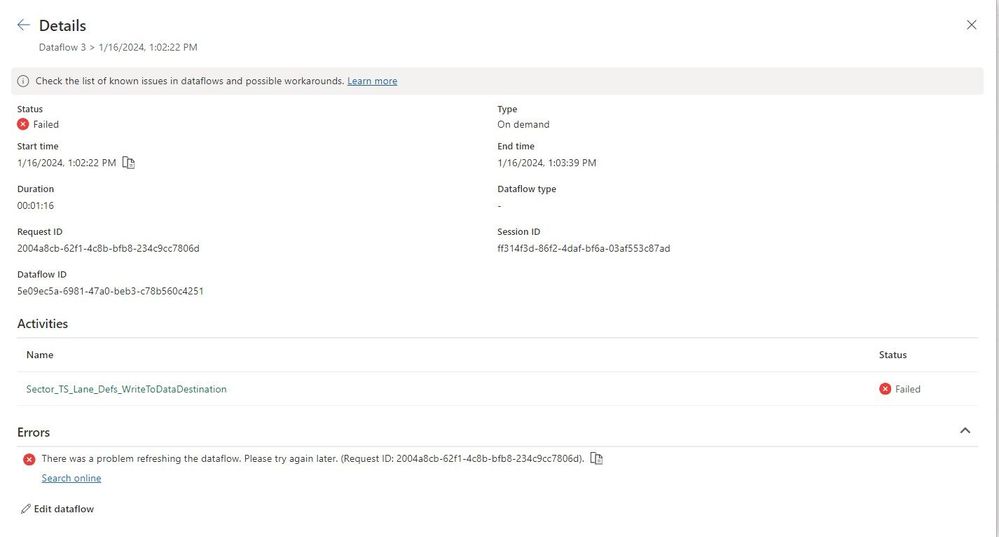

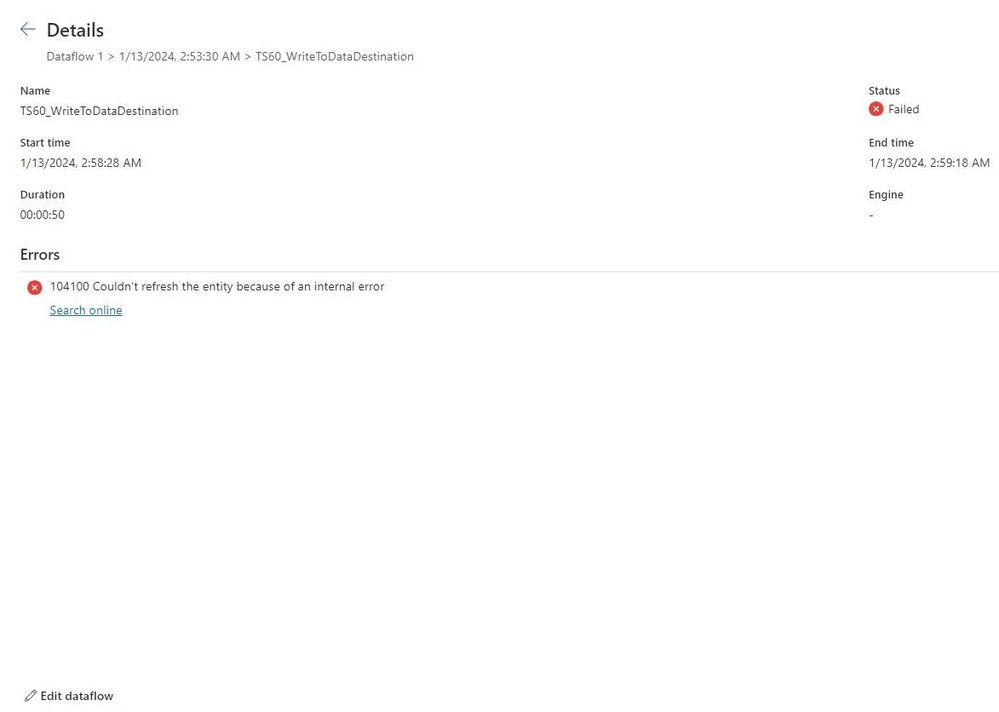

@pqian_MSFT If I only use one DF, what ends up happening is that after the publish succeeds, the refresh fails with this error:

OR this one or some variation thereof.

Could it have something to do with the version of SQL Server (we use an older version)?

You can click this

to go into more details, hopefully it has the error text.

A refresh from Jan is too old for us to look into anyway (we only maintain logs for 28 days). If you can still reproduce this error, please do the following:

1. Turn on Additional Logging on the Gateway to obtain Mashup logs, then refresh and produce this error, then export the logs

2. Send me the logs and request ID of the failure.

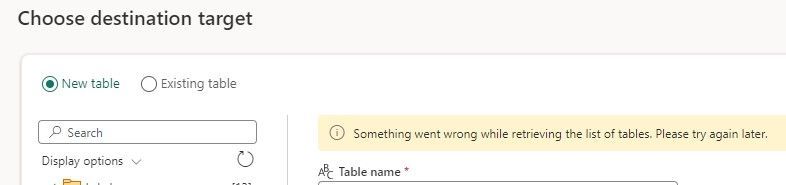

@pqian_MSFT What happens now, all the previous errors do not occur because I am now stopped in the 'Choose destination target' dialog with the following error:

To be clear:

0__create DF

1__connect to on-prem source (data comes in, can see table)

2__disable staging on the query

3__in Data destination, click + icon and select Lakehouse

4__in 'Choose destination target' dialog, select the lakehouse and click next (validation ensues for a couple of minutes)

5__error message above.

Do you still want me to proceed with getting the GW Mashup logs or is this enough info to start a different exploratory path?

Yes please, turning on GW logging is critical to see the real issue. Also, for this particular error, the correlation ID is the "session ID" from the Options -> Diagnostics dialog.

Just sent you the Session ID from my last try in a PM. Our sysadmin is busy atm, but will get to the logs today... I will need an upload link though, as the PM editor has no attach-file button.

Also, I should add that the cases of "104100 Couldn't refresh an entity because of an internal error" were all caused by the GW server being unable to establish a connection to the SQL endpoint of the destination LH.

We'll need GW logs to confirm that, but you can quickly try this on the GW server too: make a TCP connection to the LH SQL endpoint, port 1433, and see if that's successful. If not, then you are inside some kind of firewall that has allowlisting only for staging lakehouse and not for the output.
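The quick TCP check could be sketched in Python as below, to be run on the GW server. This is only an illustration of the test described above, not an official diagnostic: the function name `can_connect` is hypothetical, and the host in the usage comment is a placeholder you would replace with the SQL endpoint of your own destination LH.

```python
import socket

def can_connect(host: str, port: int = 1433, timeout: float = 5.0) -> bool:
    """Return True if a plain TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False

# Usage (placeholder host, an assumption; substitute your LH SQL endpoint):
# print(can_connect("<your-lakehouse-sql-endpoint>"))
```

A `False` result only shows that the TCP connection could not be opened from that machine. It does not say whether a firewall, DNS, or something else is the cause, so the GW logs are still needed to confirm.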
