

Need a data validation step using a notebook activity

Hi Team,

There is an incremental load scenario, and it is working fine.

Input source: ADLS. Every day one file arrives in ADLS, and I append it to a table in the Lakehouse.

So on the 1st day I have one file with 3 records.

On the 2nd day another file arrives in ADLS, again with 3 records, so there are 6 records in total in ADLS at the end of the 2nd day.

I need the same number of records in the Lakehouse target table at the end of the 2nd day.

So I need to count the records in the source and in the sink. I can do this with a Lookup activity, but I need this data validation in a Notebook activity, and I can write the PySpark query as well.
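A minimal PySpark sketch of the count check, assuming the daily files are CSV files with a header row that all land in one ADLS folder, and that the target is a Lakehouse Delta table. The folder path, table name, and function names are hypothetical. Loading the folder rather than a single file counts every file landed so far, which matches the running total above (3 records after day 1, 6 after day 2).

```python
from typing import Dict, Tuple


def count_source_and_sink(spark, source_folder: str, table_name: str) -> Tuple[int, int]:
    """Count rows in every file under source_folder and in the Lakehouse table.

    Assumes CSV files with a header row; adjust format/options for Parquet, JSON, etc.
    """
    source_df = (
        spark.read.format("csv")
        .option("header", "true")  # each file's header line is not counted as a record
        .load(source_folder)       # loading a folder reads all files in it
    )
    sink_df = spark.read.table(table_name)  # Lakehouse (Delta) table
    return source_df.count(), sink_df.count()


def compare_counts(source_count: int, sink_count: int) -> Dict[str, object]:
    """Summarize the check so it can be printed or passed back to the pipeline."""
    return {
        "source_count": source_count,
        "sink_count": sink_count,
        "matched": source_count == sink_count,
    }


# In a Fabric notebook `spark` is predefined; the path and table name are hypothetical:
# src, snk = count_source_and_sink(
#     spark,
#     "abfss://<container>@<account>.dfs.core.windows.net/landing/",
#     "sales_incremental",
# )
# print(compare_counts(src, snk))
```

Keeping the Spark reads separate from the comparison makes the comparison easy to test on its own.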


Hello @sudhav

Thanks for using the Fabric community.

As I understand it, the ask is how to run validation logic from a notebook and send an email.

Please correct me if my understanding is not right.

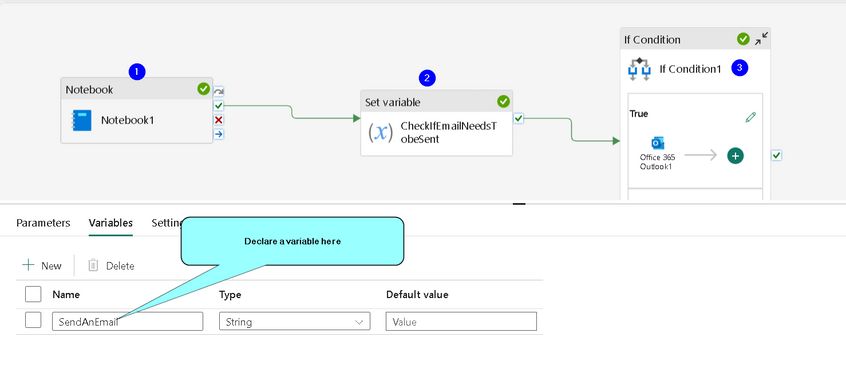

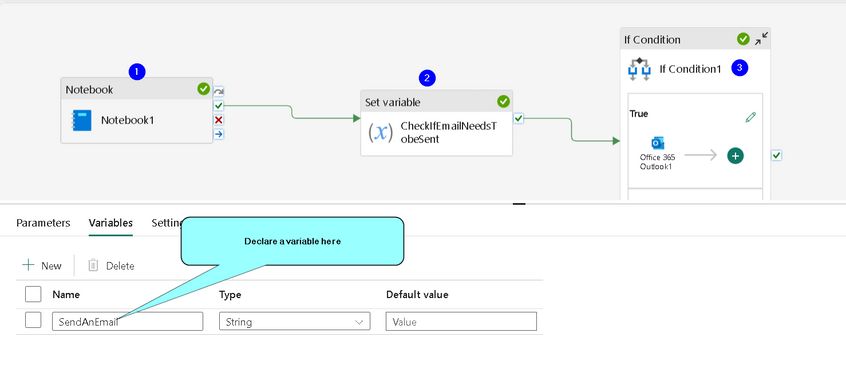

Step 1

We can achieve this by adding `mssparkutils.notebook.exit()` to the notebook.
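`mssparkutils.notebook.exit()` ends the notebook run and hands a string value back to the calling pipeline. A minimal sketch of the final notebook cell, assuming the source and sink counts were computed earlier in the notebook and that a small JSON string is a convenient format to pass back; the function name and JSON fields are hypothetical.

```python
import json


def build_exit_value(source_count: int, sink_count: int) -> str:
    """Serialize the validation result; notebook.exit() passes a string to the pipeline."""
    return json.dumps({
        "status": "Succeeded" if source_count == sink_count else "Failed",
        "source_count": source_count,
        "sink_count": sink_count,
    })


# Final cell of the Fabric notebook (mssparkutils is available in the notebook session).
# Cells after exit() are skipped, so keep this call last:
# mssparkutils.notebook.exit(build_exit_value(source_count, sink_count))
```

Returning JSON rather than a bare number lets the pipeline log the counts alongside the pass/fail status.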

Step 2

When you run the notebook from a Notebook activity, add a Set Variable activity and read the exit value.

The expression should be something like this:

Step 3

Add an If activity and use the expression below:
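Steps 2 and 3 are configured in the pipeline rather than written in Python. As an assumption, with a Notebook activity named `Notebook1` and a String variable named `exitcode` (both hypothetical), the Set Variable value would be something like `@activity('Notebook1').output.result.exitValue`, and the If condition something like `@equals(json(variables('exitcode')).status, 'Succeeded')`, with the email sent from the False branch. The Python below mirrors that condition so the exit-value format can be checked before wiring it into the pipeline; it assumes the notebook exits with a JSON string that has a `status` field.

```python
import json
from typing import Optional


def should_send_email(exit_value: Optional[str]) -> bool:
    """Local mirror of the If condition; True means take the branch that sends the email.

    A missing or malformed exit value is treated as a failure so that it is not
    silently ignored.
    """
    try:
        result = json.loads(exit_value)
    except (TypeError, json.JSONDecodeError):
        return True  # None or non-JSON text
    if not isinstance(result, dict):
        return True  # valid JSON, but not the expected object
    return result.get("status") != "Succeeded"
```

For example, `should_send_email('{"status": "Failed", "source_count": 6, "sink_count": 3}')` returns `True`.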

Hope this helps.

Thanks

Himanshu


Great, thank you Himanshu. I thought no one would answer such a lengthy question, but you proved me wrong, and it's working as expected. Thank you!
