
How to remove duplicate rows based on condition

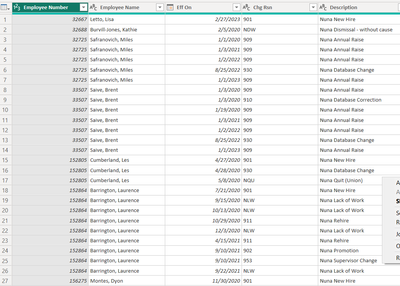

Hi all,

I have an employee history table and noticed some duplicate rows. How can I remove the duplicate rows based on these conditions:

1. The same Employee Number

2. Chg Rsn = "901" (901 means a newly hired employee, and the new-hire row must be unique for each employee)

Thank you in advance!

Bei
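Before removing anything, it can help to list which employees actually break the rule (more than one Chg Rsn 901 row for the same Employee Number). The snippet below is a minimal Python sketch of that check, not Power Query. It assumes each row is a dict using the `Empid` and `code` column names from the query in the answer below, and the sample rows are hypothetical because the real table's values are not shown in the post.

```python
from collections import Counter

NEW_HIRE = 901  # change-reason code for a new hire, per the question


def duplicate_new_hires(rows):
    """Return the sorted Empids that have more than one new-hire (901) row."""
    counts = Counter(r["Empid"] for r in rows if r["code"] == NEW_HIRE)
    return sorted(emp for emp, n in counts.items() if n > 1)


# Hypothetical sample rows; codes 200 and 300 are invented non-hire reasons
history = [
    {"Empid": 1, "code": 901},
    {"Empid": 1, "code": 200},
    {"Empid": 2, "code": 901},
    {"Empid": 2, "code": 901},  # second new-hire row for employee 2
    {"Empid": 2, "code": 300},
]

print(duplicate_new_hires(history))  # [2]
```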


Hi,

Here is one way to do this:

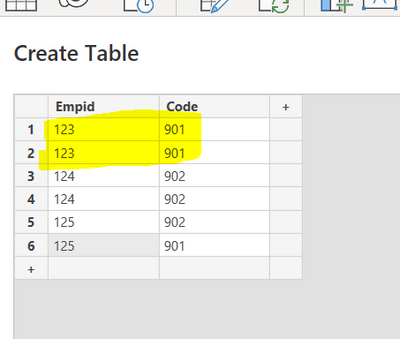

Example (we will remove one of the rows in yellow):

Here is the Power Query (M) code used:

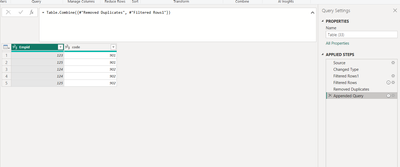

```
let
    Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjQyVtJRsjQwVIrVweSZgHlGWHmmWHhAfbEA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Empid = _t, code = _t]),
    #"Changed Type" = Table.TransformColumnTypes(Source,{{"Empid", Int64.Type}, {"code", Int64.Type}}),
    // this table contains every row whose code is not 901
    #"Filtered Rows1" = Table.SelectRows(#"Changed Type", each [code] <> 901),
    // this table contains only the 901 (new hire) rows
    #"Filtered Rows" = Table.SelectRows(#"Changed Type", each [code] = 901),
    // this table keeps one 901 row per Empid, so the new hires are now unique
    #"Removed Duplicates" = Table.Distinct(#"Filtered Rows", {"Empid"}),
    // here we combine the two tables to get the desired result
    #"Appended Query" = Table.Combine({#"Removed Duplicates", #"Filtered Rows1"})
in
    #"Appended Query"
```

End result:

As we can see, the unwanted duplicate row has now been removed.
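The query splits the table into non-901 rows and 901 rows, de-duplicates the 901 rows on `Empid`, and appends the two parts. The Python sketch below mirrors those steps outside Power Query so the logic can be checked on a few rows. It assumes rows are dicts with the query's `Empid` and `code` columns, the sample values are hypothetical, and it keeps the first 901 row per employee (an assumption about which duplicate to keep).

```python
NEW_HIRE = 901  # change-reason code for a new hire


def remove_duplicate_new_hires(rows):
    """Split, de-duplicate the 901 rows per Empid, then append (mirrors the M steps)."""
    # Like "Filtered Rows1": every row whose code is not 901
    others = [r for r in rows if r["code"] != NEW_HIRE]
    # Like "Filtered Rows": only the 901 rows
    new_hires = [r for r in rows if r["code"] == NEW_HIRE]
    # Like "Removed Duplicates": one 901 row per Empid (this sketch keeps the first)
    seen = set()
    unique_new_hires = []
    for r in new_hires:
        if r["Empid"] not in seen:
            seen.add(r["Empid"])
            unique_new_hires.append(r)
    # Like "Appended Query": unique new-hire rows first, then all other rows
    return unique_new_hires + others


# Hypothetical sample rows (the real table's values are not shown in the post)
history = [
    {"Empid": 1, "code": 901},
    {"Empid": 1, "code": 200},
    {"Empid": 2, "code": 901},
    {"Empid": 2, "code": 901},  # second new-hire row for employee 2
    {"Empid": 2, "code": 300},
]

result = remove_duplicate_new_hires(history)
print(result)
# [{'Empid': 1, 'code': 901}, {'Empid': 2, 'code': 901}, {'Empid': 1, 'code': 200}, {'Empid': 2, 'code': 300}]
```

Because the two parts are appended, all new-hire rows come before the other rows in the output, so the original row order is not preserved; sort afterwards if order matters.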

I hope this post helps solve your issue. If it does, consider accepting it as a solution and giving the post a thumbs up!

My LinkedIn: https://www.linkedin.com/in/n%C3%A4ttiahov-00001/

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!


This solution is genius! How did you come up with it?


Hi,

@Anonymous

I created a flowchart of the categories involved. In the end, the problem is that we are trying to eliminate rows that meet certain conditions. Working in reverse, if we keep every row except the ones we want to eliminate, we get the desired outcome.
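The "keep every row except the ones to eliminate" idea can also be written as a single pass over the table, which has the side benefit of preserving the original row order. This is a different formulation from the split-and-append query, sketched in Python rather than M. It assumes rows are dicts with the query's `Empid` and `code` columns, treats any 901 row after the first one for the same `Empid` as the row to drop, and uses hypothetical sample values.

```python
NEW_HIRE = 901  # change-reason code for a new hire


def drop_repeat_new_hires(rows):
    """Keep every row except a repeated 901 row for an Empid already seen as a new hire."""
    hired = set()  # Empids whose first 901 row has already been kept
    kept = []
    for r in rows:
        if r["code"] == NEW_HIRE:
            if r["Empid"] in hired:
                continue  # one of the rows we want to eliminate
            hired.add(r["Empid"])
        kept.append(r)
    return kept


# Hypothetical sample rows (the real table's values are not shown in the post)
history = [
    {"Empid": 1, "code": 901},
    {"Empid": 1, "code": 200},
    {"Empid": 2, "code": 901},
    {"Empid": 2, "code": 901},  # second new-hire row for employee 2
    {"Empid": 2, "code": 300},
]

print(drop_repeat_new_hires(history))
# [{'Empid': 1, 'code': 901}, {'Empid': 1, 'code': 200}, {'Empid': 2, 'code': 901}, {'Empid': 2, 'code': 300}]
```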
