FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- DAX Commands and Tips

- Re: DAX Head Scratcher

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

DAX Head Scratcher

Looking for some insight into why a DAX calculation coded in a particular way takes absolutely forever to return while a slightly different version returns quickly. I have the sample PBIX included below sig. The PBIX includes two versions of the same model, one that "works" and one that "doesn't" (in terms of speed of execution).

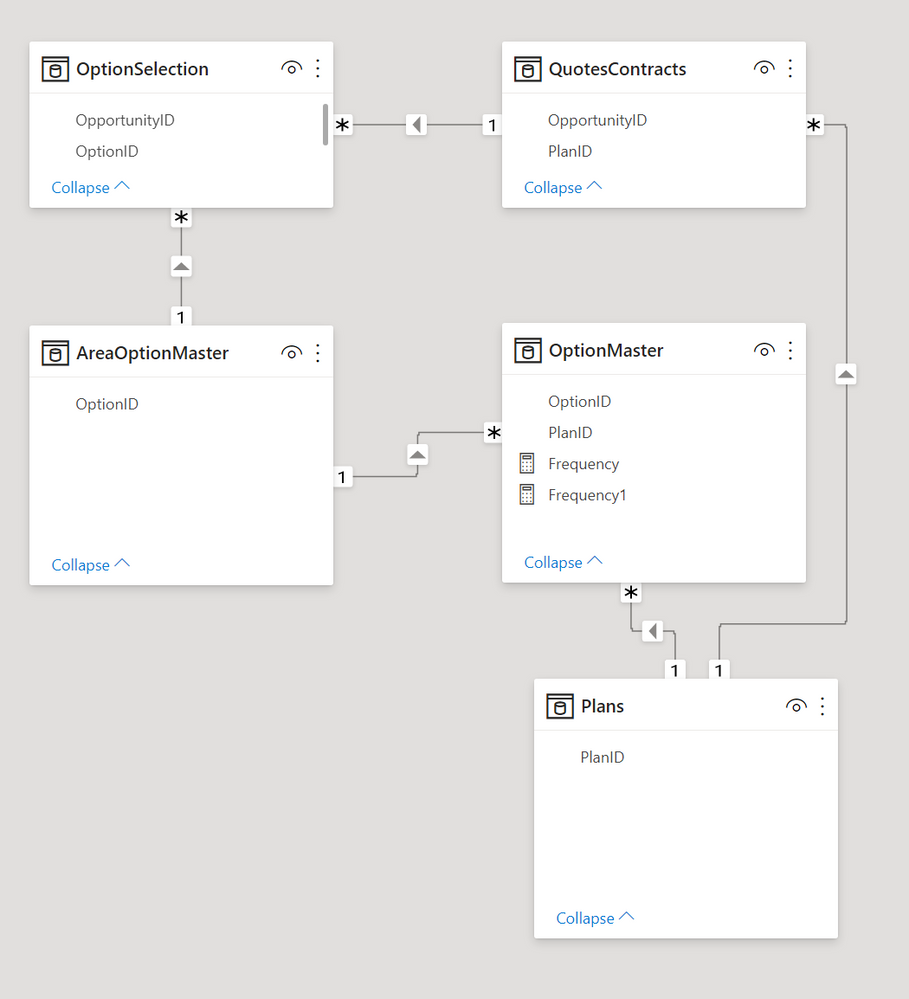

The data model is like this:

Plans 1-<>-* OptionMaster *-<>-1 AreaOptionMaster 1->-* OptionSelection *-<-1 QuotesContracts

Think of it this way, you have these widgets and each of these widgets has a blueprint (Plans). For each of these plans, you can add options. The same option might be applicable to multiple plans. AreaOptionMaster has your unique list of options (optionid). OptionSelection has the sales information for which options were purchased for different sales opportunities. The QuotesContracts includes your sales contract information, which includes which Plan was purchased.

OK, so the goal is to retrieve the frequency of purchases for a particular Option and Plan. So, we create a Table visual with OptionID and PlanID from the OptionMaster table and then we construct a measure like this:

Frequency =

VAR __PlanID = MAX('OptionMaster'[PlanID])

RETURN

CALCULATE(SUM(OptionSelection[Quantity]), QuotesContracts[PlanID] = __PlanID)

This works and is fast. However, let's say that you need something like the plan description from the Plans table in this visual. The minute you add this, the above measure suddently takes FOREVER to return if it doesn't run out of memory.

So, PBIX includes more details but the "fix" I came up with which is implemented in the duplicate data model in the PBIX with the tables prefixed by 1 is two fold:

- Change the relationship between QuotesContracts and OptionSelection to Both

- Change the measure to this:

1Frequency1 =

VAR __PlanID = MAX('1OptionMaster'[PlanID])

RETURN

CALCULATE(SUM('1OptionSelection'[Quantity]), FILTER('1QuotesContracts',[PlanID] = __PlanID))

So, for some odd reason, adding FILTER instead of using a straight filter clause makes a world of difference, which doesn't exactly make sense to me. Now, it's no secret that I am no fan of CALCULATE. However, I'm not exactly ready to throw CALCULATE under the bus for this one and was wondering if someone with a deep understanding of DAX could help me riddle out what is going on here. First, it doesn't make sense to me that adding a column from the Plans table would cause DAX to have conniption fits and then it doesn't make sense that an appendage table bi-directional relationship and adding FILTER would fix it.

I hate to bug you but @marcorusso, you are the best person that I can think of that could answer this connundrum.

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I can easily say that:

- The FILTER is faster with bi-di because it filters a table starting from the current filter context, which is affected by the filters applied on other tables (restricting the number of combinations to evaluate)

- The bi-di applied to all the other relationships have side effects, pushing a lot of calculation to the formula engine

What I cannot say is what a better solution is, because I don't know the business goal and I am not able to evaluate the correctness of the data model.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@marcorusso @AlexisOlson Yeah, I don't really have a great deal of control over this particular data model, I was kind of handed what I was handed and asked to make it work. Obviously data model changes can always help things out but I was curious about the DAX for one main reason. From what I have generally read, a lot of the blog articles and other guidance out there basically state "don't use FILTER with CALCULATE". One of the main reasons being performance since FILTER tends to create an extra table, yadda yadda. For example: https://stackoverflow.com/questions/50506030/dax-calculate-function-with-and-without-filter

However, in this case, FILTER has the exact opposite effect, it greatly improves the speed of the measure instead of slowing it down. This is further intruiging to me because it doesn't make sense to me as to why. In theory, they should both be doing the same thing. Maybe I am thinking about this wrong, but my understanding of using FILTER in CALCULATE is that using FILTER adds to the existing filter context. Not using FILTER in CALCULATE has two outcomes:

- If the columns (or tables) aren't in the filter context, then new filters will be added to the filter context to evaluate the expression.

- If the columns (or tables) are already in the filter context, the existing filters will be overwritten by the new filters to evaluate the CALCULATE expression.

So, in my way of thinking, within that visual, QuotesContracts[PlanID] is not in the current filter context so both versions of the measure should be doing the same thing, simply adding filter context.

I'm going to try some more experimentation around this so appreciate the thoughts and inputs!!

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A filter in CALCULATE is always a table, conceptually.

Filter Arguments in CALCULATE - SQLBI

The filter context is a set of filters, where each filter is a table.

A filter can be on one, two, or more columns, it can also correspond to an entire table of the data model. For this reason, I use the name "filter" instead of "table filter", because a filter is always a table, just not necessarily the same table you have in the model.

When you apply a filter to a filter context, it overrides existing filters over the same column(s), unless you use KEEPFILTERS.

That's it.

That's really nothing else, even though there are many consequences for that.

Now, why do you see a performance issue? Because the syntax you wrote using FILTER ( XYZ, ... ) requires a different evaluation of the table XYZ in each cell of the result unless you have something that makes the expression (the FILTER expression) identical across all the cells of the result. For example, FILTER ( ALL ( XYZ ), ... ) will never change the iterator regardless of the filter context of the cell where you evaluate the measure, even though you might still have depending expressions in the second argument (it depends on the expression).

I hope this clarifies why you are seeing the performance difference.

To me, it's very clear. My problem with the performance of the formula is why you have to write such a condition and what are the requirements and the assumptions we can make to rewrite the formula (and/or the model) in a way that simplifies the work requested to the formula engine.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@marcorusso Well, the phrase clear as mud comes to mind! 🙂

What is truly vexing to me is that both of the formulas result in the EXACT same DAX query from what I can tell yet one takes 100 times longer to return:

// DAX Query

DEFINE

VAR __DS0Core =

SUMMARIZECOLUMNS(

ROLLUPADDISSUBTOTAL(

ROLLUPGROUP('1OptionMaster'[OptionID], '1OptionMaster'[PlanID], '1Plans'[PlanID]), "IsGrandTotalRowTotal"

),

"v1Frequency", '1OptionMaster'[1Frequency]

)

VAR __DS0PrimaryWindowed =

TOPN(

502,

__DS0Core,

[IsGrandTotalRowTotal],

0,

'1OptionMaster'[OptionID],

1,

'1OptionMaster'[PlanID],

1,

'1Plans'[PlanID],

1

)

EVALUATE

__DS0PrimaryWindowed

ORDER BY

[IsGrandTotalRowTotal] DESC,

'1OptionMaster'[OptionID],

'1OptionMaster'[PlanID],

'1Plans'[PlanID]

If it is the same query, then...? If nothing else, this seems to expose a deficiency in the Performance Analyzer in that it is not capturing everything that is truly going on within DAX calculations?

My understanding of the data model is that this predicament comes about thusly:

- There is option data about plans with costs, prices, GM, etc. This is the OptionMaster table and is a fact table with options and plan dimension tables (AreaOptionMaster and Plans). This provides the GM for the options but is essentially "current". As in, there is no date with a history of costs/prices/etc. The same option can be sold across many different plans.

- However, they also want to see how often those options are sold. This comes from another fact table that has the option id in it as well as an "opportunity" ID that ties it back to the original opportunity. This is a fact table of option sales over time called OptionSelection. Furthermore, this "frequency" can come in different forms, a global frequency, how many times sold period, a "local freqency", how many times sold across a particular area and other types of frequency such as how many times sold for a particular plan across all areas. This fact table has a single dimension table, QuotesContracts that has additional information about the sale and includes the Plan ID that was sold.

So, at the end of the day, there are two fact tables involved and the customer wants to see information from both of them. I don't see a way to combine the two fact tables into a single fact table as they are at extremely different granularities, one historical sales over time and the other is just a list of options, plans and their current costs and prices. Perhaps we could combine the OptionSelection and QuotesContracts tables and then form a direct relationship between the fact tables using a combined key (option id & "|" & plan id) but, please remember, this is an extremely simplified view of the data model. There are actually about 20 tables involved in the data model and at least two or three additional fact tables (there is a fact table for plan costs and material costs for example). Think of it this way, plans and options are made up of "items" (raw materials) that have their own cost and thus a plan and an option cost are essentially a "roll-up" of these material costs. There are additional tables in the model that define for an option and a plan all of the materials that make up these plans and options. Then there is a budget fact table as well that details out the budget for the work required to manufacture the particular plan and option. Then, of course, consider that different vendors may provide the materials for the option and plan at different costs so that is also in the model.

There is actually a ton more complexity to this than I am conveying here but hopefully this provides some idea. That's why I wanted to focus this on the DAX and what they heck it is doing exactly versus the data model because it's just not so simple as to "fix" the data model when it is complex and working for literally everything else except this particular calculation.

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What's wrong with the following model?

If you use this model (removing all the bidirectional filters) and you filter/slice by using only the dimensions (Plans[PlanID], AreaOptionMaster[OptionID], and QuotesContracts[OpportunityID if you need]) you get super-fast results from both fact tables - without using any DAX measure, just using simple implicit measures.

But maybe I'm missing something.

The DAX code you wrote differs for the different evaluations of the filter in the measure because of one additional bidirectional filter. It is expected, my point is that I don't understand why the code is written the way it is written and the model is designed the way it is designed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@marcorusso For the simplified model, this likely works just fine, but the model isn't anywhere near this simple actually. Technically, there is no actual Plans table as shown in the model that directly links to OptionMaster. It gets complex because plans actually have and show up at two additional distinctions, we will call them area and "attribute". So the Plans table currently actually has a plan ID show up multiple times in the table for each area and for each "attribute" within that area. Again, I am sure that data model improvements and optimizations can help resolve this issue. Perhaps adding a table where the Plans table is truly unique. Althought, if this was done, it would result in duplicate paths between this new Plans dimension table and OptionSelection table, one through QuotesContracts and one through OptionMaster -> AreaOptionMaster.

However, in many ways all of that is beside the point. There is nothing that explains the difference in performance of the two measures operating against the same data model, which has the bi-directional filter:

- 1Frequency = Ues straight filter clause in CALCULATE (no FILTER function) 100 times slower

- 1Frequency1 = Uses FILTER in filter clause of CALCULATE is 100 times faster than 1Frequency

Both of these functions are in the model where the bi-directional cross-filter direction exists. Both result in the exact same DAX query. One is 100 times slower than the other. So, the question is why? What is the Performance Analyzer not telling us about what is really happening? Is the DAX query shown in the Performance Analyzer just the DAX query against the model engine but does not cover what is going on in the formula engine? If that is the case, what exactly is the difference about what is going on in the formula engine between those two calculations? There is an aspect of this of solving the problem of getting a fast calculation, sure. But, the question I really want to know about is what was just stated. Why the difference in speeds? What is CALCULATE without the FILTER doing that is different than CALCULATE with the FILTER? Seems like there is some fundamental difference here but it is not exposed in the DAX queries because those are identical. It's OK if the anwer is that it is just a black box and there is no way of knowing or "well, nobody can really explain or understand how CALCULATE works, kind of like a microwave oven or non-dairy creamer". It's just one of those things I am curious to understand in terms of increasing my understanding of DAX.

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

But the two expressions are not identical.

CALCULATE (

SUM ( OptionSelection[Quantity] ),

QuotesContracts[PlanID] = __PlanID

)

is equivalent to:

CALCULATE (

SUM ( OptionSelection[Quantity] ),

FILTER ( ALL ( QuotesContracts[PlanID] ), QuotesContracts[PlanID] = __PlanID )

)

which differs from

CALCULATE (

SUM ( '1OptionSelection'[Quantity] ),

FILTER ( '1QuotesContracts', [PlanID] = __PlanID )

)

in its inclusion of the ALL statement.

As in the example I gave in my previous post, it seems that, when employed in conjunction with cross-filtering from a related table, the evaluated tables resulting from the two seemingly similar expressions may not be of the same dimension (cf my previous example, in which the 'straight' CALCULATE version - that which incorporates an implicit ALL function - evaluates to a table comprising significantly more rows than the non-ALL, FILTER version).

Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Greg_Deckler The answer provided by @Jos_Woolley is the same one I would have wrote.

In one case this filter is executed only once for every cell of the result that have the same result for the __PlanID variable (which is a cartesian product between columns that have millions of combinations):

FILTER ( ALL ( QuotesContracts[PlanID] ), QuotesContracts[PlanID] = __PlanID )The second filter must evaluate the first argument for each of the millions of combinations I mentioned before, because the filter context is potentially different in each cell:

FILTER ( '1QuotesContracts', [PlanID] = __PlanID )The query you see in Performance Analyzer is identical, but you should see a different query plan in DAX Studio.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For the sake of not hijacking this thread further (although I still have a feeling that my investigations relate to your issue, Greg), I have reproduced a simplified version of my model and posted it with my query here.

I'd be extremely grateful if anyone could take a look at it - most likely I'm missing something very obvious which you experts will see immediately.

Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@marcorusso @Jos_Woolley I greatly appreciate the assistance here in understanding what is going on. I hate to ask for one additional favor, I am having trouble in DAX studio simulating my table visualization in order to get the Query plan. I thought that this would do the trick:

EVALUATE

ADDCOLUMNS('1OptionMaster',"__PlanID",MAX('1Plans'[PlanID]),"__Calc",[1Frequency1])However, I am not getting the same results in DAX Studio that I get in my table visualization. This is likely me just not understanding how to do what I want in DAX Studio. I'd appreciate any guidance I could get on how to emulate the table visualization in DAX Studio so that I can see what is really going on behind the scenes with this.

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Greg_Deckler you should execute in DAX Studio the same query you capture with the Performance Analyzer in Power BI.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@marcorusso Duh. Thanks. I'm going to write a blog article on this and give a big shout-out to DAX Studio and yourself!

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Jos_Woolley OK, but where is that captured in the actual DAX query from Performance Analyzer? In both cases the query is the following and I don't see an ALL anywhere:

// DAX Query

DEFINE

VAR __DS0Core =

SUMMARIZECOLUMNS(

ROLLUPADDISSUBTOTAL(

ROLLUPGROUP('1OptionMaster'[OptionID], '1OptionMaster'[PlanID], '1Plans'[PlanID]), "IsGrandTotalRowTotal"

),

"v1Frequency", '1OptionMaster'[1Frequency]

)

VAR __DS0PrimaryWindowed =

TOPN(

502,

__DS0Core,

[IsGrandTotalRowTotal],

0,

'1OptionMaster'[OptionID],

1,

'1OptionMaster'[PlanID],

1,

'1Plans'[PlanID],

1

)

EVALUATE

__DS0PrimaryWindowed

ORDER BY

[IsGrandTotalRowTotal] DESC,

'1OptionMaster'[OptionID],

'1OptionMaster'[PlanID],

'1Plans'[PlanID]Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The difference is in the measure definition in this line

"v1Frequency", '1OptionMaster'[1Frequency]

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apologies for jumping in on (even hijacking) this thread with my limited knowledge, but it appears that the issue here is related to a problem I was investigating recently.

If so, the issue appears to be related to the well-known fact that CALCULATE implicitly applies an ALL function to the column(s) being passed as the filter parameter(s). What I don't understand is how this is affected when a column from a related table is added to the filter context (e.g. by adding it to the Fields of the visual in question).

Take this simple example, which has two tables with a 1-to-Many relationship between the Genre and Films tables, based on GenreID.

I have also added in the Genre column to the Films table for demonstration purposes.

If you create a simple table visual with Title and Genre (both from the Films table), then both of these measures:

Box Office Dollars (CALCULATE) =

CALCULATE ( SUM ( Films[BoxOfficeDollars] ), Films[Title] > "C" )

and

Box Office Dollars (FILTER) =

CALCULATE (

SUM ( Films[BoxOfficeDollars] ),

FILTER ( Films, Films[Title] > "C" )

)

return identical tables, with no duplication in the Genre column:

However, if we remove the Genre column and instead use the Genre column from the Genre table, then the table visual returned using Box Office Dollars (FILTER) is perfectly correct:

However, that returned using

You can reproduce this behaviour using other functions which employ either implict or explicit use of ALL on the Films table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Consider what the DAX is doing if you have OptionsMaster[OptionID], OptionMaster[PlanID], and Plans[PlanID] as dimensions in your table visual.

Basically this:

SUMMARIZECOLUMNS (

'OptionMaster'[OptionID],

'OptionMaster'[PlanID],

'Plans'[PlanID],

"Frequency", [Frequency]

)

As pointed out in Indroducing SUMMARIZECOLUMNS, this is an optimized version of something more like this:

FILTER (

SUMMARIZE (

CROSSJOIN (

VALUES ( 'OptionsMaster'[OptionID] ),

VALUES ( 'OptionsMaster'[PlanID] ),

VALUES ( 'Plans'[PlansID] )

),

'OptionsMaster'[OptionID],

'OptionsMaster'[PlanID],

'Plans'[PlansID],

"Frequency", 'OptionsMaster'[Freqency]

),

NOT ( ISBLANK ( [Frequency] ) )

)

Since there are columns from separate tables, it's likely doing a Cartesian product with CROSSJOIN instead of greatly reducing the space with Auto-Exist, which means you are evaluating [Frequency] for 700,000 * 800 rows (the two columns from OptionMaster do get processed together). When you eliminate 'Plans'[PlansID], Auto-Exist does kick since you only have columns from the same table.

I would suggest that instead of using the dimension Plans[PlanID] in your table, you use a measure instead like SELECTEDVALUE ( Plans[PlanID] ) so that you get similar performance to what you see when that column isn't included.

As for explaining what's happing in your workaround, I'll just remind you that a Boolean CALCULATE argument removes and replaces the filter context on the column it modifies, so you've likely destroyed some filtering that is otherwise picked up traversing the bidirectional relationships. I haven't figured this out precisely though. Bidirectional filtering makes it harder to think about.

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 13 | |

| 5 | |

| 5 | |

| 3 | |

| 3 |

| User | Count |

|---|---|

| 25 | |

| 10 | |

| 10 | |

| 6 | |

| 6 |