FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- DAX Commands and Tips

- Re: Are the arguments of filter applied in an orde...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Are the arguments of filter applied in an order?

Hi,

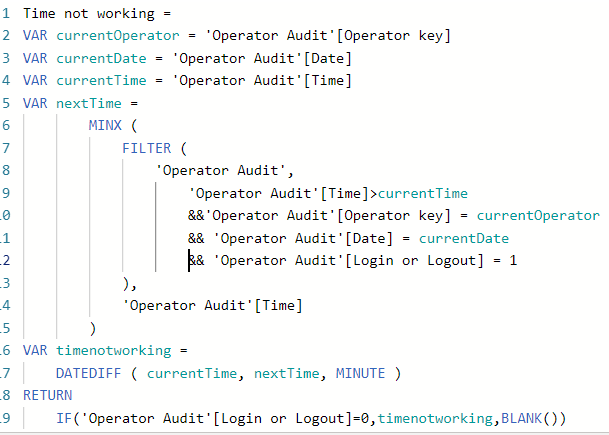

I recently ran into the following problem and I'd like to know how FILTER behaves. I needed to compute a calculated column which would give me the DATEDIFF between the time in the current row and another row. I therefore had to apply a set of filter to my table to perform the correct calculation. This is the calc column I initially created:

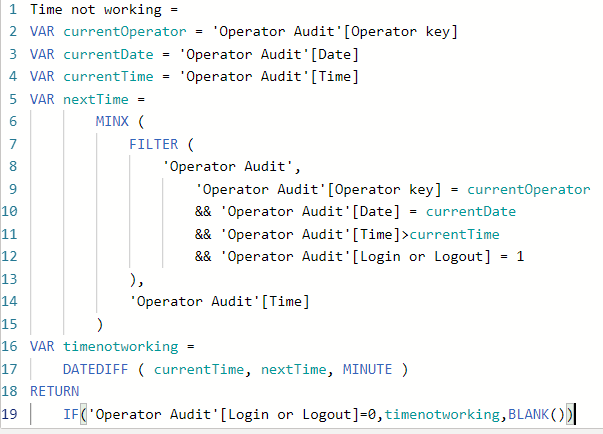

This column took a LONG time to compute and it could only work on a very limited subset of values (the table is 100k rows and I had to reduce it to 10k rows), otherwise it would give me the "there is not enough space to complete this operation" error. I tried to do it in many different ways and ultimately it would only work properly if I rewrote it like this:

This code is identical except for the order of the filter arguments. In this one, I put operator key and date filter arguments BEFORE the time one.

I thought filter arguments in a filter were applied "simultaneously" but I guess I was wrong. The way I see it this is what is happening: the second measure works because filters are applied in such an order that the iterations on the table are far less, because first it identifies the operator and the date, and only after that it computes the time>currenttime filter.

Am I correct in assuming this or is there another explanation? Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Recently, I've calculated the greatest common divisor in PQ for a list of values that were taken from the fact table for each row of a dimension.

Everything is possible in PQ when it comes to massaging data...

Best

Darek

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ciao @marcorusso

you're right, it *should* not behave like this with a mere 100k rows table. I've used similar functions in tables with million rows without issues.

However, there is something very suprising (to me) in your answer:

Overall, creating a calculated column over a large table is never a good idea.

This is very strange. Many people and even yourself said "the more you precalculate in advance, the faster the model will be" and it definitely makes sense. It's much better to let PBI to spend a couple of minutes more when loading the model than waiting seconds every refresh of filters.

So what do you mean by this?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In a large table I would store columns computed outside of Power BI (in SQL or in Power Query / M) and not with a calculated column in DAX, because the cost is high and the compression not optimal (especially when the column produces a small number of unique values).

Calculated columns are very useful when you have to compute data in small tables using data of other tables, maybe aggregating large volume of data in other tables - something that is more expensive in SQL rather than in DAX.

The calculated column described in this thread is so easy (and efficient if you have proper indexes) to calculate in SQL...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

ok thanks @marcorusso

that makes sense.

honestly, I was not thinking about SQL as this looked like a fairly simple scenario in DAX (and I've used similar methods countless times - with success).

Regarding M, I never used PowerQuery to refer other rows: I always used PQ with a "single row" mindset (I've done complex transformations, but never "lookups" like this). I'll investigate.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I agree - PQ is not ideal for this kind of query (from a performance perspective). SQL should work pretty well with good indexes.

DAX is an option, but I wouldn't use it for a few million rows. But for 100k it's strange it raises the memory issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The performance is not ideal using the FILTER that way and different query plans might produce different performances. You should try the code below.

Time not working =

IF (

'Operator Audit'[Login or Logout] = 0,

VAR currentTime = 'Operator Audit'[Time]

VAR nextTime =

CALCULATE (

MIN ( 'Operator Audit'[Time] ),

ALLEXCEPT (

'Operator Audit',

'Operator Audit'[Operator key],

'Operator Audit'[Date]

),

'Operator Audit'[Login or Logout] = 1,

'OperatorAudit'[Time] > currentTime

)

VAR timenotworking =

DATEDIFF ( currentTime, nextTime, MINUTE )

RETURN

timenotworking

)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tried this code but it doesn't work, I keep getting the run out of memory error. As of now, the only piece of code that works is the second one from the original post, with that specific order of filter arguments. If I switch them, it doesn't work, because it goes out of memory.

I really don't get what is happening, tomorrow I'll try to check the model and the data again to see if it's some kind of mistake unrelated or only partially related with the code.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

But the best way to make calculated columns... is to use Power Query and in case it's taking a long time - manually tune the M code. This is by far the best and most performant solution.

Best

Darek

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Darlove,

thanks for the explanations. Yes, when possible I create my calc columns in Power Query, but I'm not an expert in the M language and also in PQ you can't access other rows as far as I know (which is very little so I might be wrong) therefore the kind of calc column I'm trying to compute wouldn't be possible. Am I correct in assuming this or is there a way to perform that kind of calculation in PQ? I'm not asking for the code, just "conceptually speaking"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It's definitely possible that the CALCULATE solution have the same problem, I was just guessing. The problem is that we are discussing about an optimization issue. Which means that every month you might have a different behavior of the engine. Overall, creating a calculated column over a large table is never a good idea.

However, it is a little bit surprising having this kind of problem with just 100k rows. The FILTER solution doesn't have a context transition, but the engine could end up in a query plan that is similar to the one with CALCULATE. Maybe there is something else in the model we're not considering and that has some other side effect.

You should study the problem comparing the different query plans. In order to simulate the calculated column, use EVALUATE ADDCOLUMNS ( table, "new column", <expression> )

in DAX Studio and see what is the impact of changing the order of the FILTER operators. I would also test the query plan using CALCULATE. As I said, it's a small table to generate these problems, so there should be something else.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

| User | Count |

|---|---|

| 19 | |

| 10 | |

| 9 | |

| 4 | |

| 4 |

| User | Count |

|---|---|

| 31 | |

| 31 | |

| 20 | |

| 12 | |

| 12 |