Join the #PBI10 DataViz contest

Power BI is turning 10, and we’re marking the occasion with a special community challenge. Use your creativity to tell a story, uncover trends, or highlight something unexpected.

Get startedJoin us at FabCon Vienna from September 15-18, 2025, for the ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM. Get registered

- Microsoft Fabric Community

- Fabric community blogs

- Power BI Community Blog

- Partitioning of Aggregate tables into partial Dire...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Partitioning of Aggregate tables into partial Direct Query or Import by age of data

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Problem statement

- Assumptions

- Introduction and Setup

- Why Bother?

- The Data Model

- Parameter Setup

- Create the parameter

- Load the parameter as a table

- Filter the Data

- The Calculation Group

- CONCLUSION

Problem statement

Even narrow aggregate tables can exceed capacity limits for memory storage. In scenarios where multiple aggregated fact tables are required, the constraints become even more problematic. Additionally, developers have to wait for the large import tables to refresh locally before they can continue their work. In many cases, it's not neccesary for all data to be included in the in-memory aggregation. Older data that is retrieved infrequently could be "archived," however PBI doesn't have this functionality built into it.

Assumptions

This article assumes the reader is already familiar with all PowerBI storage modes (Direct Query, Import and Dual) as well as Aggregation tables, Calculation Groups and Tabular Editor. All of these tools and functionalities augment each other to make the solution work.

Introduction and Setup

First off, this is a long and in-depth article. I didn't want to just throw the solution out there without explaining how I got to it and some of the gotcha's I encountered along the way. Overall, the steps are actually pretty basic and simple. For this demonstration I’m using AdvertureWorksDW2016_EXT, PowerBI Desktop 2.96 and Tabular Editor 2.13.2. My PowerBI file is very trim since I’m only pulling in enough data to demonstrate the solution. The AdventureWorks database is relatively small so this solution might seem pointless, however, the datasets many of us typically work with are huge. Even when our fact tables (in the 10’s-100's of millions of rows) are reduced to a handful of dimensions in aggregate, the PBIX files are still too large to publish to the PowerBI service. There are many ways one can partition their data but the common scenario demonstrated here is partitioning by a date of some sort. In other words, we aggregate the most current data in memory and defer to direct query for historical data. For this demonstration I'm doing everything in the PowerBI file so that it is a working demonstration in itself and only needs to be connected to an instance of AdvertureWorksDW2016_EXT to function.

Why Bother?

Some of you might be asking “Why do this at all? Can’t we just limit the data in our aggregate table and let PBI defer to Direct Query if needed?” Aggregation tables don’t work that way, in that, they rely on the combination of relationships defined and in scope, not the presence or absense of data within the aggregated table. I’ll demonstrate this in more detail later to clarify.

The Data Model

|

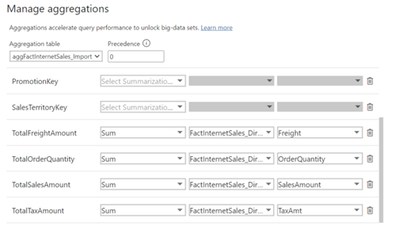

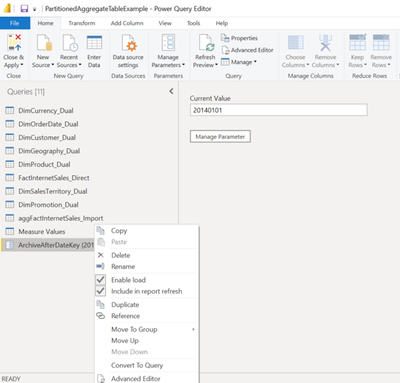

This is the data model for the demonstration. It’s pretty basic and I’ve added a suffix to each table to indicate it’s storage mode as Direct, Import or Dual. Please, take note that “DimCurrency_Dual” and “DimCustomer_Dual” are not related to the aggregated table “aggFactInternetSales_Import” “Measure Values” is an empty placeholder table for holding the measures in. “ArchiveAfterDateKey” table is actually our parameter with the “enable load” option set.

|

|

|

The model has an aggregate table defined as “aggFactInternetSales_Import” which is summary of “Freight”, “OrderQuantity”, “SalesAmount” and “TaxAmount” and are pre-aggregated in SQL by dimensions “Product”, “OrderDate”, “Sales Territory” and “Promotion.”

|

|

Parameter Setup

To define the point in time we’d like to switch from in-memory data to Direct Query, we need a way to pass a scalar value to all of the tables that need to be filtered. As mentioned before, this can be done completely on the database side using tables and views but for this demo I’m using a PowerBI parameter to keep it all self contained in the PBIX.

Create the parameterIn Power Query, create a new parameter. For this demo, I created "ArchiveAfterDateKey" and I'm using the interger natural key for filtering |

|

Load the parameter as a tableThis step makes our parameter available to the model which we'll need for a calculation group. |

|

Filter the Data

In the introduction I posed the "Why Bother?" question as to why we can’t just limit the data in the aggregation table and be done with it. Let’s explore that now. In the next several steps we are going to play with various filtering to show the different behaviors in action.

| To begin the partition process, we need to filter our imported aggregation table so that it only includes recent data as defined by our parameter. The image on the right will guide you if needed. |  |

|

No matter what we do we are only getting recent data. This demonstrates the behavior mentioned in the "Why Bother?" Because "Order Date" is the only dimension in scope, and it's part of the aggregate, PowerBI is perfectly happy using the aggregate table even though there are a lot of periods missing.

|

|

|

Let's convince PowerBI to ignore the aggregate by using one of our non-aggregated dimensions. Now that we are using a non-aggregated dimension, PowerBI ignores the aggregated table all together and choses Direct Query instead. All of our data is there now, including 2014 because we only filtered the data loaded into the aggregate table. The Direct Query table still has the full range of data.

|

|

|

I mentioned in the Teaser that there are caveots to this solution. Since we filtered the aggregate table to only import recent periods, it seems logical to filter the Direct Query table to exclude those periods. We can explore that by applying the opposite filter for our parameter to the Direct Query table. Given the warning below, keep this step in mind. We will circle back to it and undo it towards the end. WARNING! This will introduce a data quality issue. Don’t do this in your production or mission critical app!

|

|

|

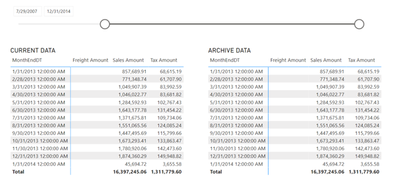

Let's add two matrix visuals to our page with MonthEndDT (instead of DateDT) on rows and some measures on columns. We also need a slicer on MonthEndDT to play with. I've also updated my parameter to include year 2013 in aggregate for more data. You'll notice that both matrices are displaying the same "recent" data. This is expected at this point.

|

|

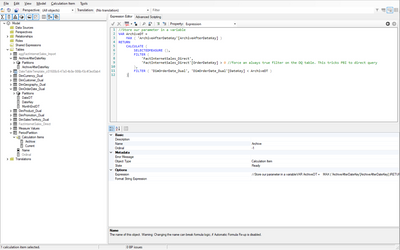

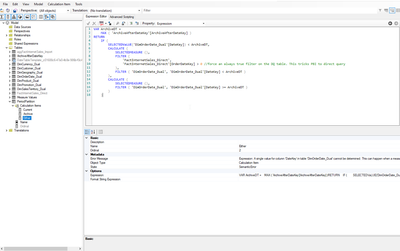

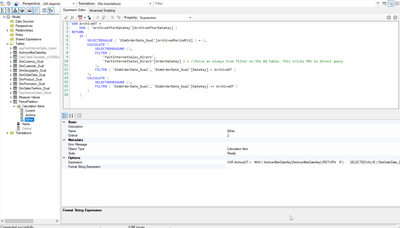

The Calculation GroupUsing Tabular Editor, create the calculation group. I've called mine "PeriodPartition" and created two Calculation Items, "Archive" and "Current" Rember how we set "Enable Load" on the PowerQuery parameter? This is where it comes in. We reference it as a variable in our DAX:

|

|

VAR ArchiveDT =

MAX ( 'ArchiveAfterDateKey'[ArchiveAfterDateKey] )

The first calculation group "Archive" is where the magic really happens, and all it takes is a simple filter on the Direct Query table that resolves to "True" but doesn't actually eliminate rows. This convinces PowerBI that it can't use the aggregate table and needs to Direct Query instead.

VAR ArchiveDT =

MAX ( 'ArchiveAfterDateKey'[ArchiveAfterDateKey] )

RETURN

CALCULATE (

SELECTEDMEASURE (),

FILTER (

'FactInternetSales_Direct',

'FactInternetSales_Direct'[OrderDateKey] > 0 //force an always true filter on the DQ table. This tricks PBI to direct query

),

FILTER ( 'DimOrderDate_Dual', 'DimOrderDate_Dual'[DateKey] < ArchiveDT )

)

The "Current" calculation item is pretty basic and doesn't do anything but filter the aggregated table, which probably isn't neccesary since the table is pre-filterd. This is another caveot we will come back to as well.

VAR ArchiveDT =

MAX ( 'ArchiveAfterDateKey'[ArchiveAfterDateKey] )

RETURN

CALCULATE (

SELECTEDMEASURE (),

FILTER ( 'DimOrderDate_Dual', 'DimOrderDate_Dual'[DateKey] >= ArchiveDT )

)

|

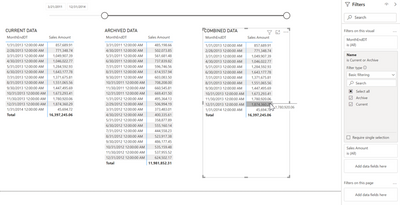

To apply this, we need to filter our matrices using the new calculation items as shown here. With the calculation groups applied as filters you can see in Perfromance analyzer that the Archive and Current matrices perform the way we expect and each matrix contains data in respect to it's filter.

|

|

|

What we really want though is a single set, with both archived and current data combined as if it were sourced from one query while being transparent to the user. To walk through this we will add a "Combined Data" matrix on the page. Now we start to see some of the caveots I mentioned. In the screen shot to the right, I've filtered "Combined Data" to use both values. I'd expected to see the union of both sets between 3/21/2011 and 12/31/2014. What's happening is that PowerBI is prefering the aggregate table because we have no non-aggregated dimensions in scope.

|

|

|

Instead of trying to combine the two calculation groups for now, we'll create a third calculated item. I've create a calculated item called "Either" with the following DAX:

|

|

VAR ArchiveDT =

MAX ( 'ArchiveAfterDateKey'[ArchiveAfterDateKey] )

RETURN

IF (

CALCULATE (

SELECTEDMEASURE (),

FILTER (

'FactInternetSales_Direct',

'FactInternetSales_Direct'[OrderDateKey] > 0 //force an always true filter on the DQ table.

),

FILTER ( 'DimOrderDate_Dual', 'DimOrderDate_Dual'[DateKey] < ArchiveDT )

),

CALCULATE (

SELECTEDMEASURE (),

FILTER ( 'DimOrderDate_Dual', 'DimOrderDate_Dual'[DateKey] >= ArchiveDT )

)

)

|

Here are the results after saving from Tabular editor and updating our "Combined Data" filter to use the "Either" calculation item instead of the other two. Notice we are only seeing "Archive" data now. I've tried various DAX in the "Either" calculation item with no success so the next steps are my workaround.

|

|

|

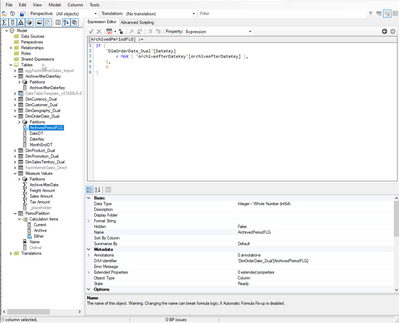

We need an indicator on the period to tell us if the period is archived or not. To keep everything for this demo in PowerBI, I'm doing it with a calculated column on the table with the following DAX:

|

|

IF (

'DimOrderDate_Dual'[DateKey]

< MAX ( 'ArchiveAfterDateKey'[ArchiveAfterDateKey] ),

1,

0

)

|

Now we just need to adjust the DAX in the "Either" calculation item to filter on the new calculated column instead. Adjust the DAX like so: |

|

VAR ArchiveDT =

MAX ( 'ArchiveAfterDateKey'[ArchiveAfterDateKey] )

RETURN

IF (

SELECTEDVALUE ( 'DimOrderDate_Dual'[ArchivedPeriodFLG] ) = 1,

CALCULATE (

SELECTEDMEASURE (),

FILTER (

'FactInternetSales_Direct',

'FactInternetSales_Direct'[OrderDateKey] > 0

),

FILTER ( 'DimOrderDate_Dual', 'DimOrderDate_Dual'[DateKey] < ArchiveDT )

),

CALCULATE (

SELECTEDMEASURE (),

FILTER ( 'DimOrderDate_Dual', 'DimOrderDate_Dual'[DateKey] >= ArchiveDT )

)

)

|

At this point, the combined set seems to be functioning just like we want it to. As the date slicer is modified, the data in the table adapts accordingly and it only does a Direct Query for dates outside of the "Current" partition. Everything seems fine, but we haven't added a non-aggregated dimension to the scope. To do that, let's add Customer Geography to each of the grids. Notice in both our "Combined Data" and "Current Data" tables the data disappears. Because we introduced a dimension that isn't part of the aggregate, PowerBI gives full preference to Direct Query, and we filtered those records out using the parameter

|

|

|

As you can see in the animation, our data is working as expected. Better yet, nothing is duplicated or doubled as a result.

|

|

|

Finally, we need to apply "SELECTEDMEASUREFORMATSTRING()" to the "Format String Expression" property of each calculation item to ensure those format strings carry through from the original measure.

|

|

CONCLUSION

If it seems like the solution is “breaking” PBI’s aggregation functionality you are justified in thinking that. That’s really what is happening in that simple DAX logic in the calc group. We are forcing PBI to ignore the aggregate table because it lacks the rows we need, even though the relationships are satisfied for purpose of aggregation. You can get by with just the "Either” calculation item and apply it globally or on a per page/visual basis. That is the result we are really after, although the other two calculated items are useful for debugging.

Another point of consideration is training. Since this will be part of your Dataset, any consumers that dataset in their reports, whether it's PowerBI, Excel etc., will need to be educated on it's existence and when and how to use it.

This has been a long and very detailed article for what amounts to a few steps:

- Identify the dimension and key that will “break” your aggregated table where you want it to. Period, Date, Sequence etc.

- Add a Boolean indicator on that dimension with logic to determine the true/false value

- Filter your aggregated table so it only has one side of the Boolean result

- Create a calculation group with the calculation item(s) and write your DAX similar to the “Either” example.

- Apply the calculation item as a filter to your visuals or pages accordingly.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- #PBI10 Dataviz Contest | Frequently Asked Question...

- How to Organize Measures Effectively in Power BI

- Power BI Enhancements You Need to Know - Part 6: P...

- #PBI10 Dataviz Contest | Meet the Judges

- Dataviz Contests - How to Submit

- #PBI10 Dataviz Contest

- Unlock Deeper Insights: Data Modeling Best Practic...

- How to Display Power BI Reports in a Continuous Sl...

- What Power BI Developers Often Miss and Why Does I...

- Power BI Enhancements You Need to Know – Part 5: O...

- warunad on: How to Organize Measures Effectively in Power BI

-

Magudeswaran_MR

on:

Power BI Enhancements You Need to Know - Part 6: P...

on:

Power BI Enhancements You Need to Know - Part 6: P...

-

technolog

on:

#PBI10 Dataviz Contest | Meet the Judges

technolog

on:

#PBI10 Dataviz Contest | Meet the Judges

-

slindsay

on:

Dataviz Contests - How to Submit

slindsay

on:

Dataviz Contests - How to Submit

-

slindsay

on:

#PBI10 Dataviz Contest

slindsay

on:

#PBI10 Dataviz Contest

- TharunChalla on: What Power BI Developers Often Miss and Why Does I...

-

Magudeswaran_MR

on:

Power BI Enhancements You Need to Know – Part 5: O...

on:

Power BI Enhancements You Need to Know – Part 5: O...

-

Magudeswaran_MR

on:

The Power of Automation: One-Click DAX Formulas an...

on:

The Power of Automation: One-Click DAX Formulas an...

-

Olayemi_Awofe

on:

Power BI Enhancements You Need to Know – Part 4: T...

on:

Power BI Enhancements You Need to Know – Part 4: T...

-

Ahmedx

on:

Custom Visualization of a 100% Stacked Bar Chart i...

Ahmedx

on:

Custom Visualization of a 100% Stacked Bar Chart i...

-

How to

714 -

Tips & Tricks

692 -

Events

142 -

Support insights

121 -

Opinion

97 -

DAX

66 -

Power BI

65 -

Power Query

62 -

Power BI Dev Camp

45 -

Power BI Desktop

40 -

Roundup

39 -

Dataflow

22 -

Power BI Embedded

20 -

Featured User Group Leader

20 -

Time Intelligence

19 -

Tips&Tricks

18 -

PowerBI REST API

12 -

Data Protection

10 -

Power BI Service

8 -

Power Query Tips & Tricks

8 -

finance

8 -

Direct Query

7 -

Power Automate

6 -

Data Visualization

6 -

Python

6 -

Power BI REST API

6 -

Auto ML

6 -

financial reporting

6 -

Data Analysis

6 -

service

5 -

Power BI PowerShell

5 -

Machine Learning

5 -

Income Statement

5 -

Dax studio

5 -

powerbi

5 -

PowerShell

4 -

Desktop

4 -

Bookmarks

4 -

Line chart

4 -

Group By

4 -

community

4 -

RLS

4 -

M language

4 -

Paginated Reports

4 -

External tool

4 -

Power BI Goals

4 -

Webinar

3 -

CALCULATE

3 -

R visual

3 -

Reports

3 -

PowerApps

3 -

Data Science

3 -

Azure

3 -

Data model

3 -

Conditional Formatting

3 -

Visualisation

3 -

Life Sciences

3 -

Administration

3 -

M code

3 -

Visuals

3 -

SQL Server 2017 Express Edition

3 -

R script

3 -

Aggregation

3 -

calendar

3 -

Gateways

3 -

R

3 -

M Query

3 -

index

2 -

RANKX

2 -

PBI Desktop

2 -

Date Dimension

2 -

Integer

2 -

Visualization

2 -

Power BI Challenge

2 -

Query Parameter

2 -

Date

2 -

SharePoint

2 -

Power BI Installation and Updates

2 -

How Things Work

2 -

Tabular Editor

2 -

rank

2 -

ladataweb

2 -

Troubleshooting

2 -

Date DIFF

2 -

Transform data

2 -

Healthcare

2 -

Tips and Tricks

2 -

Incremental Refresh

2 -

Number Ranges

2 -

Query Plans

2 -

Power BI & Power Apps

2 -

Random numbers

2 -

Day of the Week

2 -

Custom Visual

2 -

VLOOKUP

2 -

pivot

2 -

calculated column

2 -

M

2 -

hierarchies

2 -

Power BI Anniversary

2 -

Language M

2 -

inexact

2 -

Date Comparison

2 -

Power BI Premium Per user

2 -

Forecasting

2 -

REST API

2 -

Editor

2 -

Split

2 -

measure

2 -

Microsoft-flow

2 -

Paginated Report Builder

2 -

Working with Non Standatd Periods

2 -

powerbi.tips

2 -

Custom function

2 -

Reverse

2 -

PUG

2 -

Custom Measures

2 -

Filtering

2 -

Row and column conversion

2 -

Python script

2 -

Nulls

2 -

DVW Analytics

2 -

parameter

2 -

Industrial App Store

2 -

Week

2 -

Date duration

2 -

Formatting

2 -

Weekday Calendar

2 -

Support insights.

2 -

construct list

2 -

slicers

2 -

SAP

2 -

Power Platform

2 -

Workday

2 -

external tools

2 -

deployment

1 -

ssrs traffic light indicators

1 -

SQL

1 -

trick

1 -

Scripts

1 -

Color Map

1 -

Industrial

1 -

Weekday

1 -

Working Date

1 -

Space Issue

1 -

Emerson

1 -

Date Table

1 -

Cluster Analysis

1 -

Stacked Area Chart

1 -

union tables

1 -

Number

1 -

Start of Week

1 -

Tips& Tricks

1 -

Workspace

1 -

Theme Colours

1 -

Text

1 -

Flow

1 -

Publish to Web

1 -

Extract

1 -

Topper Color On Map

1 -

Historians

1 -

context transition

1 -

Custom textbox

1 -

OPC

1 -

Zabbix

1 -

Label: DAX

1 -

Business Analysis

1 -

Supporting Insight

1 -

rank value

1 -

Synapse

1 -

End of Week

1 -

Tips&Trick

1 -

Showcase

1 -

custom connector

1 -

Waterfall Chart

1 -

Power BI On-Premise Data Gateway

1 -

patch

1 -

Top Category Color

1 -

A&E data

1 -

Previous Order

1 -

Substring

1 -

Wonderware

1 -

Power M

1 -

Format DAX

1 -

Custom functions

1 -

accumulative

1 -

DAX&Power Query

1 -

Premium Per User

1 -

GENERATESERIES

1 -

Report Server

1 -

Audit Logs

1 -

analytics pane

1 -

step by step

1 -

Top Brand Color on Map

1 -

Tutorial

1 -

Previous Date

1 -

XMLA End point

1 -

color reference

1 -

Date Time

1 -

Marker

1 -

Lineage

1 -

CSV file

1 -

conditional accumulative

1 -

Matrix Subtotal

1 -

Check

1 -

null value

1 -

Excel

1 -

Cumulative Totals

1 -

Report Theme

1 -

Bookmarking

1 -

oracle

1 -

mahak

1 -

pandas

1 -

Networkdays

1 -

Button

1 -

Dataset list

1 -

Keyboard Shortcuts

1 -

Fill Function

1 -

LOOKUPVALUE()

1 -

Tips &Tricks

1 -

Plotly package

1 -

Sameperiodlastyear

1 -

Office Theme

1 -

matrix

1 -

bar chart

1 -

Measures

1 -

powerbi argentina

1 -

Canvas Apps

1 -

total

1 -

Filter context

1 -

Difference between two dates

1 -

get data

1 -

OSI

1 -

Query format convert

1 -

ETL

1 -

Json files

1 -

Merge Rows

1 -

CONCATENATEX()

1 -

take over Datasets;

1 -

Networkdays.Intl

1 -

refresh M language Python script Support Insights

1 -

Governance

1 -

Fun

1 -

Power BI gateway

1 -

gateway

1 -

Elementary

1 -

Custom filters

1 -

Vertipaq Analyzer

1 -

powerbi cordoba

1 -

Model Driven Apps

1 -

REMOVEFILTERS

1 -

XMLA endpoint

1 -

translations

1 -

OSI pi

1 -

Parquet

1 -

Change rows to columns

1 -

remove spaces

1 -

Get row and column totals

1 -

Retail

1 -

Power BI Report Server

1 -

School

1 -

Cost-Benefit Analysis

1 -

DIisconnected Tables

1 -

Sandbox

1 -

Honeywell

1 -

Combine queries

1 -

X axis at different granularity

1 -

ADLS

1 -

Primary Key

1 -

Microsoft 365 usage analytics data

1 -

Randomly filter

1 -

Week of the Day

1 -

Azure AAD

1 -

query

1 -

Dynamic Visuals

1 -

KPI

1 -

Intro

1 -

Icons

1 -

ISV

1 -

Ties

1 -

unpivot

1 -

Practice Model

1 -

Continuous streak

1 -

ProcessVue

1 -

Create function

1 -

Table.Schema

1 -

Acknowledging

1 -

Postman

1 -

Text.ContainsAny

1 -

Power BI Show

1 -

Get latest sign-in data for each user

1 -

API

1 -

Kingsley

1 -

Merge

1 -

variable

1 -

Issues

1 -

function

1 -

stacked column chart

1 -

ho

1 -

ABB

1 -

KNN algorithm

1 -

List.Zip

1 -

optimization

1 -

Artificial Intelligence

1 -

Map Visual

1 -

Text.ContainsAll

1 -

Tuesday

1 -

help

1 -

group

1 -

Scorecard

1 -

Json

1 -

Tops

1 -

financial reporting hierarchies RLS

1 -

Featured Data Stories

1 -

MQTT

1 -

Custom Periods

1 -

Partial group

1 -

Reduce Size

1 -

FBL3N

1 -

Wednesday

1 -

Power Pivot

1 -

Quick Tips

1 -

data

1 -

PBIRS

1 -

Usage Metrics in Power BI

1 -

Multivalued column

1 -

Pipeline

1 -

Path

1 -

Yokogawa

1 -

Dynamic calculation

1 -

Data Wrangling

1 -

native folded query

1 -

transform table

1 -

UX

1 -

Cell content

1 -

General Ledger

1 -

Thursday

1 -

Table

1 -

Natural Query Language

1 -

Infographic

1 -

automation

1 -

Prediction

1 -

newworkspacepowerbi

1 -

Performance KPIs

1 -

HR Analytics

1 -

keepfilters

1 -

Connect Data

1 -

Financial Year

1 -

Schneider

1 -

dynamically delete records

1 -

Copy Measures

1 -

Friday

1 -

Q&A

1 -

Event

1 -

Custom Visuals

1 -

Free vs Pro

1 -

Format

1 -

Active Employee

1 -

Custom Date Range on Date Slicer

1 -

refresh error

1 -

PAS

1 -

certain duration

1 -

DA-100

1 -

bulk renaming of columns

1 -

Single Date Picker

1 -

Monday

1 -

PCS

1 -

Saturday

1 -

update

1 -

Slicer

1 -

Visual

1 -

forecast

1 -

Regression

1 -

CICD

1 -

Current Employees

1 -

date hierarchy

1 -

relationship

1 -

SIEMENS

1 -

Multiple Currency

1 -

Power BI Premium

1 -

On-premises data gateway

1 -

Binary

1 -

Power BI Connector for SAP

1 -

Sunday

1 -

Training

1 -

Announcement

1 -

Features

1 -

domain

1 -

pbiviz

1 -

sport statistics

1 -

Intelligent Plant

1 -

Circular dependency

1 -

GE

1 -

Exchange rate

1 -

Dendrogram

1 -

range of values

1 -

activity log

1 -

Decimal

1 -

Charticulator Challenge

1 -

Field parameters

1

- 06-29-2025 - 07-01-2025

- 06-22-2025 - 06-28-2025

- 06-15-2025 - 06-21-2025

- 06-08-2025 - 06-14-2025

- 06-01-2025 - 06-07-2025

- 05-25-2025 - 05-31-2025

- 05-18-2025 - 05-24-2025

- 05-11-2025 - 05-17-2025

- 05-04-2025 - 05-10-2025

- 04-27-2025 - 05-03-2025

- 04-20-2025 - 04-26-2025

- 04-13-2025 - 04-19-2025

- 04-06-2025 - 04-12-2025

- 03-30-2025 - 04-05-2025

- View Complete Archives