
Re: Publishing a report with SDF in it never ends....


Share your thoughts on streaming dataflows aka SDF (preview feature)

Back at MBAS, our CVP Arun Ulag gave everybody a sneak peek of streaming dataflows, the new experience for real-time data preparation and consumption in Power BI. Later, at BUILD, we showed an extended demo of the current bits being tried by several of our customers as part of the private preview. And today, streaming dataflows is finally released to the world for public preview, with even more updates and a new UI.

We would love to hear your feedback and opinions to help us decide what comes next and how to improve this functionality in general. Thank you so much in advance for your feedback; we will be on the lookout to answer any questions you might have and to listen to any ideas you come up with.

Cheers

The streaming dataflows team


Hello. When can we expect streaming dataflows to move out of preview? The feature isn't included in "Trusted Microsoft Services" yet, so it won't work for us, since our Azure firewall settings only allow trusted Microsoft services. I assume it will become "trusted" once it moves out of preview?


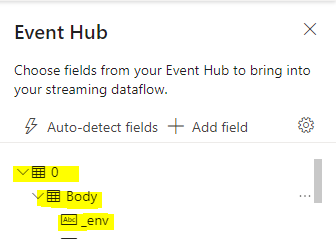

Hello, it seems that streaming dataflows don't work with items nested more than one level deep. For example, when I try to pull the _env field, it doesn't produce any output. Any ideas?


Hi,

"Neither of the time fields will appear in the input preview. You need to add them manually." Is this also true for the deviceID (if there is more than one device connected to the IoT Hub)? And how do I do that?


Hello, I'm using a streaming dataflow. My data source has a Record field type, but I can't bring it into an output table. Is that normal?


That is by design. You can use 'Manage Fields' and flatten the record using that operator to see it in output.
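To illustrate what "flattening" a record means, here is a minimal Python sketch. It is a hypothetical model of the idea only, not how the 'Manage Fields' operator is implemented; the function name `flatten_record` and the dotted column naming (`parent.child`) are assumptions chosen for illustration.

```python
from typing import Any, Dict


def flatten_record(record: Dict[str, Any], prefix: str = "", sep: str = ".") -> Dict[str, Any]:
    """Recursively flatten nested dicts into a single-level dict.

    Nested keys are joined with `sep` (a hypothetical naming scheme),
    so {"a": {"b": 1}} becomes {"a.b": 1}. Non-dict values are kept as-is.
    """
    flat: Dict[str, Any] = {}
    for key, value in record.items():
        column = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            # Descend into nested records of any depth.
            flat.update(flatten_record(value, column, sep))
        else:
            flat[column] = value
    return flat


# Hypothetical IoT Hub-style message with a record nested two levels deep.
message = {
    "deviceId": "sensor-01",
    "payload": {"temperature": 21.5, "_env": {"site": "plant-a", "zone": 3}},
}
print(flatten_record(message))
# {'deviceId': 'sensor-01', 'payload.temperature': 21.5, 'payload._env.site': 'plant-a', 'payload._env.zone': 3}
```

Each leaf value ends up in its own column regardless of nesting depth, which is the single-level shape a table column needs.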


Oh my gosh, it's true! Many thanks.


I can't see the cold table in Power BI Desktop, only the hot table (which works perfectly).

Is there any configuration needed? Retention is set to 7 days; could this be switched off in Power BI Admin?


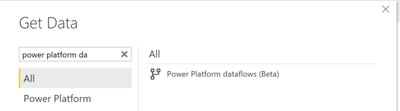

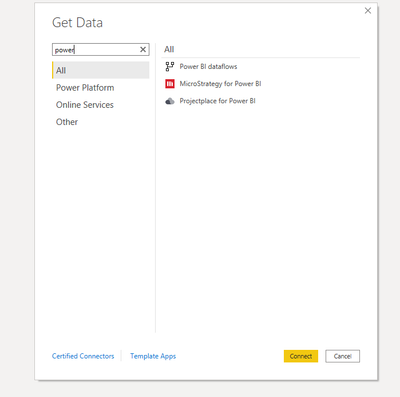

> I can't see the cold table in Power BI Desktop, only the hot table (which works perfectly).

Hi @Anonymous, can you confirm that you're using the "Power Platform dataflows" connector and not "Power BI dataflows"? If so, do you see an error, or does it just take a long time to get the data? Loading cold entities usually takes a little while, since it pulls in all the historical data.

> Retention is set to 7 days; could this be switched off in Power BI Admin?

Unfortunately no. The maximum retention allowed is 7 days (and it applies only to hot entities). You should still be able to access historical data beyond that 7-day window through cold entities.


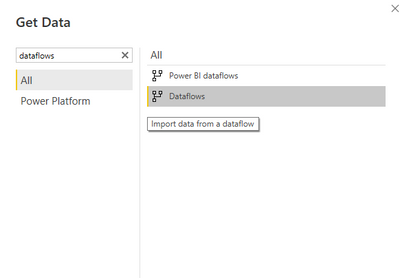

@Shivan, @MiguelMartinez, @Eklavya: I don't see the option for Power Platform dataflows. Is there a preview feature I'm missing?


It has now been renamed to "Dataflows"


Someone needs to update their documentation in that case 😂


Hi!

I receive all message fields from the IoT Hub, but not the internal attributes such as "iothub-enqueuedtime"!

So there's no timestamp at all, which makes the data useless (when the timestamp isn't part of the message itself)!

With Stream Analytics there's no problem with that, because all IoT columns are provided.

Oliver


OK, I figured it out. You have to add these fields manually:

EventProcessedUtcTime

EventEnqueuedUtcTime

The preview doesn't show their content, but they work in the output table.


Hi,

I'm trying to work out the "hot" and "cold" storage process. I have a streaming dataflow for which I set the Retention Duration in the dataflow settings tab to 1 day. I then ran that streaming dataflow and could see data in the hot table when connected via Power BI Desktop. However, after several days I can indeed see the same data in the cold storage table, but the data also remains in the hot storage table. I have tried stopping and resuming the streaming dataflow, but the data stays in the hot table. I would have thought it would no longer be visible there after being moved to cold storage?


Hi Andy,

The idea is slightly different:

Hot - Keeps data only for the retention duration. While the refresh is running, data is actively dropped from hot entities as it falls out of retention.

Cold - Data is always kept in cold, regardless of retention.

Data is never moved from hot to cold.
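The semantics described in this reply can be modeled with a small Python simulation. This is a hypothetical sketch of the behavior as explained in this thread, not the service's implementation: every event is written to both stores, cold keeps everything, and hot is trimmed to the retention window only while the refresh is running. The class, field, and method names are invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List


@dataclass
class Event:
    enqueued: datetime
    value: float


@dataclass
class StreamingDataflowModel:
    """Hypothetical model of hot/cold storage in a streaming dataflow."""
    retention: timedelta
    running: bool = False
    hot: List[Event] = field(default_factory=list)
    cold: List[Event] = field(default_factory=list)

    def ingest(self, event: Event) -> None:
        # Each event is written to both stores; nothing is "moved" from hot to cold.
        self.hot.append(event)
        self.cold.append(event)

    def enforce_retention(self, now: datetime) -> None:
        # Retention is applied to hot only while the refresh is running;
        # cold is never trimmed.
        if not self.running:
            return
        cutoff = now - self.retention
        self.hot = [e for e in self.hot if e.enqueued >= cutoff]


start = datetime(2024, 1, 1)
model = StreamingDataflowModel(retention=timedelta(days=1))
for day in range(5):
    model.ingest(Event(start + timedelta(days=day), float(day)))

now = start + timedelta(days=4)
model.enforce_retention(now)  # refresh stopped: nothing is dropped from hot
print(len(model.hot), len(model.cold))  # 5 5

model.running = True
model.enforce_retention(now)  # refresh running: hot keeps only events within the window
print(len(model.hot), len(model.cold))  # 2 5
```

In this model, stale rows linger in hot for as long as the refresh is stopped, which mirrors the behavior reported further down the thread.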


Hi,

The process still isn't very clear to me.

So does a message always exist in Cold storage no matter the retention period?

What defines the hot retention period? I would not expect to still see data in the hot table several days after the "1 Day" retention period setting in the Dataflow.

Thanks


> So does a message always exist in Cold storage no matter the retention period?

Yes.

> I would not expect to still see data in the hot table several days after the "1 Day" retention period setting in the Dataflow.

Dataflows enforce the retention policy only while the refresh is running. So if your refresh isn't running, you will still see data that is out of retention.

Do you have a use case where you need the data to be dropped out of retention even when the refresh is not running?


Hi,

OK, I understand now after testing. I see the same data in both the hot and cold storage when I set up a new streaming dataflow.

I started the older streaming dataflow, and after a few minutes I now see significantly fewer rows in the hot table, though there are still around 90 rows (which is strange).

I don't have a specific use case, but I do think the documentation could be clearer about the differences between hot and cold, and about the conditions under which data is retained beyond the retention point (e.g. when the dataflow is not running).

Thanks for the responses, much appreciated.


Hi Miguel, I'm evaluating SDF in a Premium Per User (PPU) workspace. I was able to create the dataflow based on IoT Hub, start it, and create a report on top of it in Power BI Desktop, and the report refreshes automatically after I configured change detection. Great! Now, when I try to publish this report to exactly the same (PPU) workspace, publishing never finishes. The same happens when I try to upload the .pbix file to the workspace: a "circle of death" forever, and no error messages. I can easily upload to other, non-Premium workspaces, but of course I lose the streaming functionality. Do you have any hints for me?


OK, I have to amend my post a little; after all, this is preview cloud software, and it's changing fast! Today, publishing the report works fine. But when I try to access the report in the browser in my PPU workspace, I get the error message "The data source Extension is missing credentials and cannot be accessed". I was able to publish there, and I do have a Premium Per User license. What can I do?
