- Power BI forums

- Updates

- News & Announcements

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read-only)

- Power Platform and Dynamics 365 Integrations (Read-only)

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

- Community Blog

- Power BI Community Blog

- Custom Visuals Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Resource Governing in Premium: 1GB model exce...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Resource Governing in Premium: 1GB model exceeding 25GB limit

I have a dataset that I can no longer reliably refresh. The data is fairly large - millions of rows in a wide table (I know - bad design. We're working on that but need to keep this alive in the meantime). When I check the model from DAX Studio Vertipaq Analyzer, it is showing as around 1 GB. Yet we keep getting this error:

"Resource Governing: This operation was canceled because there wasn't enough memory to finish running it. Either reduce the memory footprint of your dataset by doing things such as limiting the amount of imported data, or if using Power BI Premium, increase the memory of the Premium capacity where this dataset is hosted. More details: consumed memory 24973 MB, memory limit 24972 MB, database size before command execution 627 MB"

Is there any strategic way to figure out which part of the process is causing this error? I can't even narrow down if this is happening in the Power Query phase, or when building the model . But I am perplexed how a 1GB model can blow up to nearly 25GB during refresh.

Another oddity -- I've been able to publish this model to a different workspace and sometimes refresh it in the other workspace. Why would it behave differently in different workspaces on the same capacity?

Thank you!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A quick update. The "dataOnly" refreshes are the best way to simplify a refresh. It will allow you to isolate, and determine if the problem is with the calculations. There is a subsequent operation ("calculate") that you should use after the "dataOnly" and it will either work or fail.

There are actually several different types of table features that may consume 10's of GB and cause failures. I removed all the calculated columns from my model and still had memory errors. Eventually I had to remove all the custom hierarchies as well. Finally the memory errors stopped.

These problems were affecting me in a relatively small table with 2 partitions of about 1.5 GB each. My capacity is P1 (25 GB).

So the troubleshooting steps are to first use "dataOnly" and see if the problem is fixed. After that, you can incrementally delete all the interesting stuff from your table (calculated columns and hierarchies) until the problem finally goes away. It is a trial-and-error approach, unfortunately.

I have a support ticket open with CSS to see how we can monitor and quantify these memory problems. On my desktop things will always work fine. It seems unacceptable that my desktop works fine with 10 GB free, but the PBI service fails to refresh - even though it has 25 GB to work with!

These problems with RAM aren't simply related to ambient levels of RAM usage in my capacity. What is happening is a sudden spike that goes from almost no RAM usage to 25 GB RAM usage in just a couple minutes. In any other piece of software I would call this a bug. Even my MPP big data solutions running on Spark wouldn't consume this much RAM so aggressively!

The final result of a refresh is a relatively small partition. So why can't Microsoft find a way to get to that without blowing up? It doesn't seem like rocket science, and it appears that they already found a way to accomplish this on a developer's workstation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@GilbertQ

The dimension is degenerate in this case, so the attributes are found on the fact table.

I really have no idea what Power BI is doing. Perhaps the entire fact table needs to be materialized in memory, along with some sort of internal surrogate identifier of every single row in the fact table. On top of that, perhaps it pre-allocates the memory needed for the cross-product of every level-one attribute with every level-two attribute. I'm just guessing at what could possibly consume this amount of RAM. Whatever it is doing, it seems crazy. By looking at the size of corresponding one-level hierarchies in DAX studio, the final result should be stored as a relatively small amount of hierarchy data. (100 or 200 MB). The problem is that Power BI doesn't have a reasonable way to get from "point A" to "point B" without using an impractical amount of RAM.

As I mentioned, the cardinalities are pretty small (ie. two levels of ~500 members and ~5 million members). It didn't seem like I should be running into these types of problems at this scale.

I think the old multidimensional technology avoided this problem by leaning on the SQL source a bit more heavily, rather than consuming a massive amount of RAM resources on the OLAP side. SQL is a better place for complex algorithms. The query algorithms in SQL are also a *lot* better than whatever Power BI is doing, after "uncompressing" the columnstore data. You hinted at using PowerQuery for gathering this data together in advance, but I think the source (SQL) is better qualified for gathering a distinct set of hierarchy members and relationships. Sometimes I miss Microsoft's "multidimensional" technology which seemed better suited for large datasets as compared to "tabular".

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @dbeavon3

Thanks for your explanation. You could look at using DAX studio to see how large the hierarchy size when you use the View metrics?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @GilbertQ

If/when the "calculate" is able to complete successfully, we would be able to see that hierarchy size.

... I'm only guessing that the final size of the hierarchy data would be small - based on the sizes of the corresponding one-level hierarchies which are created by default ("implicit" hierarchies for MDX). Those must use a different algorithm, as they are created and saved.

However the "calculate" will not succeed for my custom hierarchy. It seems impossible to complete the refresh of this hierarchy on a P1. (Who knows if it would even work on a P2?)

This particular topic doesn't appear in docs, or even in google searches. If custom hierarchies have these restrictions/limitations, there should be documentation to explain why. It is a bit frustrating that these "calculate" algorithms in Microsoft tabular are built in a way that requires customers to have an infinite amount of RAM.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

HI @dbeavon3

This can often happen due to the fact that it has to materialize the data into RAM, and because this is done AFTER the data is loaded into the sementic model it is not compressed. So if you have got millions of rows which are then being used to say create a calculated column it has to do all of that using uncompressed data into RAM which is why the error could occur. I hope that helps explain why it could happen.

Ideally calculated columns should be created in Power Query, so that the data can be compressed on the way into the semantic model.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the update @GilbertQ

In my case it was not the calculated column that was the problem, but rather it was the custom hierarchy. When I first googled for the resource governor messages, there were lots and lots of search results in the community, but all of it pointed me to review my calculated columns. I wasted a lot of time. Finally I opened a support ticket with CSS and we conclusively proved that custom hierarchies can cause a similar problem, especially in a large table ("degenerate dimension" on a fact table).

Oddly the implicit MDX hierarchies on a single column do not consume a ton of RAM while they are being caclulated. Nor do single-column custom hierarchies. Only the custom multi-level hierarchies do (ie. if you have two levels and the top one has ~500 members and the next has ~5 million members then this can consume all of a P1 capacity).

It seems extremely shocking to me. My past experience was with "multidimensional" cubes, and hierarchies were fundamental and reliable. It seems odd that his "tabular" technology can't calculate this hierarchy without filling up 25 GB ram.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AMD0791 @dbeavon3 if it will not work, I would start kicking out calculated tables/columns and if this is not enough some actual columns.

You can use external tools like Measure Killer to tell you which parts of your data model is actually used across reports If you already have reports built on top of this obviously)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the tips. In my case, the question is more of a generalized question. Why is memory misreported? Or why is it being swallowed so quickly? How do I monitor? How do I prevent these problems from happening in my future models?

Based on your tips, and those from @GilbertQ it sounds like there are some well-known problems with memory usage for models that have a calculate column. Gilbert also said "yes this could very much be the reason ..".

Given that you folks are already aware of the problem, are you also aware of a KB article with Microsoft guidance? Or did you discover this yourself by trial-and-error? Did you ever report these problem to Microsoft support (CSS)?

Not to be too snarky , but developers shouldn't have to use a trial-and-error approach in order to figure out how they lost 25 GB of ram . It's not like you can lose 25 GB of ram in the cushions of your couch! That is a TON of memory. Worse yet, this problem ONLY seems to be manifested in the service, and NOT when I'm doing development work. Obviously developers don't want to spend a week building something, only to find that it doesn't work after deploying to the service. Unfortunately that happens far too often when I've worked on complex projects with Power BI. It always seems like a tool that works well for simple jobs, but has lots of trip-hazards after you reach ten or a hundred million rows. I would never have guessed that a calculated column would be so unreliable at scale. If anything, those should be even more scalable and even more reliable than anything coming from PQ/.Net. It is implemented entirely within the tabular engine itself (native code hosted in the "msmdsrv" process).

I'll look at "measure killer". I have never heard of it before. What I'd really like is some sort of a "perfmon counter" that would allow me to observe memory utilization in realtime. I might try to open a support case too. Is there any chance that the CSS support engineers would have direct visibility to see my RAM usage?

I really appreciate your tips. Thanks for sharing. I wish I could find a KB to go along with this. I sometimes find that the community is well aware of the bugs and shortcomings of Power BI, but rarely push Microsoft to create the necessary KB's/docs. When working with these types of problems, it is annoying to have to wade thru massive amounts of community posts (which may either help or hamper). If I open a support case about the missing 25 GB, I'll see if they can publish a new article or something.

So far, you (and GilbertQ) might be on to something. I tested a refresh of the "data only" and it appears to have succeeded:

{ "refresh": { "type": "dataOnly", "objects": [ ...

.. perhaps I will be able to recalculate my columns as a secondary step without running out of memory!

If that works, it is good and bad. It means I'm probably going to have to write a custom process to refresh any large datasets that have calculated columns. What a pain...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A quick update. The "dataOnly" refreshes are the best way to simplify a refresh. It will allow you to isolate, and determine if the problem is with the calculations. There is a subsequent operation ("calculate") that you should use after the "dataOnly" and it will either work or fail.

There are actually several different types of table features that may consume 10's of GB and cause failures. I removed all the calculated columns from my model and still had memory errors. Eventually I had to remove all the custom hierarchies as well. Finally the memory errors stopped.

These problems were affecting me in a relatively small table with 2 partitions of about 1.5 GB each. My capacity is P1 (25 GB).

So the troubleshooting steps are to first use "dataOnly" and see if the problem is fixed. After that, you can incrementally delete all the interesting stuff from your table (calculated columns and hierarchies) until the problem finally goes away. It is a trial-and-error approach, unfortunately.

I have a support ticket open with CSS to see how we can monitor and quantify these memory problems. On my desktop things will always work fine. It seems unacceptable that my desktop works fine with 10 GB free, but the PBI service fails to refresh - even though it has 25 GB to work with!

These problems with RAM aren't simply related to ambient levels of RAM usage in my capacity. What is happening is a sudden spike that goes from almost no RAM usage to 25 GB RAM usage in just a couple minutes. In any other piece of software I would call this a bug. Even my MPP big data solutions running on Spark wouldn't consume this much RAM so aggressively!

The final result of a refresh is a relatively small partition. So why can't Microsoft find a way to get to that without blowing up? It doesn't seem like rocket science, and it appears that they already found a way to accomplish this on a developer's workstation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've been working on a css ticket to try and troubleshoot a problem with the "calculate" phase of a refresh. In that phase, the Power BI service seems to demand a limitless amount of RAM.

In my latest scenario the "degenerate dimension" (on the fact table) consists of ~7 million distinct members at the second level, and only ~300 members at the first level. The fact table has a total of about ~100 million rows. Despite these relatively small numbers, I cannnot refresh ("calculate") the hierarchy without running out of RAM. I believe that Power BI is trying to use somewhere between 20GB and 100GB. But it cannot do so without failing. (I don't know exactly how much Ram it needs, since I only have a P1 capacity. The ambient memory usage is around ~5 GB, before any of my tests.)

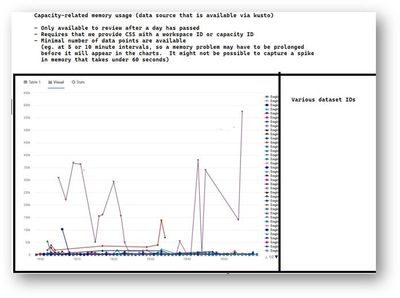

It turns out that the CSS organization is able to use some tools to investigate the RAM utilization of a PBI dataset. They can chart the dataset memory at intervals of ~5-10 minutes. This might be sufficient for a certain level of troubleshooting, to understand how much RAM is consumed during the refresh ("calculate"). Below is a chart that the CSS engineer shared, which provides memory by dataset ID. If there are one or more datasets that are misbehaving, then it should be easy to see that in the chart visualization.

By trial and error, we discovered that it was my custom hierarchies that were causing memory to spike, and not the calculated columns or calculated tables.

The next step is to attempt to ask Microsoft to create documentation - so that customers can learn why these custom hierarchies are comsuming so much RAM during a refresh.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AMD0791

The reason is that the size of your saved PBIX is the fully compressed size. Very often all those components need to be expanded into memory so it actually will use more memory than the PBIX file size.

When you refresh it needs to make a copy of the dataset to refresh it in the background. Now if you have a very wide table with a lot of data that could very easily consume a lot of memory.

One way to make it refresh successfully is to use incremental refreshing which would not refresh the entire dataset, and probably get under the 25GB limit.

Incremental refresh for datasets and real-time data in Power BI - Power BI | Microsoft Learn

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for the input. I'm not talking about the size of the pbix file. I'm looking at the "Total size in Memory" item in the Vertipaq Analyzer in DAX studio and when connecting to the dataset from SSMS via XMLA.

Unfortunately, the existing queries don't support query folding, so incremental refresh is not possible right now. This particular dataset needs a re-design, but that's not a quick fix.

I'd like to see if I can figure out if it's an issue with the Power Query while importing the data, or if it's blowing up while creating the model with a calculated table that's a DAX union of multiple tables. I strongly suspect it's the latter - which might not be fixed with incremental refresh anyways.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AMD0791

If you have a calculated table, then yes this could very much be the reason why the memory allocation is so large.

Could you not create this table in Power Query?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No - the reason this calculated table exists is to create a union between the historical data that's no longer refreshed and the current data that is refreshed.

I can't find good documentation on how PBI desktop processes this stuff. Does it fully process and load the data to the model before it tries to build the calculated table? Or is it building the calculated table as the source query is being refreshed?

I also find it bizarre that it refreshes in one workspace but not another.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AMD0791

It first has to load the data, then second it then creates the calculated table which it has to use even more memory to calculate.

If you have historical data you could give this an older date and use incremental refresh to not have to refresh it every day.

I think the reason it might work for now in another app workspace is that the metrics have not yet caught up and got the new details. In time i would to expect it also to start failing.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your insight.

I've been puzzled by the refresh process a bit because I have a different experience in Power BI desktop depending on whether or not the model includes the calculated table.

The failing model has 3 tables:

Table OLD -- imported, not refreshed.

Table NEW -- imported, refreshed . Source is SQL table, with a bunch of PQ steps after, including merges to other data sources that break query folding.

Table UNION -- table that's a DAX union of Table OLD + Table New. It also has several calculated columns and measures.

If I try to manually refresh Table NEW on its own in the full model, it ticks away for a few hours and I see the record count go up to where I expect and it seems to finish moving the SQL data. Then it clocks away for a couple minutes more with no useful message, and then it just barfs with a memory error. I can't tell if that is coming from a later step in Power Query, or if it's coming from the build of the calculated table. The data in "Table-NEW" is not refreshed.

If I copy the "Table-NEW" query to an empty PBI Desktop file (so no calculated table, columns, or measures) and refresh that table, it takes about 30 minutes to refresh that table and finishes with no error.

The fact that it is so much slower to get the data from SQL in the model with the calculated table makes me think that it is trying to build that calculated table at the same time that it is pulling data from SQL.

I am aware there's significant design deficiencies here for a large data volume. I inherited this thing and it's widely used, so I'm just trying to keep it alive for a while so we can re-calibrate the actual requirements and re-design the dataset to be more sustainable.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have almost the exact same scenario. There is a very small 2GB partition of a model that cannot be refreshed in a P1 capacity (25 GB).

It retrieves about 100 MM rows from SQL.

I've enabled the "Large Dataset Support" (whatever that means) in the service, and it helped for a small amount of time and then I started seeing failures again.

I can fully refresh this locally on my machine, and the msmdsrv.exe process seems to show very minimal RAM usage (normally 5 GB or less). I'm struggling to understand how this small partition can be swallow up more than 25 GB of RAM in the Power BI service!

Most importantly, I haven't found any tool to monitor the memory when my stuff is hosted on the service. Are there any tools in the PBI portal that I'm missing? Memory doesn't seem to be well exposed in the "Fabric capacity dashboard" and even if it was, it probably wouldn't update those metrics fast enough to help me troubleshoot a refresh that is in progress. One thing I haven't tried is SSMS profiler. Will that show me events that explain the rapid loss of memory?

I'm probably going to open a CSS support case as well. It seems pretty annoying that the experience in the service is so much different than what happens locally. Also the service is totally opaque and there is no way for me to watch my memory get swallowed so rapidly.

Helpful resources

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

Power BI Monthly Update - April 2024

Check out the April 2024 Power BI update to learn about new features.