Lakehouse table schema not updating at dataflow refresh

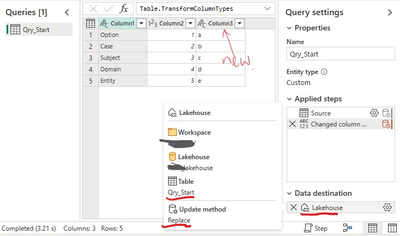

In the online service, I have a simple Dataflow Gen2 with a single 2-column, 3-row query (image below) that publishes to my lakehouse (update method: replace). Updates to the dataflow (i.e. modified data in existing rows, or new rows added) show up in the lakehouse table just fine when the dataflow and lakehouse are refreshed. All good so far.

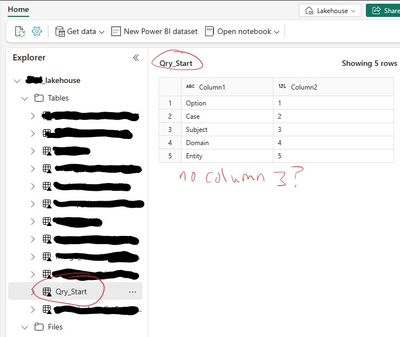

However, if I add a new column to my dataflow query (table structure/schema is changed, simple text column) - taking care to ensure successful publish and lakehouse refresh - the lakehouse table doesn't show the new 3rd column.

Refreshing the individual table in the lakehouse doesn't change anything either.

The relevant 'refresh-timestamped' parquet and json files for the lakehouse table do not reflect the new table schema either.
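One way to confirm what schema the table's JSON files actually record is to read them directly. The following is a minimal sketch, assuming the lakehouse table is stored as a Delta table and that the JSON files in question are the commit files in its `_delta_log` folder, downloaded or mounted to a local path. The function name `latest_schema_columns` is hypothetical. It only reads JSON commits, so it will miss schema information that lives solely in a parquet checkpoint.

```python
import json
from pathlib import Path


def latest_schema_columns(delta_log_dir):
    """Return the column names from the most recent metaData action
    found in the JSON commit files of a Delta log folder, or None."""
    columns = None
    # Commit files are named with zero-padded version numbers,
    # so sorting by name visits them in version order.
    for commit in sorted(Path(delta_log_dir).glob("*.json")):
        for line in commit.read_text(encoding="utf-8").splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            meta = action.get("metaData")
            if meta is not None:
                # schemaString is itself a JSON document listing the fields.
                schema = json.loads(meta["schemaString"])
                columns = [field["name"] for field in schema["fields"]]
    return columns
```

If the list returned after a dataflow refresh still shows only the original two columns, the new column never reached the table's metadata, which would match the behaviour described here.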

In the dataflow query editor, the schema view shows the newly added column, so at least on the dataflow side everything is normal. Also in the dataflow query editor, going through the lakehouse data destination settings and the 'refresh destination schema' dialogue makes no difference, despite the process acknowledging that, quote, "Schema changed since you last set the output settings. Column mappings have been reset to their default". Strangely, if I select "Append" mode it adds the 3rd column's data to the bottom of the 1st and 2nd columns.

The only way to get the changed table to update correctly to the lakehouse is to delete the lakehouse table itself and then refresh the dataflow. Not ideal if you've got upstream dependencies and model relationships to consider.

Same behaviour no matter which of my many dataflow schemas change - the lakehouse always retains the original schema.

Any help greatly appreciated.

Solution: As of 13th Dec 2023 this fix works. When your query schema changes (e.g. you add or delete a column), before clicking 'Publish' to the lakehouse, go into the data destination settings (cog, bottom right) and click 'Next' into 'Choose Destination Target'. This is the critical bit: ensure 'New table' is selected (even though you know that this table already exists in your Lakehouse! I know, so intuitive, right?!). Ensure your destination lakehouse and table name are unchanged, then click 'Next', which should display a message to the effect of 'your schema has changed' (you might need to check the box next to your new column(s) to include them in the schema). Then save and publish. Please note this method does not work on my historical dataflows/tables, only new ones. To get it to work on your older tables you will need to delete them from the lakehouse and re-publish them from your dataflow; from that point on you should be good for future schema changes.

I tried this method but it doesn't work. Selecting "New table" does not allow me to click "Next", so I'm unable to proceed further. Are there any workarounds besides deleting the old table, as stated by the author?

Have you opened the proxy to the required destinations?

Dataflow fails to read from the lakehouse: Solution:

The firewall rules on the gateway server and/or customer's proxy servers need to be updated to allow outbound traffic from the gateway server to the following:

Protocol: TCP

Endpoints: *.datawarehouse.pbidedicated.windows.net, *.datawarehouse.fabric.microsoft.com, *.dfs.fabric.microsoft.com

Port: 1433
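To check whether such a firewall rule is actually in effect, a quick outbound connection test can be run from the gateway server. This is a minimal sketch using only the Python standard library. The helper name `can_reach` is hypothetical, and because the endpoints above are wildcard patterns you would need to substitute a real hostname from your own environment. It opens a direct TCP connection, so it says nothing about traffic that is routed through an HTTP proxy.

```python
import socket


def can_reach(host, port=1433, timeout=5.0):
    """Return True if an outbound TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS resolution failures.
        return False
```

Calling `can_reach` with a concrete host that matches one of the listed patterns should return `True` once the rule is in place. A `False` result suggests the connection is blocked, the host is wrong, or the host does not resolve.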

I'm not sure I can do that, as it would be managed by my IT department, and I'm not sure it would determine whether the "Next" button is enabled for the solution stated above. There doesn't seem to be an issue of "dataflow fails to read from lakehouse" here; the problem is just getting the new column to display in the destination editor.

I have the same problem. Are we missing something?
