



Data source credentials (SAS token) for Azure Blob Storage only on storage account level?

Hello to the community,

and thank you for reading this!

We have an Azure storage account of type Data Lake Storage Gen2, which contains a large number of individual blob containers. We grant access to the blob containers via SAS tokens. The SAS tokens allow access to a specific container, NOT to the complete storage account. When we author Power BI files in Power BI Desktop, using these SAS tokens to access data in a specific blob container in Azure works just fine.

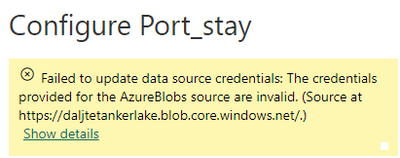

Then, when we publish a Power BI report to a workspace in our tenant (meaning to app.powerbi.com), the data sources in the Azure storage account cannot be updated/refreshed, because Power BI reports that the supplied credentials are invalid.

If we try an SAS token that is authorized for the full storage account, the credentials are accepted, and the data sources can be updated/refreshed.
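The two kinds of token described here can be told apart from their query parameters alone: an account SAS carries `ss` (signed services) and `srt` (signed resource types), while a service SAS scoped to a container carries `sr=c`. The following is a minimal Python sketch that classifies a token this way. The function name `classify_sas` and the sample tokens (with a placeholder `sig`) are hypothetical, and the sketch only inspects parameters; it does not validate the signature.

```python
from urllib.parse import parse_qs


def classify_sas(token: str) -> str:
    """Roughly classify an Azure Storage SAS token by its query parameters.

    - account SAS: carries `ss` (signed services) and `srt` (signed resource types)
    - service SAS: carries `sr` (signed resource), e.g. `c` = container, `b` = blob,
      `d` = directory (Data Lake Storage Gen2)
    """
    params = parse_qs(token.lstrip("?"))
    if "ss" in params and "srt" in params:
        return "account"
    signed_resource = params.get("sr", [""])[0]
    scopes = {"c": "container", "b": "blob", "d": "directory"}
    return scopes.get(signed_resource, "unknown")


# Placeholder tokens: parameter values are illustrative, signatures are redacted
container_token = "?sv=2022-11-02&sr=c&sp=rl&se=2026-01-01T00:00:00Z&sig=REDACTED"
account_token = "?sv=2022-11-02&ss=b&srt=sco&sp=rl&se=2026-01-01T00:00:00Z&sig=REDACTED"
print(classify_sas(container_token))  # container
print(classify_sas(account_token))    # account
```

This does not explain the service behavior by itself; it only helps confirm which kind of token was entered in Desktop and in the published dataset's data source credentials.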

We cannot find any documentation on this, so our question is: is it currently NOT possible to use container-level SAS tokens in the Power BI service (Power BI workspaces)? It seems that way to us.

If you have any experience with this use case, and/or any Microsoft documentation on it, please share it with us.

Thank you 🙂


We have just run into this as well. It doesn't look like anyone in this thread has found a solution or workaround. Has it been reported to Microsoft at all, even as a possible future improvement?


I don't know if it was reported, to be honest.


Can anyone shed some light on this? Perhaps the functionality in the workspace has changed in the meantime, so that other kinds of SAS tokens are now accepted? We would REALLY appreciate some documentation on which kinds of SAS tokens can be used in Power BI workspaces.


Does anyone have any info on this matter?

Is it really not possible to use SAS tokens for specific blob storage containers in a PowerBI workspace?


Hello Rongtie,

and thank you for your answer.

1. The SAS token for the container is valid. We use it in other applications, where it works.

2. The SAS token is passed correctly. As explained above, when we use an SAS token that is authorized for the full storage account, with the same syntax, everything works.

3. We did that (see my post above, sentence below the screenshot), and it works. BUT: We do NOT want everybody to have access to the full storage account, but only to the data in a specific container.

As I wrote above, my question is:

Is it possible to use an SAS token authorized only on a container level in PowerBI for the Web/Power BI Workspace, or are only SAS tokens authorized on a storage account level accepted? To us, the latter seems to be the case. Is there any kind of documentation on this?

Best regards,

Jan


Having the same problem as you describe.

It seems that as soon as you publish the report to the service, the Power BI service recognizes only the top-level storage account as a data source, not the container.

It feels like some kind of bug, since it works fine in Power BI Desktop.
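One way to read this observation: a container URL and its account endpoint share the same host. If the service binds credentials to the account endpoint (a hypothesis raised in this thread, not something confirmed by Microsoft here), then any account-level call, such as listing containers, would need an account SAS, because a container-scoped SAS cannot list the containers in an account. The following Python sketch, using the hypothetical helper `split_storage_url` and hypothetical account/container names, shows how a storage URL decomposes into the account endpoint and the container.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit


def split_storage_url(url: str) -> Tuple[str, Optional[str]]:
    """Split an Azure Storage URL into (account endpoint, container or None).

    Works the same for the Blob endpoint (*.blob.core.windows.net) and the
    Data Lake Storage Gen2 endpoint (*.dfs.core.windows.net).
    """
    parts = urlsplit(url)
    endpoint = f"{parts.scheme}://{parts.netloc}"
    # The first non-empty path segment, if any, is the container (file system)
    segments = [segment for segment in parts.path.split("/") if segment]
    container = segments[0] if segments else None
    return endpoint, container


# "myaccount" and "sales" are hypothetical names
print(split_storage_url("https://myaccount.blob.core.windows.net/sales"))
# ('https://myaccount.blob.core.windows.net', 'sales')
print(split_storage_url("https://myaccount.dfs.core.windows.net"))
# ('https://myaccount.dfs.core.windows.net', None)
```

If the hypothesis holds, the container part is what gets lost when the service shows only the account endpoint as the data source.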


If you find any workarounds, please let me know in here! Thanks 🙂


Hi @JanSchuppius ,

Are you facing the same issue on dataflow?

Please refer to these:

https://learn.microsoft.com/en-us/power-query/connectors/data-lake-storage

https://learn.microsoft.com/en-us/power-query/connectors/azure-blob-storage

I hope it will be helpful.

Thanks,

Sai Teja


Hello Sai, this is NOT an issue with Power Query; there, everything works as intended. This is an issue with the automated refresh of a report in a Power BI workspace. Unfortunately, the documentation links you gave are not helpful.


Hi @JanSchuppius ,

It might be due to the access level you are granting on that blob container. Try switching access levels and check again.

Thanks,

Sai Teja


Hi

We have been experiencing this as well. The SAS token is set at the container level, and that works fine in Desktop, but once the dataset is published, the service cannot replicate that. In the service, the connection seems to point at the storage account level, not the container, and there does not seem to be a way to tell it to use only a specific container.

In the link you kindly provided, the suggested way to make this work in the service seems to be to create the dataflow/dataset in the service to begin with, rather than building it in Desktop and publishing it to a workspace.


Hi @JanSchuppius ,

One possible reason the supplied credentials are reported as invalid is that the SAS token for the specific blob container has expired or lacks the permissions needed to access the data sources in the Azure storage account. Another possibility is that the SAS token is not being passed correctly to Power BI when the report is published to the workspace.

I suggest you check the following:

1. Make sure the SAS token used for the specific blob container is still valid and has the necessary permissions to access the data sources in the Azure storage account.

2. Check whether the SAS token is being passed correctly to Power BI when the report is published to the workspace.

3. You can also try using a SAS token that is authorized for the full storage account to see if that resolves the issue.
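Checks 1 and 2 can be partly scripted. The following Python sketch reads the `se` (signed expiry) and `sp` (signed permissions) parameters of a SAS token and reports obvious problems. It assumes that read (`r`) and list (`l`) are the permissions needed to browse and load blobs from a container; that requirement and the helper name `check_sas` are assumptions, and the sketch cannot verify the signature itself, which only Azure Storage can do.

```python
from datetime import datetime, timezone
from typing import List, Optional
from urllib.parse import parse_qs


def check_sas(token: str, now: Optional[datetime] = None, needed: str = "rl") -> List[str]:
    """Return problems found in a SAS token's query parameters (empty = none found).

    Assumption: `needed` defaults to read (r) + list (l) for loading blobs
    from a container. The signature (`sig`) is NOT verified here.
    """
    # parse_qs URL-decodes values, e.g. %3A -> ':' in the expiry time
    params = {key: values[0] for key, values in parse_qs(token.lstrip("?")).items()}
    now = now or datetime.now(timezone.utc)
    problems = []

    expiry = params.get("se")
    if expiry is None:
        problems.append("missing expiry (se)")
    else:
        # `se` is a UTC ISO 8601 time such as 2026-01-01T00:00:00Z;
        # replace the trailing Z so older Python versions can parse it
        expires_at = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires_at.tzinfo is None:  # values without an offset are treated as UTC
            expires_at = expires_at.replace(tzinfo=timezone.utc)
        if expires_at <= now:
            problems.append(f"expired at {expiry}")

    granted = params.get("sp", "")
    missing = [perm for perm in needed if perm not in granted]
    if missing:
        problems.append("missing permissions: " + "".join(missing))
    return problems


# Placeholder token: values are illustrative, the signature is redacted
token = "?sv=2022-11-02&sr=c&sp=r&se=2020-01-01T00:00:00Z&sig=REDACTED"
print(check_sas(token))
# ['expired at 2020-01-01T00:00:00Z', 'missing permissions: l']
```

An empty result only means no obvious problem in the parameters; it does not rule out the scoping issue described earlier in this thread.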


If it does not help, please provide more details.

Best Regards

Community Support Team _ Rongtie
