
Update MySQL dataset with only additional rows (not reload the entire thing)

This is on Power BI Desktop.

I have 40 million rows (and counting) in a MySQL database, and one column is a JSON package that has to be parsed. Parsing isn't a problem, but loading takes a while: about 2 minutes per million records, so about 1.5 hours now, and it keeps growing.

I'd like to add only the rows that are new, but I can't figure out how to load just the rows with id > 40000000 without getting rid of all the rows with id < 40m. Is there a way to do this?

I'm pondering a workaround: add separate queries for each month (or chunk of id numbers) and then associate them. But I fear I'll have to work harder to get it all working as one dataset, like it should.

Can I do this easily, and if not, is there a hard but effective way?


There are some suggestions for you.

a. Write specific SQL query to import required columns and rows from MySQL database to Power BI Desktop.

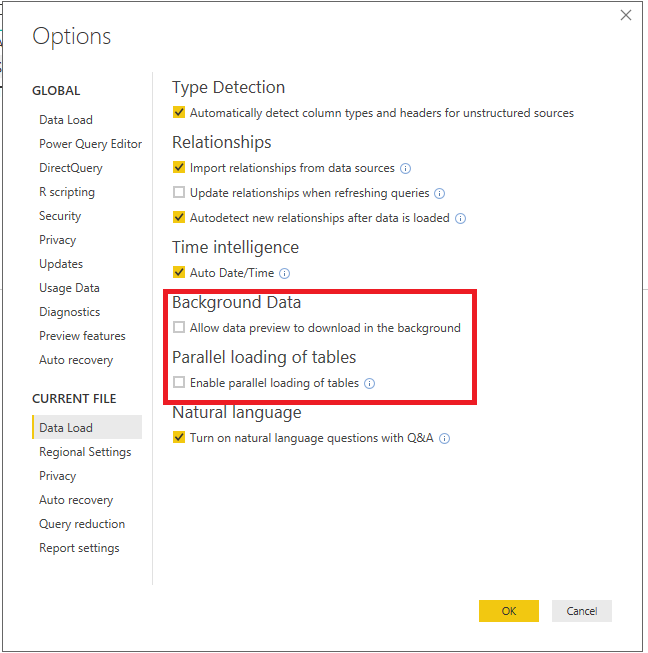

b. During the import process, disable the following highlighted options in Power BI Desktop.

c. Create views for different date time in MySQL database if necessary, and then import the views in Power BI Desktop.
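As a rough illustration of suggestion (a), here is a minimal sketch of a query that fetches only rows above a known id and parses the JSON column. It uses Python's built-in `sqlite3` as a stand-in for MySQL so it can run anywhere. The table name `events`, the column name `payload`, and the helper `fetch_new_rows` are all hypothetical and not taken from the original post.

```python
import json
import sqlite3


def fetch_new_rows(conn, last_id, batch_size=1000):
    """Return (id, parsed_payload) pairs for rows with id > last_id, in id order."""
    cur = conn.execute(
        "SELECT id, payload FROM events WHERE id > ? ORDER BY id LIMIT ?",
        (last_id, batch_size),
    )
    # Parse the JSON text only for the rows that were actually fetched.
    return [(row_id, json.loads(payload)) for row_id, payload in cur]


# Stand-in data: an in-memory SQLite table instead of the real MySQL table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany(
    "INSERT INTO events (id, payload) VALUES (?, ?)",
    [(i, json.dumps({"value": i * 10})) for i in range(1, 6)],
)

# Pretend rows 1..3 were loaded earlier; only rows 4 and 5 come back.
new_rows = fetch_new_rows(conn, last_id=3)
print(new_rows)  # [(4, {'value': 40}), (5, {'value': 50})]
```

The same `WHERE id > ...` filter could be written as the SQL statement for the MySQL source. Note that this only narrows what a single query fetches; by itself it does not append to rows that were loaded earlier.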

Regards,

Lydia
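For suggestion (c), one possible shape is a view per month, each exposing only the rows in that date range. The sketch below again uses Python's `sqlite3` as a stand-in for MySQL, and the exact `CREATE VIEW` syntax may differ slightly there. The `events` table, the `created_at` column, and the `events_YYYY_MM` naming scheme are assumptions made for illustration.

```python
import sqlite3


def create_month_view(conn, year, month):
    """Create a view over rows whose created_at falls in the given month."""
    year, month = int(year), int(month)  # only integers reach the SQL text
    next_year, next_month = (year + 1, 1) if month == 12 else (year, month + 1)
    start = f"{year:04d}-{month:02d}-01"
    end = f"{next_year:04d}-{next_month:02d}-01"
    name = f"events_{year:04d}_{month:02d}"
    # View definitions cannot take bound parameters, so the bounds are inlined.
    conn.execute(
        f"CREATE VIEW IF NOT EXISTS {name} AS "
        f"SELECT id, payload, created_at FROM events "
        f"WHERE created_at >= '{start}' AND created_at < '{end}'"
    )
    return name


# Stand-in data with ISO-formatted dates, which compare correctly as text.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT, created_at TEXT)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "{}", "2024-01-15"),
        (2, "{}", "2024-01-31"),
        (3, "{}", "2024-02-01"),
        (4, "{}", "2024-12-20"),
    ],
)

jan = create_month_view(conn, 2024, 1)
dec = create_month_view(conn, 2024, 12)
jan_ids = [r[0] for r in conn.execute(f"SELECT id FROM {jan} ORDER BY id")]
dec_ids = [r[0] for r in conn.execute(f"SELECT id FROM {dec} ORDER BY id")]
print(jan, jan_ids)  # events_2024_01 [1, 2]
print(dec, dec_ids)  # events_2024_12 [4]
```

Each view could then be imported as its own query, so only the view covering the most recent period would need to pick up new rows.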
