Join us at the 2025 Microsoft Fabric Community Conference

March 31 - April 2, 2025, in Las Vegas, Nevada. Use code MSCUST for a $150 discount! Early bird discount ends December 31.

Register Now- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Community Connections & How-To Videos

- COVID-19 Data Stories Gallery

- Themes Gallery

- Data Stories Gallery

- R Script Showcase

- Webinars and Video Gallery

- Quick Measures Gallery

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Events

- Ideas

- Custom Visuals Ideas

- Issues

- Issues

- Events

- Upcoming Events

Be one of the first to start using Fabric Databases. View on-demand sessions with database experts and the Microsoft product team to learn just how easy it is to get started. Watch now

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Power Query to Match Bank vs OR amounts within...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Power Query to Match Bank vs OR amounts within each Branch

Hello Everyone,

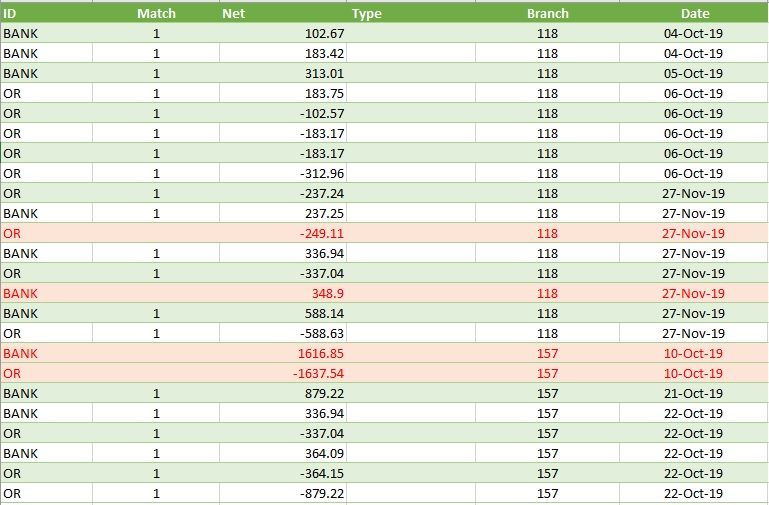

I have a situation where I am trying to use Power Query to match a list of data, see table below.

We have Columns ID, Match, Net, Branch & Dates which are important in this process:

Bank has Positive Numbers in Net Column

OR has Negative Numbers in Net Column

I would like to make a one to one match of Bank Numbers against OR Numbers and populate in Column B Match with value 1 for all those amounts matching.

If the numbers match exactly with each other that's fantastic, but in general it is not the case,

and I am prepared to allow a tolerance limit for the difference from minus 2 to plus 2.

So if the compared amounts throw out a difference within this range it can be assumed they are matched.

Some Rules

the matching should be between Bank vs OR on absolute values eg plus 100 against minus 100 assumed matched

numbers matching within each branch not against another branch number

for the moment we can leave out date criteria

| ID | Match | Net | Type | Branch | Date |

| BANK | 102.67 | 118 | 04-Oct-19 | ||

| BANK | 183.42 | 118 | 04-Oct-19 | ||

| BANK | 313.01 | 118 | 05-Oct-19 | ||

| OR | 183.75 | 118 | 06-Oct-19 | ||

| OR | -102.57 | 118 | 06-Oct-19 | ||

| OR | -183.17 | 118 | 06-Oct-19 | ||

| OR | -183.17 | 118 | 06-Oct-19 | ||

| OR | -312.96 | 118 | 06-Oct-19 | ||

| OR | -237.24 | 118 | 27-Nov-19 | ||

| BANK | 237.25 | 118 | 27-Nov-19 | ||

| OR | -249.11 | 118 | 27-Nov-19 | ||

| BANK | 336.94 | 118 | 27-Nov-19 | ||

| OR | -337.04 | 118 | 27-Nov-19 | ||

| BANK | 348.9 | 118 | 27-Nov-19 | ||

| BANK | 588.14 | 118 | 27-Nov-19 | ||

| OR | -588.63 | 118 | 27-Nov-19 | ||

| BANK | 1616.85 | 157 | 10-Oct-19 | ||

| OR | -1637.54 | 157 | 10-Oct-19 | ||

| BANK | 879.22 | 157 | 21-Oct-19 | ||

| BANK | 336.94 | 157 | 22-Oct-19 | ||

| OR | -337.04 | 157 | 22-Oct-19 | ||

| BANK | 364.09 | 157 | 22-Oct-19 | ||

| OR | -364.15 | 157 | 22-Oct-19 | ||

| OR | -879.22 | 157 | 22-Oct-19 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @mbuhary

Please see the attached file with a solution.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rdLBDsIgDAbgVzE7j4a2UOCoV5Mt8brs5AN4MT6/bE4nSlYPXggkX/7SwjA0h313bNoGLYGEvNlNB4x5tc7056vB1Izt6iKDI90xMlgsnX93/WlJC75U8qXMdDkfdJbT8G+MkSCJyogDkCsYBdNdbh8DmZ3fcEucS4CoxzELpK2ySxO5qv3hduwiJJ35GAH1qhMT1uNQUCC+hjI/MdrKi0luw7sNtwTGkICocIS131lM7+Go8gWK6VXYM04c2KTHZYZeZbUmVjbeAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [ID = _t, #"Net " = _t, #" Type" = _t, Branch = _t, #" Date" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", type text}, {"Net ", type number}, {" Type", type text}, {"Branch", Int64.Type}, {" Date", type date}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"ID", "Branch"}, {{"tbl", each _, type table [ID=text, #"Net "=number, #" Type"=text, Branch=number, #" Date"=date]}, {"sum", each List.Sum([#"Net "]), type number}}),

#"Grouped Rows1" = Table.Group(#"Grouped Rows", {"Branch"}, {{"tbl", each _, type table [ID=text, Branch=number, tbl=table, sum=number]}, {"sum", each List.Sum([sum]), type number}}),

#"Added Custom" = Table.AddColumn(#"Grouped Rows1", "Match", each if [sum] <= 100 and [sum] >= -100 then 1 else 0),

#"Changed Type1" = Table.TransformColumnTypes(#"Added Custom",{{"Match", Int64.Type}}),

#"Removed Other Columns" = Table.SelectColumns(#"Changed Type1",{"Branch", "tbl", "Match"}),

#"Expanded tbl" = Table.ExpandTableColumn(#"Removed Other Columns", "tbl", {"ID", "tbl"}, {"ID", "tbl.1"}),

#"Expanded tbl.1" = Table.ExpandTableColumn(#"Expanded tbl", "tbl.1", {"Net ", " Type", " Date"}, {"Net ", " Type", " Date"}),

#"Changed Type2" = Table.TransformColumnTypes(#"Expanded tbl.1",{{" Date", type date}, {"Net ", type number}})

in

#"Changed Type2"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In your query you are allowing a difference of up to +/- 100 by grouped sum by Branch. That does't give us the required accuracy level at each distinct amount.

That is why I mentioned in my post that at each match the difference can only go up to + / - 2.

What we expect from the posted data is similar to the attached picture, we are left with four rows highlighted in RED not matched:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @mbuhary

Try This

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rdLBDsIgDAbgVzE7j4a2UOCoV5Mt8brs5AN4MT6/bE4nSlYPXggkX/7SwjA0h313bNoGLYGEvNlNB4x5tc7056vB1Izt6iKDI90xMlgsnX93/WlJC75U8qXMdDkfdJbT8G+MkSCJyogDkCsYBdNdbh8DmZ3fcEucS4CoxzELpK2ySxO5qv3hduwiJJ35GAH1qhMT1uNQUCC+hjI/MdrKi0luw7sNtwTGkICocIS131lM7+Go8gWK6VXYM04c2KTHZYZeZbUmVjbeAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [ID = _t, Net = _t, Type = _t, Branch = _t, Date = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", type text}, {"Net", type number}, {"Type", type text}, {"Branch", Int64.Type}, {"Date", type date}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"ID", "Branch"}, {{"_tbl", each _, type table [ID=text, Net=number, Type=text, Branch=number, Date=date]}}),

#"Pivoted Column" = Table.Pivot(#"Grouped Rows", List.Distinct(#"Grouped Rows"[ID]), "ID", "_tbl"),

#"Renamed Columns1" = Table.RenameColumns(#"Pivoted Column",{{"BANK", "M"}, {"OR", "Records"}}),

#"Renamed Columns2" = Table.RenameColumns(#"Pivoted Column",{{"BANK", "Records"}, {"OR", "M"}}),

#"Appended Query" = Table.Combine({#"Renamed Columns1", #"Renamed Columns2"}),

#"Added Custom" = Table.AddColumn(#"Appended Query", "MM", each ( [M] )[Net], type list),

#"Removed Other Columns" = Table.SelectColumns(#"Added Custom",{"Branch", "Records", "MM"}),

#"Expanded Records" = Table.ExpandTableColumn(#"Removed Other Columns", "Records", {"ID", "Net", "Type", "Date"}, {"ID", "Net", "Type", "Date"}),

#"Added Custom1" = Table.AddColumn(#"Expanded Records", "Match", each List.Count( List.Select( [MM], (c) => let c1 = c * -1 in c1 -2 <= [Net] and c1 +2 >= [Net] )) > 0 ),

#"Changed Type1" = Table.TransformColumnTypes(#"Added Custom1",{{"Match", Int64.Type}}),

#"Removed Columns" = Table.RemoveColumns(#"Changed Type1",{"MM"})

in

#"Removed Columns"

Mariusz

If this post helps, then please consider Accepting it as the solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

sorry my reply got deleted

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @mbuhary

Very sorry, I'm struggling to understand the requirement, can you explain why this 4 not matched?

Is there any rule to how you match Bank and Or?

Thanks

Mariusz

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I will try to explain more.

If you see first amount in Red -249.11

-there is no exact match of a positive amount (or Bank amount) within the same branch (118);

- also there isn't any other positive amount (or Bank amount) within the same branch (118) which would come as close as it would be only differ by plus or minus 2.

An exact match is like for example in Branch 118 if we had Bank & Net 25.5 matched against OR & Net -25.5.

But in the data sheet we dont have this situation. All amounts come close but doesn't match to the 2nd decimal point.

To give you a an of examples where we matched with allowing our threshold for difference (+/- 2) is you can look at:

data row 1 +102.67 & 5 -102.57 (1 is "Bank" & 5 is "OR" while both are within same branch (118) and difference of the sum of both is within +/- 2 ( 102.57 - 102.67 = -0.10).

I hope its cleat enough.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @mbuhary

and what should happen when a row can match 2 items form the table? BANK: 102 / OR: 101 OR:102?

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We can choose the most close one if possible.

Meaning which could give the smallest difference.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @mbuhary

check out this solution. It gives you every row that is within the threshold of +/-2.

Basically I select only the BANK-rows on add a column where do I select all OR-rows that are within your threshold.

You think this could suit your needs?

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rdLBDsIgDAbgVzE7j4a2UOCoV5Mt8brs5AN4MT6/bE4nSlYPXggkX/7SwjA0h313bNoGLYGEvNlNB4x5tc7056vB1Izt6iKDI90xMlgsnX93/WlJC75U8qXMdDkfdJbT8G+MkSCJyogDkCsYBdNdbh8DmZ3fcEucS4CoxzELpK2ySxO5qv3hduwiJJ35GAH1qhMT1uNQUCC+hjI/MdrKi0luw7sNtwTGkICocIS131lM7+Go8gWK6VXYM04c2KTHZYZeZbUmVjbeAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [ID = _t, #"Net " = _t, #" Type" = _t, Branch = _t, #" Date" = _t]),

#"Geänderter Typ" = Table.TransformColumns(Source,{{"Net ",each Number.Abs(Number.From(_,"en-US")), type number}}),

OrTable = Table.SelectRows(#"Geänderter Typ", each [ID]="OR"),

BankTable = Table.SelectRows(#"Geänderter Typ", each [ID]="BANK"),

AddColumn = Table.AddColumn

(

BankTable,

"Or-table",

(add)=>

Table.SelectRows

(

OrTable,

(select)=>

select[#"Net "]>= add[#"Net "]-2 and

select[#"Net "]<= add[#"Net "] + 2 and

select[Branch]= add[Branch]

)

)

in

AddColumnCopy paste this code to the advanced editor to see how the solution works

If this post helps or solves your problem, please mark it as solution.

Kudos are nice to - thanks

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It gives me the base for some idea.

Let me try to work more on your ways.

We might see some light at the end of the Tunnel : )

I will keep you posted !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I managed to proceed with your codes to a certain extent.

We are missing something here very important. "One to One" & should not be "ONE to MANY"

Please see the below table output from your query (I have Filtered Branch 118 only)

BANK has 6 rows as matched and OR has 7 rows as matched. The expectation is it should be equal a one to one match. Once an amount is matched with another (Bank & OR) they cannot be used to match another. I think this is the hardest part of this process.

| ID | Match | Net | Type | Branch | Date |

| BANK | 1 | 102.67 | 118 | 04-Oct-19 | |

| BANK | 1 | 183.42 | 118 | 04-Oct-19 | |

| BANK | 1 | 313.01 | 118 | 05-Oct-19 | |

| OR | 0 | 183.75 | 118 | 06-Oct-19 | |

| OR | 1 | -102.57 | 118 | 06-Oct-19 | |

| OR | 1 | -183.17 | 118 | 06-Oct-19 | |

| OR | 1 | -183.17 | 118 | 06-Oct-19 | |

| OR | 1 | -312.96 | 118 | 06-Oct-19 | |

| OR | 1 | -237.24 | 118 | 27-Nov-19 | |

| BANK | 1 | 237.25 | 118 | 27-Nov-19 | |

| OR | 0 | -249.11 | 118 | 27-Nov-19 | |

| BANK | 1 | 336.94 | 118 | 27-Nov-19 | |

| OR | 1 | -337.04 | 118 | 27-Nov-19 | |

| BANK | 0 | 348.9 | 118 | 27-Nov-19 | |

| BANK | 1 | 588.14 | 118 | 27-Nov-19 | |

| OR | 1 | -588.63 | 118 | 27-Nov-19 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @mbuhary

this is what i meant when I asked you about what happens when 2 rows are found. Keep only one and keep the other one for matching to other...

but in such a scenario it all would depend what row is processed first... so depending on your sort order there would be different outcomes. I don't think that would in your interested. The solution I provided is a kind of fuzzy join where a row of table B can suit multiple (or none) rows from table A.

For the result your are asking for I have no solution right away and this behavior was not specified in your initial request.

How important is this for you having this? Is this a one time shot or a daily process? How big is your database?

If this post helps or solves your problem, please mark it as solution.

Kudos are nice to - thanks

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am sorry if I have not made it clear enough earlier.

But the one to one match is very important for us.

And the data is processed at the moment manually on a monthly basis.

Each month's data can accumulate up to 20,000 to 30,000 rows on average.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much. The codes are working fine in the test file.

Now I am trying to apply the same in my live data. I am facing couple of issues:

1. In some rows I have Branch Name as Text or Empty fields, so I changed the column data type to Text and replaced the Empty fields with "Blanks". I hoep this doesn't affect any of the steps to follow.

2. There are some times the Pivoted Column Tables are null, so it returns an error in the later step where we try to list each ( [M] )[Net ], type list. So I tried to embed an if statement before this to execute if RECORDS<>null and M<>null still I get an error. I am sure I am doing something wrong here.

Please advise. If i can attach my file here it would be best, But I dont know how to do it : (

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @mbuhary,

the blank-rows shouldn't be of any problem. In my solution I filter for Blanks and ORs and try to match the ORs to the blank (so no full outer join!!).

About your second question.. pivoting was never part of the question. So I would suggest to mark the post as solution that is working for you and create for other question a new topic.

It would also always help if you try to explain your whole problem / goal at the beginning, what exactly you want to achieve. Otherwise we are proposing a micro step help without nowing what the real goal and then we get stuck on every step. 🙂 This only as general advice.

If this post helps or solves your problem, please mark it as solution.

Kudos are nice to - thanks

Have fun

Jimmy

Helpful resources

Join us at the Microsoft Fabric Community Conference

March 31 - April 2, 2025, in Las Vegas, Nevada. Use code MSCUST for a $150 discount!

We want your feedback!

Your insights matter. That’s why we created a quick survey to learn about your experience finding answers to technical questions.

Microsoft Fabric Community Conference 2025

Arun Ulag shares exciting details about the Microsoft Fabric Conference 2025, which will be held in Las Vegas, NV.