Performance issue in List.Contains Step - 30 minutes for 500 records

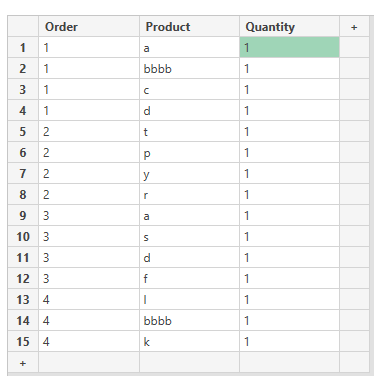

In a previous post I asked how to get all the records of orders that include a specific product.

Example:

Expected result if I'm looking for orders that include 'bbbb'

Thanks to @AlienSx for the answer.

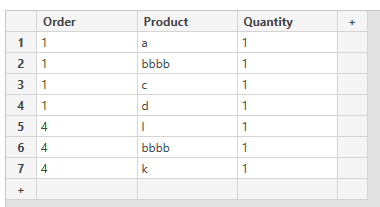

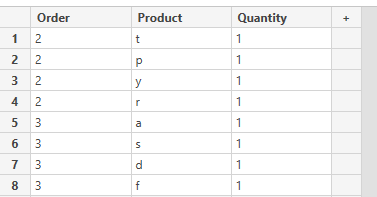

But then I tried to filter the whole table to get the records of orders that don't include the product 'bbbb'. This is the expected result

and I achieved this with the following code:

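    let
        // "table" stands for the source query
        Source = table,
        // Orders that contain the unwanted product
        ordersToExclude = Table.SelectRows(Source, (x) => x[Product] = "bbbb")[Order],
        // Every order number (values starting with "#")
        allOrders = Table.SelectRows(Source, (x) => Text.Start(x[Order], 1) = "#")[Order],
        // M is case-sensitive, so step names must match exactly
        ordersIwant = List.RemoveItems(allOrders, ordersToExclude),
        removeDuplicates = List.Distinct(ordersIwant),
        // Keep only the rows whose order is in the list of wanted orders
        finalTable = Table.SelectRows(Source, (x) => List.Contains(removeDuplicates, x[Order]))
    in
        finalTable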

The problem with this code is that it takes a lot of time and resources to execute. I ran it on a file that has only 515 rows, and it took more than 30 minutes and almost 20 GB of memory (not sure which memory). Insane.

My guess is that there is something like a big loop in the finalTable step. That's why I added the remove-duplicates step, but it still takes a lot of time.

With my real data of 515 rows, after removing duplicates there are only about 90 unique orders without the 'bbbb' product.

So my questions are:

- Is my assumption about the loop correct, and is that why it takes so long, or is something else happening?

- Do you see another possible solution?

Hello, @luigiPulido_, my 2c. While the suggestion made by @Anonymous should definitely boost performance, I would also try an alternative way to filter orders using Table.Group. Group your data by [Order] and create 2 aggregated columns:

1. all order items as {"all", each _}

2. logical test if products list contains unwanted item as {"test", each List.Contains(_[Product], "bbbb")}

Then filter on test (keep the rows where it is false) and expand all.
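The grouping steps above could be sketched roughly as follows. The sample table, its values and the step names (Grouped, Kept, Result) are made up for illustration, and the column types are assumed to be text; only Table.Group, the two aggregated columns and the filter-then-expand order come from the suggestion itself.

```powerquery
let
    // Hypothetical sample data standing in for the real table
    Source = #table(
        type table [Order = text, Product = text],
        {
            {"#1", "aaaa"}, {"#1", "bbbb"},
            {"#2", "aaaa"}, {"#2", "cccc"},
            {"#3", "bbbb"}
        }
    ),
    // One row per order: "all" keeps the order's rows,
    // "test" is true when the order contains the unwanted product
    Grouped = Table.Group(
        Source,
        {"Order"},
        {
            {"all", each _, type table [Order = text, Product = text]},
            {"test", each List.Contains(_[Product], "bbbb"), type logical}
        }
    ),
    // Keep only the orders that do not contain the product
    Kept = Table.SelectRows(Grouped, each not [test]),
    // Drop the helper columns, then expand the nested tables back into rows
    Result = Table.ExpandTableColumn(
        Table.SelectColumns(Kept, {"all"}),
        "all",
        {"Order", "Product"}
    )
in
    Result
```

With this sample data only the two rows of order #2 remain, because #1 and #3 both contain 'bbbb'. Each order is tested once inside its own group, so no row-by-row lookup against a separate list is needed.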

Buffer those List.Contains, lad. Always buffer your List.Contains lists.

each List.Contains(List.Buffer(removeDuplicates), [Order])

You won't believe the difference.

--Nate
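As a minimal sketch of the buffering advice applied to the original query: the version below buffers the lookup list once, in its own step, and then reuses it in the row filter. The sample data is hypothetical, and taking allOrders from the distinct values of [Order] is a simplification of the original "#" prefix filter; how much time this saves on the real data is not something the sketch can show.

```powerquery
let
    // Hypothetical sample data standing in for the real table
    Source = #table(
        type table [Order = text, Product = text],
        {
            {"#1", "aaaa"}, {"#1", "bbbb"},
            {"#2", "aaaa"}, {"#2", "cccc"},
            {"#3", "bbbb"}
        }
    ),
    // Orders that contain the unwanted product
    ordersToExclude = Table.SelectRows(Source, each [Product] = "bbbb")[Order],
    // Every distinct order number (simplified from the original prefix filter)
    allOrders = List.Distinct(Source[Order]),
    // Buffer the lookup list once so the row filter below works against
    // an in-memory copy instead of re-evaluating the upstream steps
    ordersIWant = List.Buffer(List.RemoveItems(allOrders, ordersToExclude)),
    // Row filter against the buffered list
    finalTable = Table.SelectRows(Source, each List.Contains(ordersIWant, [Order]))
in
    finalTable
```

Putting List.Buffer in a separate step, rather than inside the each function, is a deliberate choice here: it makes it explicit that the list is materialized once rather than as part of every row test.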

Thanks very much, it works well for me!
