Join the Fabric User Panel to shape the future of Fabric.

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

Sign up now- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric certified for FREE! Don't miss your chance! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Help, about setting up data.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Help, about setting up data.

Hello everyone,

I'm pretty new at PBI, so basically what I need help for is setting up my data so i can do scheduled refresh without problem.

I have a lot of rows 4-5 mil. I'm pulling some of them from postgre sql database. So what I'm doing is making a csv file (an arhive) straight from data base, for example from 1st of Jan to 31st of May, and then import from 1st of June straight from database.

So that's 2 same tables with same col. I'm doing this becouse i don't want to put too much on my database for refresh.

After that, I would appenend those 2 query's in one, so i have everything in one place (it's easier for measures and visual etc..)

So now i have for example 3 mil rows in archive file(csv), 2mil straight from database(getting larger every day after refresh) and then when I append its 5 more mil of rows.

Some tables cannot auto refresh(scheduled refresh) becouse it either too much, or timeout. I dont know. Becouse every time it refreshes all transactions 3 queryes. When i need only for example last 3 days to be refreshed, becouse everything else is the same.

Does anyone have sugestions, what should I do? And should I set up my data loads different? Or incremental refresh(which i didnt manage to set up in first place)

Thanks in advanced!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is no need to use separate tables. Have a single table, and partition it according to your data volume (20M rows per partition is a good rule of thumb). The maximum partition range is years, the minimum is days.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

But if i get data from 2 tables. As i do, What then?

I should put incremental on append table?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is no point in using append and incremental refresh at the same time. By appending you are emulating the partitions that are used for incremental refresh. Do one or the other but not both together.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks everyone,

but i'm still kinda stuck.

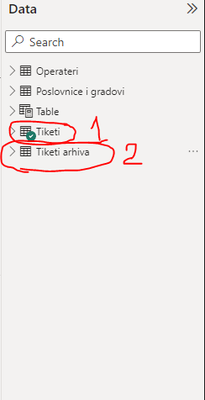

So this is my data. "Tiketi arhiva " (2) is archive from 01-Jan 2023 until 01-June 2023

And "Tiketi" (1) is postgresql Import from 1-June 2023 until today. Then I append 2 in 1.

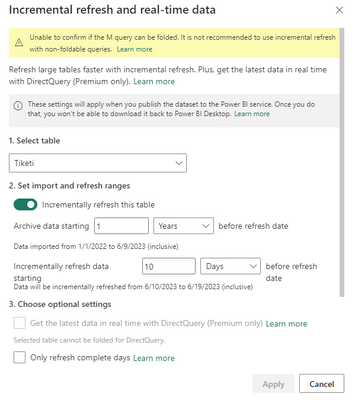

What should my RangeStart and RangeEnd be? What dates?

On which i should put incremental refresh after that? On both or only one? I put on both and now my refresh time is 2x slower then without incremental.

And one last thing. My incremental setup looks like this. Is it alright? Or I should set it up different? This is causing me a lot of problems. Becouse without incremental i have a timeout in refresh on few datasets. And would really love to solve this with your help.

Thank you guys !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does the Power Query for your "Tiketi" table have a RangeStart/RangeEnd filter? Which field is that filter based on?

Can you show the Power Query code?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's what I'm asking, should my filters and incremental refresh be on "Tiketi" or "Tiketi arhiva"? And what dates should I put in incremental refresh? Based on the data I said are stored in tables?

Thank you

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, that is exactly what i'm doing.

I have 5-6 csv files in same sharepoint, one as sample file and the rest of them load based on sample file.

So now, if I understood correctly i have to set up parameters for date filters. Which is going to be what? If you can help me?

My data starts from 01-Jan-2023, and in csv files are transactions until 01-Jun-2023. From 1st of June it goes true postgre sql import.

So it's 2 querys one from sharepoint and one from postgre. And I appended that in one query.

Basically I need last 10-15 days to be refreshed when i schedule refresh in the night and the rest remains the same.

I need to set up incremental only on Appened table is that correct?

Thank you

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

one as sample file and the rest of them load based on sample file. Don't do that, the generic process is inefficient. Create your own process via Table.AddColumn and with a fixed list of column Headers.

Partitions in Power BI are controlled by datetime fields RangeStart and RangeEnd. You need to find a data point that is usable for this, for example the file names. You can't use the File Modified date as that can change.

So let's say your file is named "Data 01-Jan-2023.csv" (which is a horrible name, by the way - much better to use ISO8601 format). In your Power Query you need to have steps that convert that to 2023-01-01T00:00:00.00 for RangeStart and 2023-02-01T00:00:00.00 for RangeEnd (for example).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Please see this video for the incremental refresh from files part.

https://www.youtube.com/watch?v=IVMdg16yBKE

Pat

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, sounds like incremental refresh is the better option here. It would eliminate your intermediate step with the CSV files.

You can also emulate incremental refresh by creating your own "partitions" with the CSV files. As long as all CSV files are in the same sharepoint/Onedrive folder and have the same structure you can write Power Query code that automatically appends them all into a single data source.

Helpful resources

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 7 | |

| 5 | |

| 4 | |

| 4 | |

| 3 |

| User | Count |

|---|---|

| 13 | |

| 11 | |

| 11 | |

| 7 | |

| 6 |