Party with Power BI’s own Guy in a Cube

Power BI is turning 10! Tune in for a special live episode on July 24 with behind-the-scenes stories, product evolution highlights, and a sneak peek at what’s in store for the future.

Save the date- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Webinars and Video Gallery

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- R Script Showcase

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Enhance your career with this limited time 50% discount on Fabric and Power BI exams. Ends August 31st. Request your voucher.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Extracting elapsed time from multiple related reco...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Extracting elapsed time from multiple related records in a table using Power Query

I wish to do this as part of a data transformation, the data will be consumed in Power BI but will also be consumed directly in other platforms and thus I want to avoid any transformation in Power BI

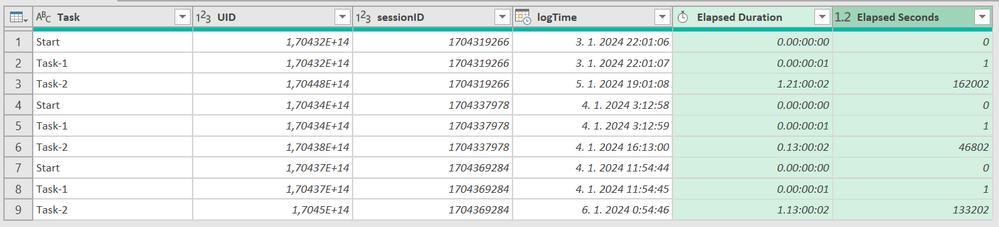

I have a raw data coming from an external system that captures information about a set of events for multiple objects. The intent is to add column to the table to faclitate profiling. Here is an example snippet of the table:

| Task | UID | sessionID | logTime |

| Start | 170431926600021 | 1704319266 | 2024-01-03 22:01:06 |

| Task-1 | 170431926700021 | 1704319266 | 2024-01-03 22:01:07 |

| Start | 170433797800098 | 1704337978 | 2024-01-04 03:12:58 |

| Task-1 | 170433797900098 | 1704337978 | 2024-01-04 03:12:59 |

| Start | 170436928400009 | 1704369284 | 2024-01-04 11:54:44 |

| Task-1 | 170436928500009 | 1704369284 | 2024-01-04 11:54:45 |

| Task-2 | 170438477900098 | 1704337978 | 2024-01-04 16:13:00 |

| Task-2 | 170448126700021 | 1704319266 | 2024-01-05 19:01:08 |

| Task-2 | 170450248500009 | 1704369284 | 2024-01-06 00:54:46 |

To help profiling I want to add two columns:

- Elapsed time in seconds for the specific task (the time difference in seconds between the logTime of the current task and the logTime of the next task if it exists, otherwise null)

- Elapsed time in seconds from the very start (the first task is always Start), 0 for the fisrt task.

The sessionID is what groups related items together, the logTime orders the items correctly. Tasks can repeat, Task 2 can send back to Task 1, there is only one Start task for the related group.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

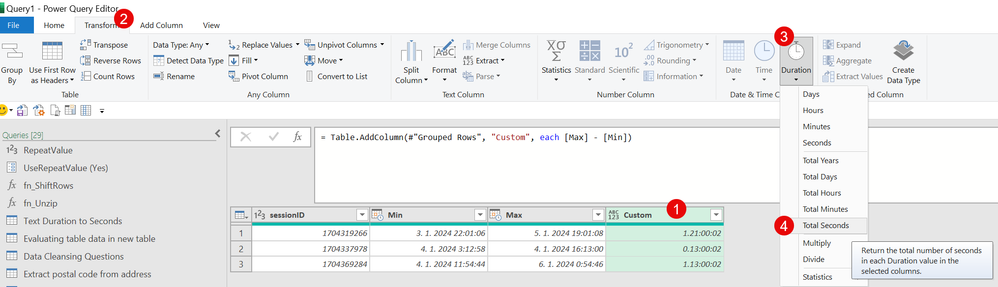

Hi @situ30144, like this? (Elapsed seconds for each task from Start).

Result

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZGxCsMwDER/xXhu4CTLtqTfaLeQIXO3Nv9PnUBpTQLJ6OM97pDHMd6X+bXEW6QKSWRcCgCmLmkPBssAGpACs4McJU63MT7m93Po6HrRr5vf1adqVZtu2iX/ugQkJ/asB/UrbRd929cXYxWsfpf0OpFncZGD+pXOF/388/lLq9Tz+VSckgN7X5ROr58D2XZ93fu5QWf7SwC2/e33pw8=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Task = _t, UID = _t, sessionID = _t, logTime = _t]),

ChangedType = Table.TransformColumnTypes(Source,{{"Task", type text}, {"UID", Int64.Type}, {"sessionID", Int64.Type}, {"logTime", type datetime}}),

GroupedRows = Table.Group(ChangedType, {"sessionID"}, {{"All", each

[ a = List.Min([logTime]),

b = Table.AddColumn(_, "Elapsed Duration", (x)=> x[logTime] - a, type duration)

][b], type table}}),

CombinedAll = Table.Combine(GroupedRows[All]),

Ad_ElapsedSeconds = Table.AddColumn(CombinedAll, "Elapsed Seconds", each Duration.TotalSeconds([Elapsed Duration]), type number)

in

Ad_ElapsedSeconds

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why not just move the session id, followed by user id, and then pivot the remaining columns, using the log times as the values? Then you can just do the row math.

--Nate

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@situ30144 Maybe something like this:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZGxCsMwDER/xXhu4CTLtqTfaLeQIXO3Nv9PnUBpTQLJ6OM97pDHMd6X+bXEW6QKSWRcCgCmLmkPBssAGpACs4McJU63MT7m93Po6HrRr5vf1adqVZtu2iX/ugQkJ/asB/UrbRd929cXYxWsfpf0OpFncZGD+pXOF/388/lLq9Tz+VSckgN7X5ROr58D2XZ93fu5QWf7SwC2/e33pw8=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Task = _t, UID = _t, sessionID = _t, logTime = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Task", type text}, {"UID", Int64.Type}, {"sessionID", Int64.Type}, {"logTime", type datetime}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"sessionID"}, {{"Min", each List.Min([logTime]), type nullable datetime}, {"Max", each List.Max([logTime]), type nullable datetime}}),

#"Added Custom" = Table.AddColumn(#"Grouped Rows", "Custom", each [Max] - [Min])

in

#"Added Custom"In DAX this would be pretty simple: See my article on Mean Time Between Failure (MTBF) which uses EARLIER: http://community.powerbi.com/t5/Community-Blog/Mean-Time-Between-Failure-MTBF-and-Power-BI/ba-p/3395....

The basic pattern is:

Column =

VAR __Current = [Value]

VAR __PreviousDate = MAXX(FILTER('Table','Table'[Date] < EARLIER('Table'[Date])),[Date])

VAR __Previous = MAXX(FILTER('Table',[Date]=__PreviousDate),[Value])

RETURN

( __Current - __Previous ) * 1.

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is awesome... almost what I need. The result you have groups by sessionID. I want avoid grouping so I can also get ealsped time of individuial tasks. The result would be the same number of records as the source with 0 for the elased time of the Start task.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @situ30144, like this? (Elapsed seconds for each task from Start).

Result

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZGxCsMwDER/xXhu4CTLtqTfaLeQIXO3Nv9PnUBpTQLJ6OM97pDHMd6X+bXEW6QKSWRcCgCmLmkPBssAGpACs4McJU63MT7m93Po6HrRr5vf1adqVZtu2iX/ugQkJ/asB/UrbRd929cXYxWsfpf0OpFncZGD+pXOF/388/lLq9Tz+VSckgN7X5ROr58D2XZ93fu5QWf7SwC2/e33pw8=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Task = _t, UID = _t, sessionID = _t, logTime = _t]),

ChangedType = Table.TransformColumnTypes(Source,{{"Task", type text}, {"UID", Int64.Type}, {"sessionID", Int64.Type}, {"logTime", type datetime}}),

GroupedRows = Table.Group(ChangedType, {"sessionID"}, {{"All", each

[ a = List.Min([logTime]),

b = Table.AddColumn(_, "Elapsed Duration", (x)=> x[logTime] - a, type duration)

][b], type table}}),

CombinedAll = Table.Combine(GroupedRows[All]),

Ad_ElapsedSeconds = Table.AddColumn(CombinedAll, "Elapsed Seconds", each Duration.TotalSeconds([Elapsed Duration]), type number)

in

Ad_ElapsedSeconds

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Perfect, exactly what I needed.

Appreciate your help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @situ30144

You wrote you don't want to use PBI to transform the data in the intro Pera again in title you wanna use PQ. It's confusing, can you clarify?

Also, what are you using as Transformation tool? Data Factory or DataFlow? I assume dataFlow as you mentioned PQ

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

Helpful resources

Power BI Monthly Update - July 2025

Check out the July 2025 Power BI update to learn about new features.

Join our Fabric User Panel

This is your chance to engage directly with the engineering team behind Fabric and Power BI. Share your experiences and shape the future.

| User | Count |

|---|---|

| 10 | |

| 7 | |

| 7 | |

| 6 | |

| 6 |