Dataflow Gen2 to Lakehouse fails with data destination

Hi everyone,

I have created a Dataflow Gen2 that builds on an existing Dataflow.

When I do not add a data destination, the load works, and the tables are loaded into the Lakehouse that is created automatically (DataflowsStagingLakehouse).

However, if I add a destination, the load fails.

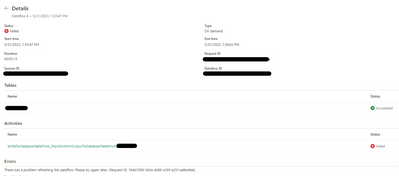

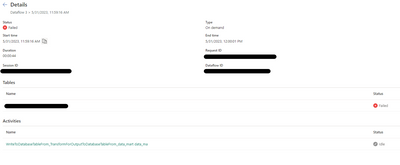

Error message:

null Error: Couldn't refresh the entity because of an issue with the mashup document MashupException.Error: PowerBIEntityNotFound: PowerBIEntityNotFound Details: Error = PowerBIEntityNotFound

Unfortunately, I do not really understand the issue.

Can anyone help?

My actual goal was to load data from an on-premises SQL Server into the Lakehouse.

When I tried that, I ran into an issue similar to the one in this post:

Error Details: Error: Couldn't refresh the entity because of an issue with the mashup document MashupException.Error: We don't support creating directories in Azure Storage unless they are empty. Details: #table({"Content", "Name", "Extension", "Date accessed", "Date modified", "Date created", "Attributes", "Folder Path"},

Any help is appreciated.

Kind regards

Hello,

I was experiencing the same issue, and this post helped me solve the problem.

Changing the data type of the date columns to datetime solved my first problem (the Dataflow Gen2 that builds on a Dataflow). Thanks for the link.

Bazi,

I just tried changing the column to datetime, but no luck here.

I received the usual failure message.

Hi SFDucati,

This is the error message you receive when you try to connect the Dataflow Gen2 to your on-premises data source, right?

Try creating an "old" Dataflow and connecting that one to your on-premises table.

After that, create a Dataflow Gen2 and connect it to your "old" Dataflow.

In the Dataflow Gen2, change the column type of the date columns to datetime and set your Lakehouse as the data destination.

So the path of the data is:

Dataflow - Dataflow Gen2 - Lakehouse

This worked for me.
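
For anyone following along, the type change described above can be sketched in Power Query M. This is only an illustration under assumptions: the inline table and the column names `OrderId` and `OrderDate` are made up, and in a real Dataflow Gen2 the `Source` step would be the query that reads from the "old" Dataflow. `Table.TransformColumnTypes` is the standard M function for changing a column's type.

```
let
    // Hypothetical stand-in for the table coming from the "old" Dataflow
    Source = #table(
        {"OrderId", "OrderDate"},
        {
            {1, #date(2023, 6, 1)},
            {2, #date(2023, 6, 2)}
        }
    ),
    // Convert the date column to datetime before writing to the Lakehouse
    Typed = Table.TransformColumnTypes(Source, {{"OrderDate", type datetime}})
in
    Typed
```

The same change can be made in the editor by selecting the column and picking Date/Time as its data type; the step it generates should look much like the `Typed` line. As the replies below show, this did not resolve the error for everyone.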

Ok,

I tried your suggestion. I created a new Dataflow, not a Dataflow Gen2. Using the same query, it created the table. I saved and published. Then I refreshed it, and it worked. Then I created a new Dataflow Gen2 that accessed the new Dataflow. The same table content was created in the Dataflow Gen2. I then made my Lakehouse the data destination and published.

Then the same error.

I am wondering if I have to delete everything and start over. The only thing that differs from Microsoft's tutorial page is that I am using web sources, while the tutorial uses their sample data on GitHub.

Bazi,

I can try that workaround and see if it works. I am actually connected to web-based sources, not on-premises data. Interestingly, the Dataflow Gen2 Power Query queries are the exact same queries I have been using in the Power Apps Dataflows. Everything was fine there. So when I started the Fabric Trial, I just cut and pasted them into the Dataflow Gen2s. The data appears fine during the online Power Query experience; it's just when I publish that it fails. I worked with Support for about 4 days, and they had no answers. They just said it's a known bug and they are working on a solution. My issue is: why did they not test this before the rollout? And why are they continuing to count down our Trial days when we can't even get it to work?

Unfortunately, I cannot help you in this case.

I guess if Microsoft says it is a known bug, we probably have to wait until they fix it.

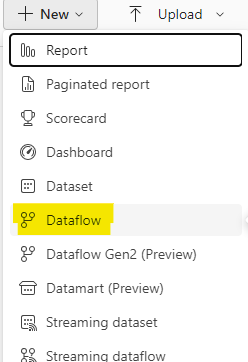

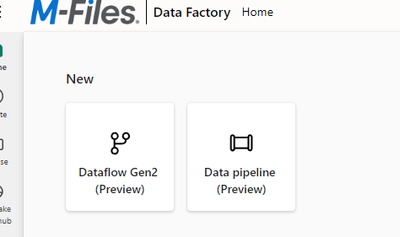

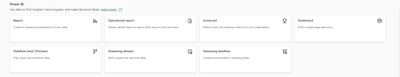

Aghh... This is so confusing. I don't even see an option in Fabric to create something like an "old" Dataflow. Is this a Mapping Data Flow as in Data Factory, or a Power BI Dataflow?

I see these as the options to create new items in the Data Factory area (or whatever it is called) and in the Power BI area. Does anyone else find this layout VERY confusing, or am I just a curmudgeon? Don't answer that. 🙂

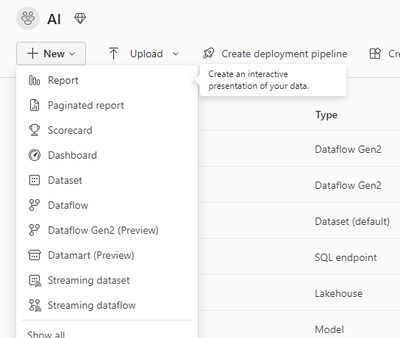

Just open your workspace and click on the plus; there you can see Dataflow and Dataflow Gen2 (Preview).

Dataflow is what I was referring to as the "old" Dataflow.

I agree, it is kind of confusing at the beginning.

I am having the same issue. I opened a ticket with Support and they indicated there is a known bug on Dataflows Gen2 causing it not to load to the Lakehouse. Has anyone solved this issue? I have been waiting since Monday to find a solution.

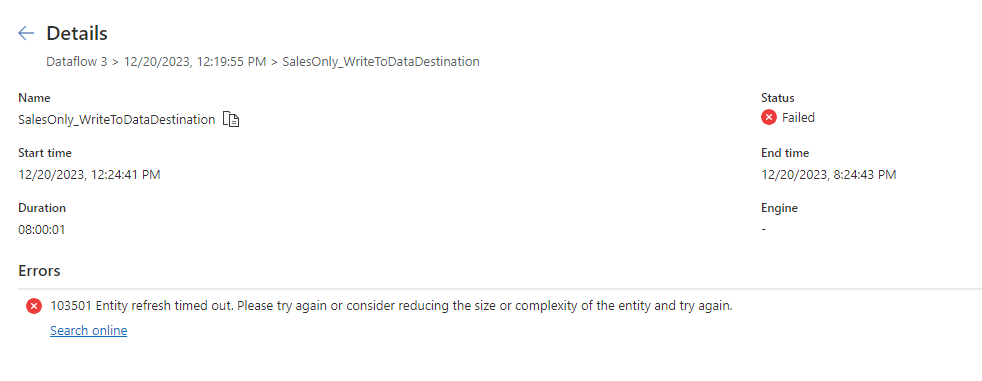

Hi, did you ever solve this? It still appears to be an issue. I have a query that took less than a minute to run, but the WriteToDataDestination step ran for 8 hours before failing.
