Join us at FabCon Vienna from September 15-18, 2025

The ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM.

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Enhance your career with this limited time 50% discount on Fabric and Power BI exams. Ends September 15. Request your voucher.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Workflow best practices - dataflows, and datas...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Workflow best practices - dataflows, and datasets, and RLS, oh my!

Hello Power BI Community!

I don't know that I see many topics here looking for a discussion, but that's what I'm hoping for here. I work for a university that has been trying to adopt Power BI as an enterprise solution. We're going for a mostly self-service model, but we do have some data that we expect to model within IT and deliver to users. If context helps, maybe think about a centralized HR dataset within an IT workspace and we publish a report with RLS to another workspace that others have access to. I have a few questions I'd like to hear others' opinions on:

- Does the above sound like a best practice? As in, dataset in a secure workspace, reports based on that dataset to workspace(s) with more users. If not, what would you do?

- Would you use a dataflow for something like this? As in dataflow, create a dataset off the dataflow, create a report off the dataset. Or would you skip the dataflow step? I tried to take the definitions in this basic concepts article from Microsoft docs into consideration.

- What are some of your workflow best practices? Do you make a new dataset with every report? Do you try to reuse? How do you make sure updates don't break related reports/dashboards/apps? How do you handle documentation/collaboration?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The approach you described makes sense. Creating golden datasets is a good way to achieve a self-service model (see article below). Dataflows are an excellent tool for harvesting and transforming data, and offer numerous advantages including reusability. Keep in mind that not all connectors in PBI Desktop are available in PBI Service, and in some cases the PBI Desktop connectors are more robust than those in PBI Service (e.g., in the PBI Service Snowflake connector, there's no gear icon to edit custom SQL). The workaround is to create the M code in PBI Desktop, copy it, and paste it into a blank query in PBI Service.

It's recommended to do report development in PBI Desktop. You can develop reports in PBI Service (based on a published dataset), but if the dataset gets deleted, reports created in PBI Service become permanently broken (see article below). PBI Service is a great option for ad hoc reports, but enterprise reports should be developed in PBI Desktop.

Row-level security can be implemented in various ways. The article below describes how to implement it dynamically, with a solution to the many-to-many relationship that may exist.

These are great discussion points, and I look forward to hearing from the community. 🙂

https://exceleratorbi.com.au/new-power-bi-reports-golden-dataset/

https://nisorness.com/data/2020/11/19/how-to-prevent-the-deletion-of-datasets-in-power-bi

https://radacad.com/dynamic-row-level-security-with-profiles-and-users-in-power-bi

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello! Thanks for your thoughtful and detailed reply.

The linked article on golden datasets does seem to be the route I would like to adopt. Long-term I think having datasets that users can re-use where we already have the tables modeled correctly and some well-formulated measures premade is a great way to ensure data is reported correctly across departments.

One thing I am struggling with in adopting this model is permissions. Given the size of our university, we could have hundreds to thousands of employees trying to access reports/dashboards/apps based on these underlying datasets. What is the best practice is setting up these permissions? I had thought it would be to:

- Have a workspace housing the golden datasets. The only people with access to this workspace would be our IT data folks creating/edit those.

- Have workspaces for functional teams

- Reports would be created for those functional teams that use the underlying dataset (published across workspaces)

- Users are unable to access these reports because it says they don't have permissions on the underlying dataset

Is the only way to ensure access to reports is to allow access to all? Is there an easy way to do this without opening the workspace up to everyone?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

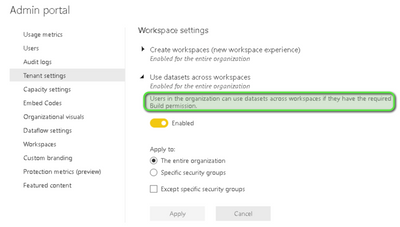

Having a golden workspace and multiple workspaces for functional teams is the approach I use. You'll need to grant Build permission to users so they can access datasets in the golden workspace.

https://docs.microsoft.com/en-US/power-bi/connect-data/service-datasets-build-permissions

Did I answer your question? Mark my post as a solution!

Proud to be a Super User!