
Union two datasets to circumvent Salesforce report 2000 row API

Hi,

I have a Salesforce report that I would like to connect to Power BI, but it has over 25K rows, so the direct Salesforce report API is not an option because its limit is 2K rows. My idea is to download the current report as a CSV, use that as my data source, and then refresh the Salesforce report API connection each day to add the rows created that day to my original dataset. Is there a clever way to do this within Power BI? Thank you


@amitchandak, @Anonymous - Well, I think the easy way to do this would be to import the CSV file once and turn off its refresh (untick "Include in report refresh" for that query). Create another table using the API. Append the two tables and remove duplicates. You could run that until all 2000 API entries are duplicates, and at that point you just update your CSV file.

Not pretty but functional. Could definitely be improved upon but that would at least be a start.

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...
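For anyone who wants to prototype the append-and-remove-duplicates logic outside Power BI, here is a minimal Python sketch (not Power Query M, and not code from the thread). It assumes the report has a unique record ID column, called `Id` here purely for illustration, and that the report is sorted newest first, so the 2,000 rows the API returns are the most recent ones. The helper names `append_and_dedupe`, `overlap_count`, `snapshot_needs_refresh` and `read_rows` are hypothetical. In Power BI itself, the equivalent would be Append Queries followed by Remove Duplicates on the ID column in Power Query.

```python
import csv
import io

# Assumption: each report row has a unique record ID in a column named "Id"
# (hypothetical name; use whatever unique column your report actually has).
KEY = "Id"


def append_and_dedupe(snapshot_rows, api_rows, key=KEY):
    """Append the daily API pull to the static CSV snapshot, dropping duplicates.

    API rows go first, so when a record appears in both sources the fresher
    API copy is kept and the older snapshot copy is skipped.
    """
    seen = set()
    combined = []
    for row in list(api_rows) + list(snapshot_rows):
        if row[key] not in seen:
            seen.add(row[key])
            combined.append(row)
    return combined


def overlap_count(snapshot_rows, api_rows, key=KEY):
    """Count how many API rows are already present in the snapshot."""
    snapshot_keys = {row[key] for row in snapshot_rows}
    return sum(1 for row in api_rows if row[key] in snapshot_keys)


def snapshot_needs_refresh(snapshot_rows, api_rows, key=KEY):
    """True once a non-empty API pull no longer overlaps the snapshot.

    Assumes the report is sorted newest first, so the capped API result is the
    most recent rows. With zero overlap, rows created between the snapshot and
    the oldest API row may be missing from the combined table.
    """
    return len(api_rows) > 0 and overlap_count(snapshot_rows, api_rows, key) == 0


def read_rows(text):
    """Parse CSV text (with a header row) into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


if __name__ == "__main__":
    snapshot = read_rows("Id,Amount\n001,100\n002,200\n")
    api = read_rows("Id,Amount\n002,250\n003,300\n")
    combined = append_and_dedupe(snapshot, api)
    print([row["Id"] for row in combined])        # ['002', '003', '001']
    print(overlap_count(snapshot, api))           # 1
    print(snapshot_needs_refresh(snapshot, api))  # False
```

Putting the API rows first is a design choice: if a record was edited in Salesforce after the CSV export, the newer values win. The overlap check makes the "when do I re-export the CSV" decision explicit: under the newest-first assumption, once none of the API rows match the snapshot, some rows could have fallen into the gap, so the CSV should be re-exported before that point.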


Hi, I know this reply is very late lol, but were you able to find a solution? As a workaround, you could try connecting through a third-party connector, so you won't have to union two datasets later. I've tried windsor.ai, Supermetrics and funnel.io, and I stayed with Windsor because it is much cheaper; I'm just letting you know there are other options. In case you're wondering how to make the connection, first search for the Salesforce connector in the data sources list:

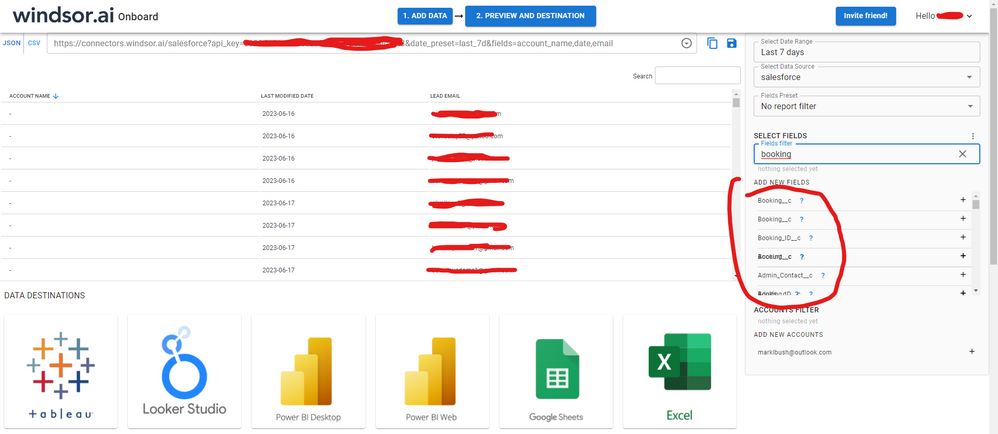

After that, grant access to your Salesforce account using your credentials. Then, on the preview and destination page, you will see a preview of your Salesforce fields:

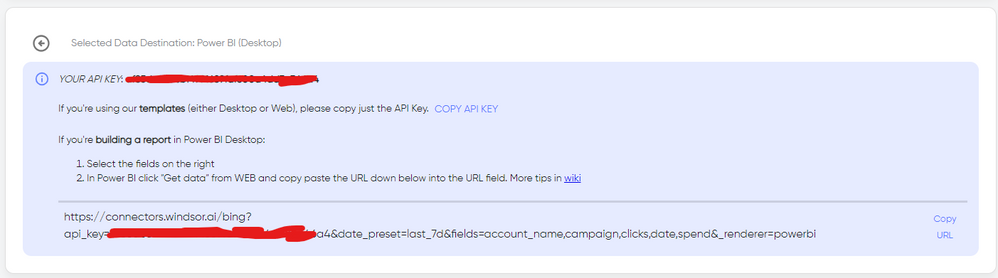

There, select the fields you need. It also supports custom fields and custom objects, so you'll be able to export those through Windsor too. Finally, select Power BI as your data destination, copy the URL it gives you, and paste it into Power BI via Get Data --> Web.


Thanks! I'll try this