FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: SharePoint list query alternative or optimizat...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

SharePoint list query alternative or optimization

Hello everyone!

Because sharepoint list queries quickly comes too slow to work with in Power BI, I have experimented with the query that sharepoint generates for excel. This is vastly more effective in Excel compared to getting the same information using the sharepoint query in Power BI. I think the excel export query is basically a query of the list view, where all the lookup values are flattened. I can't seem to find the way to recreate this query in Power BI, It looks like its using a OLE DB method, but not sure how to continue from there, and some posts suggest that this is not supported by PowerBI.

Connectionstring for excel: "Provider=Microsoft.Office.List.OLEDB.2.0;Data Source="";ApplicationName=Excel;Version=12.0.0.0"

Anyone have experience dealing with this?

I think the reason for queries to sharepoint-resources become extremely slow, is due to all the lookup and choice columns, to get the value of the column I have to expand them. An alternative to exploring these view queries is optimizing the queries, suggestions are most welcome! 😄

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@hjaf FYI that I finally made a video to describe this approach, and am adding it here for others that may find this post. It also gets the count of items and makes the right number of API calls.

Get SharePoint List Data with Power BI ... Fast - YouTube

Also, a reminder to mark one of these as the solution.

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

The problem I am having is our datasets have a column called FieldValuesAsText, which seems to contain nested records. Unfortunately, this is the data that I need to get to.

I believe this is a re-ocurring theme. Whilst all of the columns are available to be expanded with the sharepoint connectors, this does not appear to be the case with the api / json method.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm loving this approach to use the paged response to get 5000-item chunks of list items quickly, but I'm wondering if anyone has gotten filters working with that in the same query?

I realize I can retrieve all items and then filter in the query editor, but I'd love to get a pre-filtered output from sharepoint.

I tried adding $filter=Modified ge datetime'2021-05-08T09:59:32Z' to my URL string and at first I thought it was working, but then I realized my final results were showing a lot of duplication (the same items appearing many times). I think maybe the combination of filtering and pagination is causing the issue, but I'm not sure.

CmdrKeene

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @mahoneypat ... I am using your approach to get the items using REST API and it works really fast. But I have published the file to the power bi service and I cannot set the schedule refresh because I have this error: "You can't schedule refresh for this dataset because the following data sources currently don't support refresh".

Do you know how to overcome this problem?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

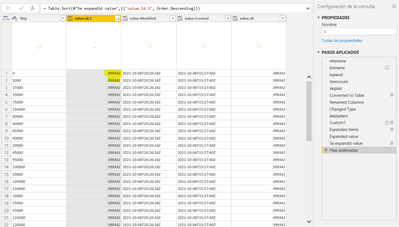

This fixed it for me. It was not refreshing as PBI App was recognising it as dynamid data source. Replaced Custom1 step with this: Custom1 = Table.AddColumn(#"Changed Type", "Items", each Json.Document(Web.Contents(baseurl, [RelativePath = "/items?$skipToken=Paged=TRUE%26p_ID=" & [Skip] & fieldselect, Headers=[Accept="application/json"]]))),

It works all right for me

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I got this to work.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Someone in this forum said to look up a solution here: https://blog.crossjoin.co.uk/2016/08/16/using-the-relativepath-and-query-options-with-web-contents-i...

I did so, and after reading a number of posts on the subject I was able to get it working in PowerBI online service. I won't bore you with a lot of details, here's just a simple modified M Code you need to use instead.

What this does is uses a static URL for the first parameter of the Web.Contents() function, then uses a little known (apparently) 2nd parameter to actually pass the rest of the URL (relative path) and query/value pairs into the query string.

You should be able to just modify the bold parts of this and be ready to go. I modified this from the original on the blog post and it even has the original commented-out bits. You can remove those if you want.

I actually hard-coded most of the paths instead of using baseurl or other variables. I probably could have still used them but I wanted immediate success 🙂

This has been an aboslute lifesaver to me. Now my list with 120K list items only takes 15 seconds to refresh.

let

baseurl = "https://TENANT.sharepoint.com/sites/SITE/_api/web/lists/GetByTitle('LIST')/",

itemcount = Json.Document(Web.Contents("https://TENANT.sharepoint.com/sites/SITE/_api/web/lists/GetByTitle('LIST')/"&"ItemCount", [Headers=[Accept="application/json"]]))[value],

skiplist = List.Numbers(0, Number.RoundUp(itemcount/5000), 5000),

#"Converted to Table" = Table.FromList(skiplist, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns" = Table.RenameColumns(#"Converted to Table",{{"Column1", "Skip"}}),

#"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns",{{"Skip", type text}}),

fieldselect = "&$top=5000", // all fields with no expansion

//fieldselect = "&$top=5000&$select = Id,Title,Person,Date", // list desired fields (no expansion)

//fieldselect = "&$top=5000&$select=Id,Title,Choice,LookupColumn/Title,LookupColumn/Project,LookupColumn/ProjectStatus,Date,Person/LastName,Person/FirstName,Person/EMail&$expand=LookupColumn,Person",

//Custom1 = Table.AddColumn(#"Changed Type", "Items", each Json.Document(Web.Contents("baseurl" & "/items?$skipToken=Paged=TRUE%26p_ID=" & [Skip] & fieldselect, [Headers=[Accept="application/json"]]))),

Custom1 = Table.AddColumn(#"Changed Type", "Items", each Json.Document(Web.Contents(

"https://TENANT.sharepoint.com/sites/SITE/_api/web/lists/GetByTitle('LIST')/",

[

RelativePath="/items?$skipToken=Paged=TRUE%26p_ID=" & [Skip] & fieldselect,

Headers=[Accept="application/json"]

]

)

)

),

#"Expanded Items" = Table.ExpandRecordColumn(Custom1, "Items", {"value"}, {"value"}),

#"Expanded value" = Table.ExpandListColumn(#"Expanded Items", "value")

in

#"Expanded value"

CmdrKeene

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am new to trying share point connections which is required since I have to utilize an Enterprise Gateway.

Also I work at a large Corporation. So the share point path goes very deep.

It looks like the only way to get connected to the correct file is to utilize filters.

Refreshing that data set takes a real long time.

So I have 2 questions. Is filtering the way to go or is there another approach.

Would this proposed solution work for my situation?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @CmdrKeene ,

I followed the process described, and I published it to the service. Set the refresh. No issues!

Only to find out that no new data is actually pushed onto the report, not even on the PBI desktop the data refreshed.

I only get new data if I create a new report.

I double-check and I have no filters. The item count is accurate, but that is not the number I get on the visuals.

I've cleared the cache but it did not make a difference.

Do you have any suggestions to fix this issue?

Thank you for your help!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @CmdrKeene for responding!

Just in case this helps someone else.

I was able to resolve the issue by changing from top 5000 to 2000. I'm getting all new records with no issues now.

This is a great solution and I can't thank you all enough for posting 👍

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since The item count is accurate, that's not the number I get on the visuals... I think there must be a simple issue like a page or report-wide filter is at play, or perhaps the aggregation is set to count instead of sum (something like that nature).

However I want to also mention something I considered it could be -- this uses the ID number of the sharepoint records. If list entries ever get deleted from your sharepoint list, the ID numbers could be the issue.

CmdrKeene

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

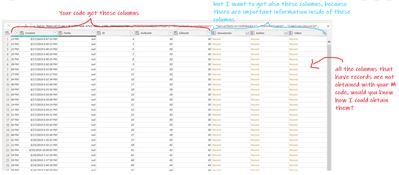

Hi CmdrKeene, first of all, thank you for your code, it worked in Power Bi Service, however I have a question, your code get all the values of the SharePoint List but i want to get also the records (see my picture), do you know how i could do that?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

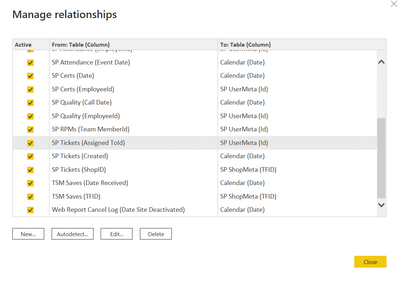

Great question. For the Author/Editor, those are really just references to another SharePoint table/list called "User Information List" that exists on all SharePoint sites. So for my purposes I retreive this list as well and then setup a relationship in my PowerBI report between the two.

SP Tickets (my main giant list with 200,000 records and growing)

SP UserMeta (just the User Information List with a custom name)

This could be handled in later steps of the query instead but I went this path.

CmdrKeene

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello CmdrKeene,

Where do I paste this code in Power BI? Do I just paste it intop a Blank query?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello lcasey,

Yes that's basically the process. Create a new blank query, go to the Advanced Editor from the view toolbar, delete everything and paste in my code instead.

CmdrKeene

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Glad you found that approach. I was about to post a link to same article.

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@hjaf FYI that I finally made a video to describe this approach, and am adding it here for others that may find this post. It also gets the count of items and makes the right number of API calls.

Get SharePoint List Data with Power BI ... Fast - YouTube

Also, a reminder to mark one of these as the solution.

Regards,

Pat

Did I answer your question? Mark my post as a solution! Kudos are also appreciated!

To learn more about Power BI, follow me on Twitter or subscribe on YouTube.

@mahoneypa HoosierBI on YouTube

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

I am facing some issue using this method. My SP list has around 50000 rows with a date range from 01/06/2022 to 15/12/2022. However, when I check in the date column, I am only able to see data for June & July, for other months it is not displaying anything. Could you let me know what could I be doing wrong here?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Are you just looking in the preview, or some other filter applied? Can you share your query?

CmdrKeene

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I checked in both Query Editor and Data view. It is the same. There are no filters applied. I am using the query pasted below:

let

baseurl = "SHAREPOINT LIST LINK_api/web/lists/getbytitle('LIST NAME')/",

itemcount = Json.Document(Web.Contents("SHAREPOINT LIST LINK_api/web/lists/getbytitle('LIST NAME')/"&"ItemCount", [Headers=[Accept="application/json"]]))[value],

skiplist = List.Numbers(0, Number.RoundUp(itemcount/5000), 5000),

#"Converted to Table" = Table.FromList(skiplist, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns" = Table.RenameColumns(#"Converted to Table",{{"Column1", "Skip"}}),

#"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns",{{"Skip", type text}}),

fieldselect = "&$top=2000", // all fields with no expansion

//fieldselect = "&$top=5000&$select = Id,Title,Person,Date", // list desired fields (no expansion)

//fieldselect = "&$top=5000&$select=Id,Title,Choice,LookupColumn/Title,LookupColumn/Project,LookupColumn/ProjectStatus,Date,Person/LastName,Person/FirstName,Person/EMail&$expand=LookupColumn,Person",

//Custom1 = Table.AddColumn(#"Changed Type", "Items", each Json.Document(Web.Contents("baseurl" & "/items?$skipToken=Paged=TRUE%26p_ID=" & [Skip] & fieldselect, [Headers=[Accept="application/json"]]))),

Custom1 = Table.AddColumn(#"Changed Type", "Items", each Json.Document(Web.Contents(

"SHAREPOINT LIST LINK_api/web/lists/getbytitle('LIST NAME')/",

[

RelativePath="/items?$skipToken=Paged=TRUE%26p_ID=" & [Skip] & fieldselect,

Headers=[Accept="application/json"]

]

)

)

),

#"Expanded Items" = Table.ExpandRecordColumn(Custom1, "Items", {"value"}, {"value"}),

#"Expanded value" = Table.ExpandListColumn(#"Expanded Items", "value"),

#"Removed Other Columns" = Table.SelectColumns(#"Expanded value",{"value"}),

#"Expanded value1" = Table.ExpandRecordColumn(#"Removed Other Columns", "value", {"EmployeeEmailID", "WeekStart", "WeekStartNum", "DayOfWeek", "DayOfWeekNum", "EmployeeReportingToEmailID", "ActualWorkHours", "ApprovalStatus", "MasterID_Weekly", "TotalHr", "Modified", "Created", "ID"}, {"EmployeeEmailID", "WeekStart", "WeekStartNum", "DayOfWeek", "DayOfWeekNum", "EmployeeReportingToEmailID", "ActualWorkHours", "ApprovalStatus", "MasterID_Weekly", "TotalHr", "Modified", "Created", "ID"}),

#"Changed Type2" = Table.TransformColumnTypes(#"Expanded value1",{{"WeekStart", type datetime}, {"DayOfWeek", type datetime}, {"Created", type datetime}, {"Modified", type datetime}}),

#"Changed Type1" = Table.TransformColumnTypes(#"Changed Type2",{{"ID", type number}, {"WeekStart", type date}, {"WeekStartNum", type number}, {"DayOfWeek", type date}, {"DayOfWeekNum", type number}, {"ActualWorkHours", type number}, {"Modified", type date}, {"Created", type date}})

in

#"Changed Type1"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

first of all you are a crack, My query is the following, I am applying your code and I have a very large sp list.... and it returns me 150000 elements but they are duplicates please could you help me

let

sitename ="<your site>", // if a subsite use "Site/SubSite"

listname = "BigList",

baseurl = "https://<your SharePoint URL>/sites/" & sitename & "/_api/web/lists/GetByTitle('" & listname & "')/",

itemcount = Json.Document(Web.Contents(baseurl&"ItemCount", [Headers=[Accept="application/json"]]))[value],

skiplist = List.Numbers(0, Number.RoundUp(itemcount/5000), 5000),

#"Converted to Table" = Table.FromList(skiplist, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns" = Table.RenameColumns(#"Converted to Table",{{"Column1", "Skip"}}),

#"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns",{{"Skip", type text}}),

fieldselect = "&$top=5000", // all fields with no expansion

//fieldselect = "&$top=5000&$select = Id,Title,Person,Date", // list desired fields (no expansion)

//fieldselect = "&$top=5000&$select=Id,Title,Choice,LookupColumn/Title,LookupColumn/Project,LookupColumn/ProjectStatus,Date,Person/LastName,Person/FirstName,Person/EMail&$expand=LookupColumn,Person",

Custom1 = Table.AddColumn(#"Changed Type", "Items", each Json.Document(Web.Contents(baseurl& "/items?$skipToken=Paged=TRUE%26p_ID=" & [Skip] & fieldselect, [Headers=[Accept="application/json"]]))),

#"Expanded Items" = Table.ExpandRecordColumn(Custom1, "Items", {"value"}, {"value"}),

#"Expanded value" = Table.ExpandListColumn(#"Expanded Items", "value")

in

#"Expanded value"

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!