FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Share your thoughts on DirectQuery for Power B...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Share your thoughts on DirectQuery for Power BI datasets and Azure Analysis Services (preview)

Hit Reply and let us know what you think of the DirectQuery for Power BI datasets and Azure Analysis Services. To learn more about this feature, please visit this blog post or our documentation.

Here are some areas that we'd like to hear about in particular:

- Performance

- Query editor experience--the remote model query doesn't show up in the query editor and only in the data source settings dialog. What are your thoughts?

- Navigator experience

- Thoughts around governance and permissions for models that leverage this feature

- Nesting models, i.e. building a composite model on top of a composite model

- Automatic page refresh for live connect in composite models

Thanks and we look forward to hearing your feedback!

- The Power BI Modeling Team

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

RLS is not being applied _at the moment_ as we are still in preview. It will be applied soon. RLS rules on central model will be applied even for B

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I wanted to let you know that I posted additional details pertaining to my team's errors here.

@Zarek @frostystoo : I believe these are the same errors you are also seeing:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Been waiting for this capability and looking forward to it ever since it was announced. My initial plan of use was to break apart some larger complex datasets into 'child' datasets that could then be combined back into the larger parent dataset but still allow other organizational reporting areas to leverage some of the specific content of the child datasets to prevent the need from adding a bunch of tables that would be unnecessary to the application.

Initially this was looking good. However, I ran into a limitation with the model being directquery, namely the limitation of passing more than one million records to an external source.

My setup was two separate Power BI datasets with a one to many relationship (I also tried bidirectional just to see) between a fact table from each. The visual renders fine until a dimension from the second dataset is added to the first. If filters are applied to the second dataset so that the resulting record count is below 1,000,000 records everything is good. Even though the first dataset has far fewer than 1,000,000 records, it would seem the second is trying to pass 1,000,000+ across the relationship and thus erroring out due to the directquery limitation.

This is unfortunately something that would likely frequently be an issue for us when trying to build out a robust enterprise model leveraging datasets from different functional areas to more effectively and efficiently leverage our premium capacity.

I think this can still be useful for some targeted uses but not as much from a large governed data model.

Unless I'm doing something wrong - then I'm all ears!

Another issue I came across was that I can no longer get directquery dataflows to work whereas I could before.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @clementskr !

See my blog post - DirectQuery gives weired queries with enormous big DAX...

Go for "Import"!

“Composite” arrived – CAUTION!

Regards

Oliver

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I share your sentiment sir!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

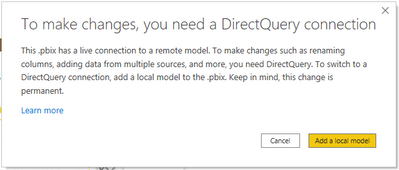

Thanks for your reply. I pretty much always use import. However, if HR has a Power BI dataset that they have built with all of the enterprise semantic layers built into measures and likewise Finance has a Power BI dataset (both Imported on their own), and I want to tie the two together with a relationship between the two without rebuilding all of the already approved measures, which is what this feature allows me to do, I'm limited by the direct query max rows limitation of the relationship is of high cardinality. Both sources are imported in themselves but tying them together forces it to a direct query source. Obviously, a single imported dataset of a full enterprise could be made, but that seems like a maintenance and refresh nightmare.

So as far as I can tell, connecting large functional specific datasets to other large functional specific datasets isn't really enabled (depending on what that relationship is and the cardinality of such) because of direct query limitations.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, I was not aware that also there are limits when the direct is to an tabular in memory...

I also had some other issues to connect datasets.

See:

Stacked datasets

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello again.

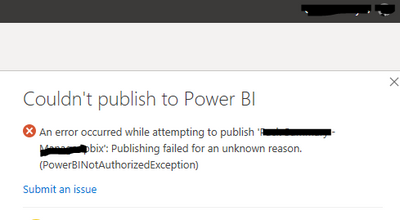

For some reason im getting this issue whenever I publish reports using the new Feature (Multiple PowerBI Dataset)

Can anyone help me whats going on?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm having the exactly same issue on my end. I believe it is related to problems with build permission. The publish work correctly when you connnect to datasets located in a workspace you are member of. The above exception occurs when you try to publish a report which utilizes Direct Query connection to a dataset you only have build permission to but are not a member of a workspace it resides in.

I have a support ticket opened on this but it is progressing painfully slow.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

not sure, does this happen with other "normal" datasets as well?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @tessahurr!

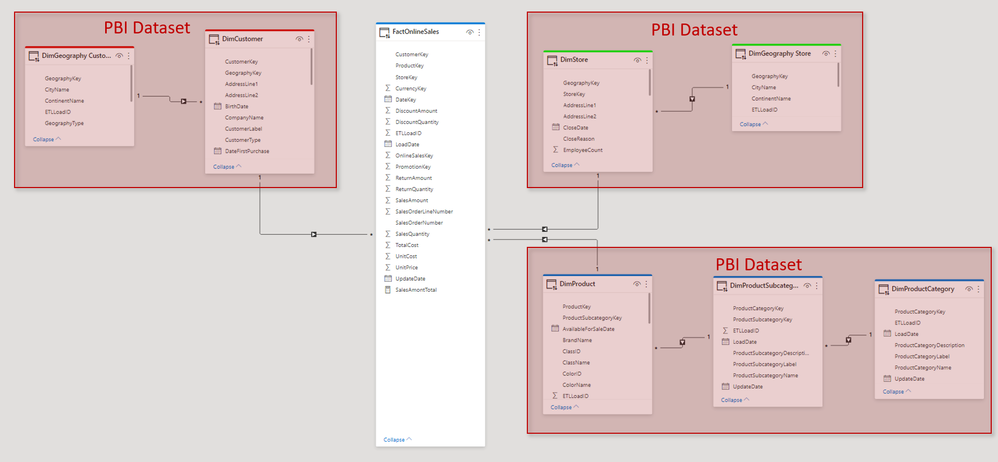

You asked for stacked datasets, I tried 😉

Let's do not think about if this makes any sense for real purpose...

I did build up 3 seperate datasets and then joinded them with an imprted table:

1) works fine

2) Access permissions to each single dataset no problem

3) DAX query is fine

4) Of course you have some how to handle duplicated dimension tables... (Geo)

Then I made of this complete model a dataset again.

This shoud be the base of a user report.

But I got some access issue... "Single Sign-on"?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok, got it.

Yes that would give a strange model - exactly what I try to point out in my BLOG, that design will get even more important, what to join, how to join, which data to use...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi!

A great innovation long awaited.

But also a risk, as even on perfect designed models and small dataset it gives weired queries with 3.000 lines or even 50.000 lines of DAX!!!

Read this:

“Composite” arrived - CAUTION!

Regards

Oliver

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello. I have a use case for needing more than 3 chain lengths for direct query to AS. Our Operations department uses multiple applications in their day to day activities. We have a data warehouse where we have slowly connected most of these applications and modeled their data according to our processes for easy reporting. We still have some applications not yet added to our data warehouse and some data sources which will never be added to our DWH so we have utilized data flows to access these data sources. We have created about 4 certified datasets for the data from these applications connected to our DWH and shared these within our organization for employess to use if they desire. Now our Operations department wants a set of KPIs in a single report based on data from all of the various applications they use. Up to now, we have had to develop a single BIG dataset containing the same tables from all of the certified dataset plus the data from the above mentioned data flows. This is so we could create the single report with multiple bookmarks, buttons, and slicers. This BIG dataset has been a nightmare to maintain (over 100 measures, over 60 tables) and we would much rather compartmentalize the data into these smaller certified dataset which would be easier to maintain and manage changes. I attempted to create the same BIG model using the new DQ for AS feature but since it contained 4 datasets and about 15 dataflow tables, it would not publish to the service. Please @tessahurr increase the maximum number of chains!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We are in a similar situation. We have one large dataset in order to support cross-departmental reports. However, this leads to difficulties with refresh scenarios.

Preferably, we could have process specific data-marts that refresh individually and could be joined together in a composite model when needed. Better still would be if those marts could be fed by dataflows. However, in this scenario, we would have already used up most/all of the chain before the user ever gets to it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks for sharing your use case, this is great input!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For those that might have missed it:

Update December 18th 2020: we have just released Power BI Desktop version 2.88.702.0 which fixes the two most common issues we have seen being reported:

- As part of this feature, significant changes were made to the authentication flow for live connections to Analysis Services which applies even when you do not have this preview feature enabled in Power BI Desktop. Users were not able to recover from entering incorrect credentials or selecting the incorrect credential type and would see error messages such as 'Unable to connect'. You can now either select 'Retry' or use the Data Source Settings dialog to update your credentials.

- Converting from a model that is live connected to a DirectQuery model failed if the model contained a measure-only table and the following error message was displayed: 'The given key was not present in the dictionary'.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you, I think thats it. I was connecting to a dataset in "My Workspace" which I see isn't supported. Next error is this "An error occurred while loading the model. Verify that the connection information is correct and that you have permissions to access the data source.". The dataset I am trying to connect to uses Import from an AWS Redshift datasource and is refreshed via the Gateway.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hitting the same wall with the "An error occurred while loading the model. Verify that the connection information is correct and that you have permissions to access the data source." message, also have AWS Redshift as a data source on the published PBI files.

Were you able to resolve it?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@jeroenterheerdt we are also still getting this error (just loaded the latest PBI version today too). I even tried publishing a dataset to the Power BI Service that is loading a very simple excel file and am still get this error when trying to Live Connect to it and then add a local model. Is this still due to the deployment delays, or do you have any additional thoughts on what might be going on? Thank you for any assistance!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @MBonnett - deployment should be done now (except China). So try it again and if it still doesn't work, let me know.

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!