Fabric Data Days starts November 4th!

Advance your Data & AI career with 50 days of live learning, dataviz contests, hands-on challenges, study groups & certifications and more!

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM. Register now.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Remove duplicates not working.. Can't create r...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Remove duplicates not working.. Can't create relationship

Hello,

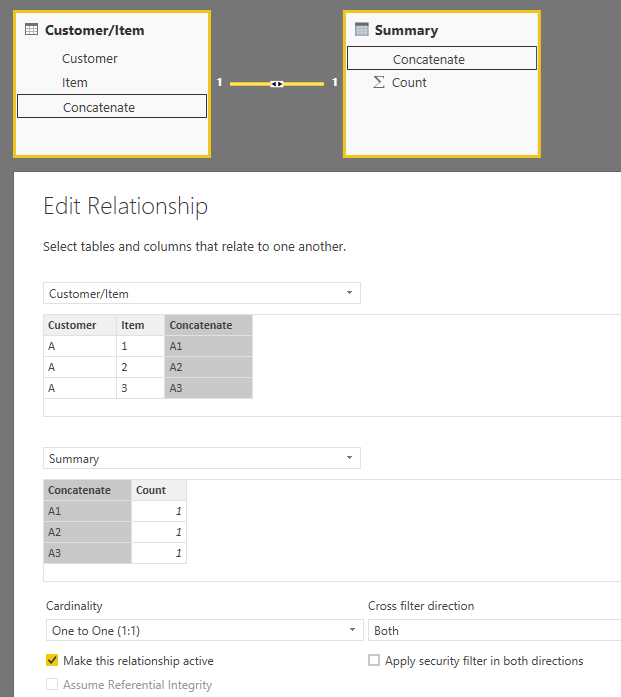

I have a dataset with customer and a discount structure for that customer per part. I created a concatenate in the edit query screen of the customer, and there are a couple duplicates. Highlighted the Concatenate column, and clicked remove duplicates.

Tried creating a relationship and it didn't work. Created a summary table of the concatenate and COUNTROWS, and lo and behold there are a handful that have a count of two. Why is this happening?

Thanks

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I double and triple checked. Everything was done the same way as in my example. Must be an error due to such a large data set.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For those who require a different solution, try using an R script to remove the duplicates.

I used the following script to solve the same problem:

output<-dataset[!duplicated(dataset$myColumn),]

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Another thing to look for -

if you have "null" and blank values, it seems to treat them the same when you "remove duplicates".. but then it considers them not unique in the relationship view...

what i did that worked, was to click "remove duplicates" - then inthe "Home" tab in the ribbon, click on the drop-down in "Remove rows", and click on "Remove Blank Rows".

after removing those blank rows, everything worked great for me!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, this works for me (in Apr 2022).

Removing duplicates using PowerQuery's menus doesn't remove NULL values so "Remove Empty" on the row after removing duplicates solves the issue as mentioned by aar0n.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

If you are removing duplicates from a text column (costumer) verify if you dont have upper and lower case letters on the same field.

I.e: "Customer1" and "customer1". If you remove duplicates, power query will consider them as unique references and wont remove any of the them. But while creating relationships you will get an error of duplicate values.

BR

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I found a work around to this...

1. Duplicate the query with "Duplicate values"

2. Remove all columns except the one you want to be unique.

3. Group by.

4. Do a "Left Outer" Merge with the original Query

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just had the same issue the error having duplicate values on the key I was using to connect two tables. I had removed duplicate values based on the key column and I was still not able to join the two tables together.

It turns out the problem was a null value in the key column. I did a row filter and removed the null value and the two tables joined together fine. The error message was misleading.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just had the same problem with joining two tables after I made sure to remove duplicates on the key column in one of the tables and getting the error message about duplicate values.

The issue actually was a NULL value in a single row in the table in my key column. Power BI was complaining about duplicates but in reality it was the null value that was preventing it from joining the two tables. I did a row filter and removed the NULL value and the two tables connected together just fine.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Would have to see some sample data where the problem could be reproduced.

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The same exact steps in the edit query in this situation worked. They do not with my large data set (10 Million lines & about 20 duplicates).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would report this as an Issue: https://ideas.powerbi.com/forums/360879-issues

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Bummer.. Well I just ended up making another table to mirror the first, linked the summary table, and filtered all the ones. it worked, but with this many lines I'd rather have it be more efficient. Thanks for your help @Greg_Deckler

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

As I tested, the relationship can be created properly between two tables in your sample.

Have you get any error when creating relationships? Did you select correct columns?

Regards,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I double and triple checked. Everything was done the same way as in my example. Must be an error due to such a large data set.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm having the same issue but the data set is only around 2000 rows.

Note that there seems to be an inconsisencty in how case sensetivity is handled: the Remove Duplicates feature is case sensetive but the uniquemenss check done when created creating realationshiops between queries is not. To work around this I just format the key column in the query to UPPERCASE. But this is an aside, I'm getting the issue even though cases are the same

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you! This solution worked for me to transform the values to UPPERCASE.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Had the same problem. Saw the post about case sensitivity with duplicate removing. Transformed column to all UPPERCASE. Removed duplicates (3rd time)... and then the relationship worked. Make sure everyone reports it so we can get this fixed!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This really should get more upvotes. My issue was due to incorrect cases, too. I transformed all values to UPPERCASE and things worked out!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much for mentioning this issue with cases in remove duplicates vs joins! I had been struggling with this all day!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am getting this issue with the latest version of Desktop as well. My dataset is 4,700 rows of data. Remove Duplicates is applied in the query stage, but when the data is loaded into the data model there are duplicates in the data.

I cannot manually search for the duplicate values in the query because it says 'Limit of 1000 values reached'.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

Create a table and then export data to excel and do a duplicate check there.

Helpful resources

FabCon Global Hackathon

Join the Fabric FabCon Global Hackathon—running virtually through Nov 3. Open to all skill levels. $10,000 in prizes!

Power BI Monthly Update - October 2025

Check out the October 2025 Power BI update to learn about new features.

| User | Count |

|---|---|

| 79 | |

| 38 | |

| 31 | |

| 27 | |

| 27 |