FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! It's time to submit your entry. Live now!

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Refresh not running R script

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Refresh not running R script

Hi, I have data that is updated every day, but I want to store historical values so that they are not overwritten. The way I am trying to do this is to export my dataset and append it to a csv file, with an added column that inidcates the time of the extraction. This works when I hit "refresh preview" in the query editor. However, when I hit refresh in the regular desktop view it seems to be skipping the R script steps as the csv is not modified in any way. Is there some setting I am missing?

Also, has anyone tried this with scheduled refresh?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also having this issue, has a solution been found?

R query i'm using

install.packages("openxlsx")

library("openxlsx")

write.xlsx(dataset, "removed this but is a valid directory", asTable = TRUE, overwrite = TRUE)

When i refresh preview in power query, file is created/ overwritten correctly.

However when refreshing using powerBI Desktop the file is not created/overwritten

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @oslosa ,

Sorry for that, but we cannot reproduce this issue on my side with following steps, it export a csv file successfully by using refresh in the Power Query and desktop view.

Here are the steps,

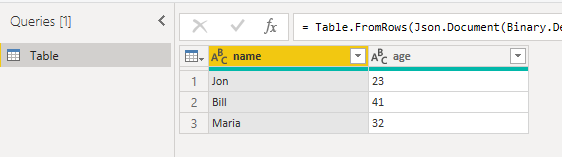

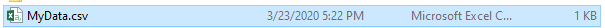

- enter a simple table in Power Query.

- Then we run the R script to export csv file,

df <- data.frame(name = c("Jon", "Bill", "Maria"),age = c(23, 41, 32))

write.csv(df,"D:\\MyData.csv", row.names = FALSE)

- In the Power Query, we test refresh.

And we test refresh in the Desktop view,

Could you please try to reproduce this issue with some simple R Script and the latest version Power BI Desktop?

Here are the queries we used, for reference:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45W8srPU9JRMjJWitWJVnLKzMkB8kwMwTzfxKLMRCDX2EgpNhYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [name = _t, age = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"name", type text}, {"age", Int64.Type}}),

#"Run R script1" = R.Execute("df <- data.frame(name = c(""Jon"", ""Bill"", ""Maria""),age = c(23, 41, 32))#(lf)write.csv(df,""D:\\MyData.csv"", row.names = FALSE)",[dataset=#"Changed Type"]),

#"df1" = #"Run R script1"{[Name="df"]}[Value]

in

#"df1"

Best regards,

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@v-lid-msft , I tested your code and it works. It also works when I modify from write.csv to write.table and add append=TRUE, which is what I have used on my data. It seems to be refreshing and updating the csv both in query editor, desktop view and via scheduled refresh, although creating some duplicates.

For my dataset (which is only 130kb) I experience the following:

1. The csv file is updated when I refresh from the query editor

2. The csv file is updated every time I open the .pbix file (which is surprising and does not happen with your example)

3. The csv file is not updated when I refresh from desktop

4. The csv file is not updated when I run scheduled refresh in the online version.

Any ideas? It seems that the refreshes are stored as backlogs until I close and open the pbix file.

For reference I am running this:

# 'dataset' holds the input data for this script

df <- data.frame(dataset)

write.table(df,"H:/Power BI/ProdData.csv",sep = ",", col.names = !file.exists("H:/Power BI/ProdData.csv"), append = TRUE)

where the data source is a 130kb table from SAP Business Warehouse.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am also having this exact same issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Having exact same issue. Would appreciate a explanation and fix.

J

Helpful resources

| User | Count |

|---|---|

| 53 | |

| 40 | |

| 31 | |

| 24 | |

| 22 |

| User | Count |

|---|---|

| 135 | |

| 111 | |

| 57 | |

| 44 | |

| 38 |