Huge last-minute discounts for FabCon Vienna from September 15-18, 2025

Supplies are limited. Contact info@espc.tech right away to save your spot before the conference sells out.

Get your discount- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Score big with last-minute savings on the final tickets to FabCon Vienna. Secure your discount

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Query to Scrap URLs with a pause every xx URLs

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Query to Scrap URLs with a pause every xx URLs

Hi,

I am using a query to scrap a list of data from various URLs of the same website.

I am using the web.content code to get those data e.g: (Json.Document(Web.Contents("https:// ….).

I think that my query is now overloading the website with too many queries too fast.

I tried to use the Function.InvokeAfter but then the query is really too slow to load.

I am wondering if it is possible to code a wait (pause or sleep) function once xx rows were loaded.

Let’s say my query has to scrap 1000 URLs would, it be possible to have a pause of x minutes every 100 URLs ?

I have already read a lot of useful tips, but nothing which could solde this issue.

Many thanks for any help.

Regards,

G

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Many thanks for your help Angelia.

It took me some time to manage to do what I want, but finally I did it thanks to the link shared by Angelia and a few things from here and there.

I am going to summarize the various steps in case it can help some of you 😉

01 – First query: getting data list to scrap, and setting wait parameters:

===============================================================

I have a first query using an api which collect a list of data used to scrap an URL of the same website.

==> So this query collect a list of data

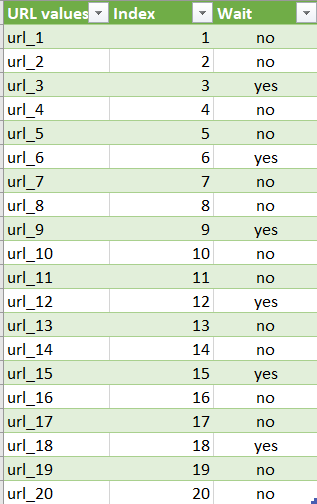

==> then I add an index column to get the row count from 1 to xxx

==> then I use a modulo formula to set a pause in the query every xx rows.

Here the sample of this first query:

let

Source = Json.Document(Web.Contents("https://api.xxxxxxx")),

…….

#"Index ajouté" = Table.AddIndexColumn(#"Column1 développé", "Index", 1, 1),

Row_Mod_Multiple = 3,

#"Personnalisée ajoutée" = Table.AddColumn(#"Index ajouté", "Wait", each if Number.Mod([Index], Row_Mod_Multiple) = 0 then "yes" else "no")

in

#"Personnalisée ajoutée"Thanks to the modulo formula with a row multiple of 3, every 3 rows the query will wait xxx seconds (or minutes) before to proceed with the next row.

02 – Second query: wait formula:

===============================================================

This second query is the wait function (this query is used to add a wait time of xxx seconds), make sure to name it “Wait_Seconds”:

let

Wait = (seconds as number, action as function) =>

if (List.Count(List.Generate(() => DateTimeZone.LocalNow() + #duration(0,0,0,seconds), (x) => DateTimeZone.LocalNow() < x, (x) => x)) = 0)

then null else action()

in

WaitThis wait function will be used in the third query.

03 – Third query: scrap urls and wait every xxx rows:

===============================================================

let

Source = #"Query1",

#"Personnalisée ajoutée" = Table.AddColumn(Source, "id", each if [Wait] = "yes" then Wait_Seconds(5, () => fnGetdata([URL values]) ) else fnGetdata ([URL values]) ),

……This last query will scrap the list of URLs and will wait 5 seconds every 3 rows.

As some website are blocking IP address which are making too many requests per minutes, this approach can help to avoid the issues.

Hope it can help 😉

Best,

G

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Gringoh,

Please reference to the link: https://blog.crossjoin.co.uk/2014/11/17/timing-power-query-queries/. And you'd better post Power Query question to corresponding forum: Power Query Forum.

Thanks,

Angelia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Many thanks for your help Angelia.

It took me some time to manage to do what I want, but finally I did it thanks to the link shared by Angelia and a few things from here and there.

I am going to summarize the various steps in case it can help some of you 😉

01 – First query: getting data list to scrap, and setting wait parameters:

===============================================================

I have a first query using an api which collect a list of data used to scrap an URL of the same website.

==> So this query collect a list of data

==> then I add an index column to get the row count from 1 to xxx

==> then I use a modulo formula to set a pause in the query every xx rows.

Here the sample of this first query:

let

Source = Json.Document(Web.Contents("https://api.xxxxxxx")),

…….

#"Index ajouté" = Table.AddIndexColumn(#"Column1 développé", "Index", 1, 1),

Row_Mod_Multiple = 3,

#"Personnalisée ajoutée" = Table.AddColumn(#"Index ajouté", "Wait", each if Number.Mod([Index], Row_Mod_Multiple) = 0 then "yes" else "no")

in

#"Personnalisée ajoutée"Thanks to the modulo formula with a row multiple of 3, every 3 rows the query will wait xxx seconds (or minutes) before to proceed with the next row.

02 – Second query: wait formula:

===============================================================

This second query is the wait function (this query is used to add a wait time of xxx seconds), make sure to name it “Wait_Seconds”:

let

Wait = (seconds as number, action as function) =>

if (List.Count(List.Generate(() => DateTimeZone.LocalNow() + #duration(0,0,0,seconds), (x) => DateTimeZone.LocalNow() < x, (x) => x)) = 0)

then null else action()

in

WaitThis wait function will be used in the third query.

03 – Third query: scrap urls and wait every xxx rows:

===============================================================

let

Source = #"Query1",

#"Personnalisée ajoutée" = Table.AddColumn(Source, "id", each if [Wait] = "yes" then Wait_Seconds(5, () => fnGetdata([URL values]) ) else fnGetdata ([URL values]) ),

……This last query will scrap the list of URLs and will wait 5 seconds every 3 rows.

As some website are blocking IP address which are making too many requests per minutes, this approach can help to avoid the issues.

Hope it can help 😉

Best,

G