FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

To celebrate FabCon Vienna, we are offering 50% off select exams. Ends October 3rd. Request your discount now.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Not enough memory to complete this operation

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Not enough memory to complete this operation

I have loaded a 4MB excel into PBI (which concludes about 66,000 rows of data). And then I created a few calculated columns, and one of them got an error below:

There's not enough memory to complete this operation. Please try again later when there may be more memory available.

I searched online and tried some suggestions, for example,

- Turned off time intelligence

- Increased data cache

- Cleared cache

- Disabled data preview to download in the background

However, all trials above went in vain.

Some threads suggested it might be due to the poorly written DAX format.

I don't know whether my DAX is bad or not because it is quite simple. Below is the DAX having such "memory issue":

MaxLastHotTime =

CALCULATE(

MAX('new vessel'[New Off Chassis Time]),

ALLEXCEPT('new vessel', 'new vessel'[TRACTOR_NO], 'new vessel'[VoyageID]),

'new vessel'[New On Chassis Time] < EARLIER('new vessel'[New On Chassis Time])

)

I had a trial to take away the EARLIER() function. It works completely fine.

I had a trial to minimize the data file (as the excel is actually a combined log, having one month amount log being put together). It works completely fine as well.

Therefore, I know that the root cause is due to the EARLIER() function.

Can anyone help solve this issue?

For Your Information, it is the specification of my laptop:

Memory: 16GB

CPU: Intel i5 7th Gen

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous ,

ah sorry, I thought you get the error when you apply in PowerQuery.

Yes, in the calculated column I get the same error. From my point of view this comes from the EARLIER function, that can be quite expensive.

In general it's not recommended any more to use EARLIER as the function is quite confusing. The recommendation is to use variables instead.

Here from dax.guide:

It is recommended using variable (VAR) saving the value when it is still accessible, before a new row context hides the required row context to access the desired value.

As I didn't have the initial result, I can't really compare. But I think the following calculated column should produce the result you desire and without performance issues:

MaxLastHotTime_New =

VAR vTractorCurrentRow = 'new vessel'[TRACTOR_NO]

VAR vVoyageCurrentRow = 'new vessel'[VoyageID]

VAR vNewOnChassisCurrentRow = 'new vessel'[New On Chassis Time]

RETURN

CALCULATE(

MAX('new vessel'[New Off Chassis Time]),

ALL('new vessel'),

'new vessel'[TRACTOR_NO] = vTractorCurrentRow

&& 'new vessel'[VoyageID] = vVoyageCurrentRow

&& 'new vessel'[New On Chassis Time] < vNewOnChassisCurrentRow

)

Let me know if that works for you.

If you need any help please let me know.

If I answered your question I would be happy if you could mark my post as a solution ✔️ and give it a thumbs up 👍

Best regards

Denis

Blog: WhatTheFact.bi

Follow me: twitter.com/DenSelimovic

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

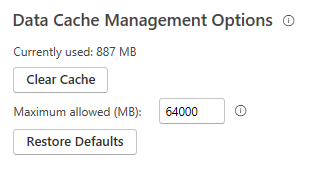

I was able to solve this error by increasing the Maximum allowed cache to 64000 MB.

"File"-->"Options and Settings"--> "Options"--> "Global-Data Load"--> "Data Cache Management Options"

Also, ensure that you either have enough RAM or increase the Virtual Memory to the same size.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello I have the same issue about the memory to complete the operation I changed all earlier formula as suggested but the issue remains.

I have also used maxx in DAX to calculate approximate value:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @Anonymous ,

how big is the size of your data model?

Can you post a picture of the metrics of the model or even better export them with DAX Studio?

Does this happen on your Desktop or in the service?

Best regards

Denis

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, @selimovd

After loading into the PBI, it is about 4MB. Sorry that I don't have a DAX Studio currently. It will be good if it can be solved without touching DAX Studio.

I am using PBI Desktop.

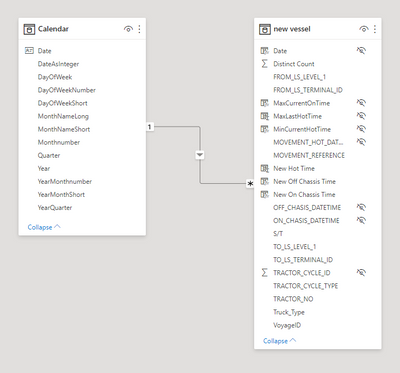

Below is the model and their relationship.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @Anonymous ,

that is very strange, 60K rows and a 4MB file size and you get a out of memory exception.

What is your data source? Do you do any crazy transformation in the file? Is it maybe possible to share your PBIX file?

Did you deavtivate the auto date time?

Best regards

Denis

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @selimovd ,

Yes, it is very strange, and I really have no idea why it happens with this simple DAX.

The data source is just a 4MB excel file, and I didn't do any crazy transformation..... just very ordinary I can tell.

Below is my the shared PBIX file.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous ,

I cannot reproduce the behavior.

For me it takes about 5 seconds to refresh the whole file.

Can you reboot and try again? Or maybe you have another computer or a VM to try?

Best regards

Denis

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tried once right after rebooting my computer, but still can't get the values...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous ,

ah sorry, I thought you get the error when you apply in PowerQuery.

Yes, in the calculated column I get the same error. From my point of view this comes from the EARLIER function, that can be quite expensive.

In general it's not recommended any more to use EARLIER as the function is quite confusing. The recommendation is to use variables instead.

Here from dax.guide:

It is recommended using variable (VAR) saving the value when it is still accessible, before a new row context hides the required row context to access the desired value.

As I didn't have the initial result, I can't really compare. But I think the following calculated column should produce the result you desire and without performance issues:

MaxLastHotTime_New =

VAR vTractorCurrentRow = 'new vessel'[TRACTOR_NO]

VAR vVoyageCurrentRow = 'new vessel'[VoyageID]

VAR vNewOnChassisCurrentRow = 'new vessel'[New On Chassis Time]

RETURN

CALCULATE(

MAX('new vessel'[New Off Chassis Time]),

ALL('new vessel'),

'new vessel'[TRACTOR_NO] = vTractorCurrentRow

&& 'new vessel'[VoyageID] = vVoyageCurrentRow

&& 'new vessel'[New On Chassis Time] < vNewOnChassisCurrentRow

)

Let me know if that works for you.

If you need any help please let me know.

If I answered your question I would be happy if you could mark my post as a solution ✔️ and give it a thumbs up 👍

Best regards

Denis

Blog: WhatTheFact.bi

Follow me: twitter.com/DenSelimovic

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Excellent I was having this issue for several days and the issue was the EARLIER function. Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@selimovd I am sorry that I have left for a few days. And I am home just now.

Thanks for your alternatives, it works the exactly the same as what I expected. Though I still have no idea why EARLIER function doesn't be suggested (as I have gone through the articles and websites, they don't explicitly explain it well but only suggest using varaible is an alternatives), I might have recognized the root cause after understanding the principle of EARLER.

It creates an inner virtual table for every row. So my original DAX was actually creating a very large table to be mapped with which consumed a super big amount of memory.

It is my understanding but I don't really know if it is correct because.... from your alternatives, the difference between us was

1. Apply ALL() function to clear filters being applied

2. Discard EXCEPTALL() function and replace it by filtering (with VAR)

3. EARLIER() function is being replaced by creating VAR

The whole idea is the same, but would you like to explain a bit what makes yours different from mine?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, @selimovd

Really?! You mean you succeed to have values under the calculated column "MaxLastHotTime"?

Btw, what is your computer's specification?