FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Multiple lines text data extraction

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Multiple lines text data extraction

I have a column with several comments rows. Each row of this column contains several lines of text with a structure with product records like this:

2018-11-21 12:22:15 - Product x ( Comment)

Here can appear any comments

2018-11-20 13:49:41 - Product x (Comment)

Here again can appear any comments

2018-11-17 16:42:41 - Product y (Comment)

Here again can appear any comments

2018-11-16 11:29:11 - Product a (Comment)

Here again can appear any comments

So this column has multiple lines of comments but always there is a header with a date and Product name. There isn no limit to number of products records that can appear and also can appear repeated products (with different dates and times). Products can be related to two different categories, Category 1 (I have a column named Category 1 with all posible product values for this category) and Category 2 (I have another column named Category 2 with all possible product values for this category).

Latest category products, by record time, is always category 2, but some times may be only category 1 records.

What I need is to extract all product records (removing other comments), check moment when products records changes from Category 1 to Category 2 (it can happen only once) and create two colums, one with this last product record for Category 1 and other with product record for Category 2 (just records, no comments). I want to use this columns data to measure the time elapsed between product change from Category 1 to Category 2.

I hope to have been clear with my explanations and thank you so much for your help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

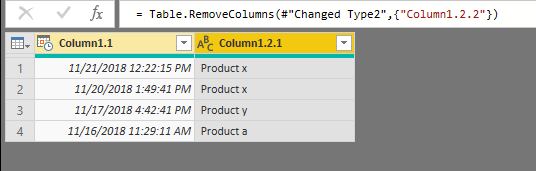

1.Split the column as the picture below.

2. Split the Column1.2 based on "C", then we can get the result as below.

M code for your reference.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("dcrNCkBAFAbQV/maFWXKd42/u/UC9pOFsESJ4u1tZ4qzPt4bydlY0gpBURFlCYv+2OdrOnEjQbev67KdqRmyoOdgoa5Vx7h/btZgpU7i/fztCqRKqwz3GO7hBQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Column1 = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Column1", type text}}),

#"Split Column by Delimiter" = Table.SplitColumn(#"Changed Type", "Column1", Splitter.SplitTextByEachDelimiter({"-"}, QuoteStyle.Csv, true), {"Column1.1", "Column1.2"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"Column1.1", type datetime}, {"Column1.2", type text}}),

#"Split Column by Delimiter1" = Table.SplitColumn(#"Changed Type1", "Column1.2", Splitter.SplitTextByEachDelimiter({"("}, QuoteStyle.Csv, false), {"Column1.2.1", "Column1.2.2"}),

#"Changed Type2" = Table.TransformColumnTypes(#"Split Column by Delimiter1",{{"Column1.2.1", type text}, {"Column1.2.2", type text}}),

#"Removed Columns" = Table.RemoveColumns(#"Changed Type2",{"Column1.2.2"})

in

#"Removed Columns"

If this post helps, then please consider Accept it as the solution to help the others find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much, but this is not what I need. I will paste a better example. In this table in column Work notes you can see that we have several lines with different support logs. We have two different support levels Level 1 and Level 2. I need to measure elapsed times every time that there is an assignment from Level 1 to Level 2 (I need to know assignment time). Level 2 people are from Stark house xD, so you can see what should appear in Last Level 1 log, Level 2 log and Elapsed time colums. In the first two rows, there are not yet Level 2 assigned (no Stark log) but in the last row there is a Sansa log so that are the values that should appear. To check if a person is from Level 1 or Level 2 support I have two tables tLvl1 and tLvl2. So I should search Work notes column looking for all support logs and find the moment where there is an escalation between Level 1 and Level 2.

Thank you so much.

Work notes Last Level 1 log Level 2 log Assignment time

| 2019-04-17 17:19:50 - Varys (Work notes) Dear Global Level 2 support team, Could you please assist? Thank you, Kind regards, Support 2019-04-17 17:03:47 - Jaime Lannister (Work notes) Ticket Escalated. Dear Local Level 1 support team, Could you please assist? Kindly Best regards, 2019-04-17 17:02:14 - Jaime Lannister (Work notes) Assigned to me. Short description and description fields updated. | 2019-04-17 17:19:50 - Varys | not yet assigned | |

| 2019-04-17 16:33:51 - Robert Baratheon (Work notes) Dear Global Level 2 support team, Could you please assist? Thank you for your efforts. Best Regards, 2019-04-17 16:24:10 - Theon Greyjoy (Work notes) Ticket escalated. -e-mail sent to user informing about the escalation. 2019-04-17 16:22:52 - Jaime Lannister (Work notes) Assigned to me. short description and description updated. KA used: No KBA found Troubleshooting: Escalated to Local Level 1 support team Suggested resolver group: Local Level 1 support team Next action: Escalation of the ticket. 2019-04-17 16:02:48 - Viserys Targaryen (Work notes) Assigned to Agent | 2019-04-17 16:33:51 - Robert Baratheon | not yet assigned | |

| 2019-04-16 16:07:34 - Sansa Stark (Work notes) Problems with the POS system. 2019-04-13 11:48:07 - Tyrion Lannister (Work notes) Dear Team, Price of item is incorrect Screenshot attached Could you please assist? Thank you, Kind regards, Local Level 1 support team 2019-04-13 11:32:32 -Khal Drogo (Work notes) Sent mail about escalation Set to L2 2019-04-13 11:31:42 - Melisandre (Work notes) KA used: No KBA Found Troubleshooting: Restarted services, Renamed transfer file, Integrated the transfer file, Restarted WST, Suggested resolver group: Local Level 1 support team Next action: Escalation | 2019-04-13 11:48:07 - Tyrion Lannister | 2019-04-16 16:07:34 - Sansa Stark | Elapsed time between Sansa log and Tyrion log |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello again.

If possible I would prefer to use a power query solution.

Thanks again.

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 143 | |

| 124 | |

| 101 | |

| 80 | |

| 55 |