Join the Fabric User Panel to shape the future of Fabric.

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

Sign up now- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric certified for FREE! Don't miss your chance! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Local Grouping / Index Issues

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

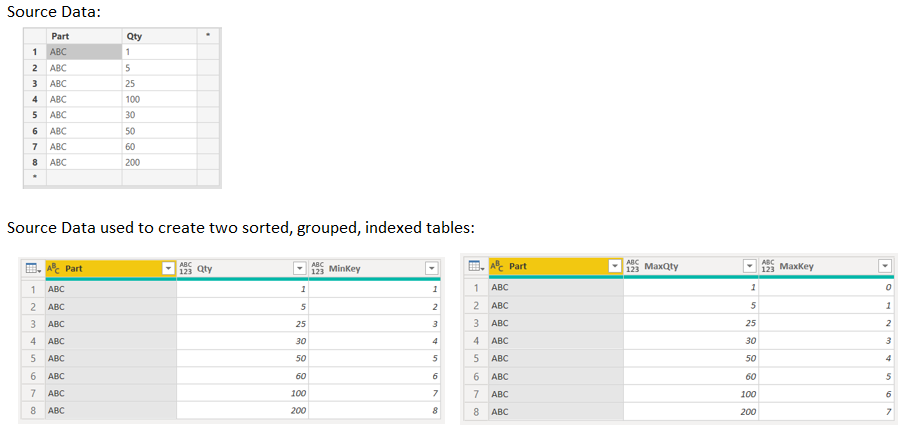

Local Grouping / Index Issues

Hey guys,

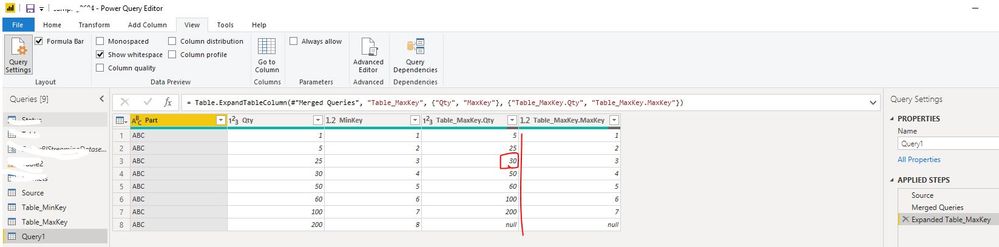

I have out-of-order source data that I'm performing a locally grouped index on. My index persists through every step except for a merge with another table (or, more specifically, the expansion after the merge). As soon as I expand the second table, my index column remains but the data reverts to source order so in effect, the index numbers are now wrong:

If you follow one Index number (i.e. MinKey = 4), you will see that the 4th Qty value is 30 until the final step where it changes to 100 which is incorrect.

To group and index my rows I'm using:

= Table.Group(#"Sorted Rows", {"Part"}, {{"Index Table", each Table.AddIndexColumn(_,"MinKey",1,1)}})= Table.Group(#"Sorted Rows", {"Part"}, {{"Index Table", each Table.AddIndexColumn(_,"MaxKey",0,1)}})

In my actual data there are multiple part numbers so it is essentially a local group/index but the above is a simplified version which still shows the behaviour.

Thank you for your help.

Bekah

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Add the GroupBy & Indexing steps AFTER the merge step, but BEFORE the expanding step. That way the ordering will not be reshuffeld to source data after expanding.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BekahLoSurdo ,

Please try to update the code in group steps as blow:

= Table.Group(#"Sorted Rows", {"Qty"}, {{"Index Table", each Table.AddIndexColumn(_,"MinKey",1,1)}}) |

| = Table.Group(#"Sorted Rows", {"Qty"}, {{"Index Table", each Table.AddIndexColumn(_,"MaxKey",0,1)}}) |

If the above method not working, please provide your expected result with screen shot and explain more details on it. Thank you.

Best Regards

Rena

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous,

If I partition by Qty, each line will have an Index value of 1 instead of the correct 1-8. I am partitioning by Part because I need a local index for each Part in my data. I am working on another example now. Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@BekahLoSurdo can you just simply provide raw sample data in table format and expected output, not sure what you are trying to achieve, it is hard to understand these tiny images. Read this post to get your answer quickly.

https://community.powerbi.com/t5/Community-Blog/How-to-Get-Your-Question-Answered-Quickly/ba-p/38490

Subscribe to the @PowerBIHowTo YT channel for an upcoming video on List and Record functions in Power Query!!

Learn Power BI and Fabric - subscribe to our YT channel - Click here: @PowerBIHowTo

If my solution proved useful, I'd be delighted to receive Kudos. When you put effort into asking a question, it's equally thoughtful to acknowledge and give Kudos to the individual who helped you solve the problem. It's a small gesture that shows appreciation and encouragement! ❤

Did I answer your question? Mark my post as a solution. Proud to be a Super User! Appreciate your Kudos 🙂

Feel free to email me with any of your BI needs.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @parry2k and @Anonymous,

I hope this makes it easy to duplicate.

Enter source data table:

| Part | Qty |

| ABC | 1 |

| ABC | 5 |

| ABC | 25 |

| ABC | 100 |

| ABC | 30 |

| ABC | 50 |

| ABC | 60 |

| ABC | 200 |

Using this Source, make two helper tables (Table_MinKey and Table_MaxKey):

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WcnRyVtJRMlSK1YGxTZHYRsgcQwMDJJ4xMscUmWOGzDECaYoFAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Part = _t, Qty = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Part", type text}, {"Qty", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Part", Order.Ascending}, {"Qty", Order.Ascending}}),

#"Grouped Rows" = Table.Group(#"Sorted Rows", {"Part"}, {{"Index Table", each Table.AddIndexColumn(_,"MinKey",1,1)}}),

#"Expanded Index Table" = Table.ExpandTableColumn(#"Grouped Rows", "Index Table", {"Qty", "MinKey"}, {"Qty", "MinKey"})

in

#"Expanded Index Table"

| Part | Qty | MinKey |

| ABC | 1 | 1 |

| ABC | 5 | 2 |

| ABC | 25 | 3 |

| ABC | 30 | 4 |

| ABC | 50 | 5 |

| ABC | 60 | 6 |

| ABC | 100 | 7 |

| ABC | 200 | 8 |

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WcnRyVtJRMlSK1YGxTZHYRsgcQwMDJJ4xMscUmWOGzDECaYoFAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Part = _t, Qty = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Part", type text}, {"Qty", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Part", Order.Ascending}, {"Qty", Order.Ascending}}),

#"Grouped Rows" = Table.Group(#"Sorted Rows", {"Part"}, {{"Index Table", each Table.AddIndexColumn(_,"MaxKey",0,1)}}),

#"Expanded Index Table" = Table.ExpandTableColumn(#"Grouped Rows", "Index Table", {"Qty", "MaxKey"}, {"MaxQty", "MaxKey"})

in

#"Expanded Index Table"

| Part | MaxQty | MaxKey |

| ABC | 1 | 0 |

| ABC | 5 | 1 |

| ABC | 25 | 2 |

| ABC | 30 | 3 |

| ABC | 50 | 4 |

| ABC | 60 | 5 |

| ABC | 100 | 6 |

| ABC | 200 | 7 |

Finally, combine the two tables:

let

Source = Table_MinKey,

#"Merged Queries" = Table.NestedJoin(Source, {"Part", "MinKey"}, Table_MaxKey, {"Part", "MaxKey"}, "Table_MaxKey", JoinKind.LeftOuter),

#"Expanded Table_MaxKey" = Table.ExpandTableColumn(#"Merged Queries", "Table_MaxKey", {"MaxQty", "MaxKey"}, {"MaxQty", "MaxKey"})

in

#"Expanded Table_MaxKey"

| Part | Qty | MinKey | MaxQty | MaxKey |

| ABC | 1 | 1 | 5 | 1 |

| ABC | 5 | 2 | 25 | 2 |

| ABC | 25 | 3 | 100 | 3 |

| ABC | 100 | 4 | 30 | 4 |

| ABC | 30 | 5 | 50 | 5 |

| ABC | 50 | 6 | 60 | 6 |

| ABC | 60 | 7 | 200 | 7 |

| ABC | 200 | 8 | null | null |

Expected result:

| Part | Qty | MinKey | MaxQty | MaxKey |

| ABC | 1 | 1 | 5 | 1 |

| ABC | 5 | 2 | 25 | 2 |

| ABC | 25 | 3 | 30 | 3 |

| ABC | 30 | 4 | 50 | 4 |

| ABC | 50 | 5 | 60 | 5 |

| ABC | 60 | 6 | 100 | 6 |

| ABC | 100 | 7 | 200 | 7 |

| ABC | 200 | 8 | null | null |

If you "step through" the applied steps in the last table, you can see the data switch from the expected result to the wrong result at the Expanded Table_MaxKey step. How do I avoid that? Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BekahLoSurdo ,

I updated the applied step code for table Table_MinKey ,Table_MaxKey and combine query, it can get the desired result. You can also update your codes to get the desired result based on below codes:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WcnRyVtJRMlSK1YGxTZHYRsgcQwMDJJ4xMscUmWOGzDECaYoFAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Part = _t, Qty = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Part", type text}, {"Qty", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Part", Order.Ascending}, {"Qty", Order.Ascending}}),

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "MinKey", 1, 1)

in

#"Added Index"let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WcnRyVtJRMlSK1YGxTZHYRsgcQwMDJJ4xMscUmWOGzDECaYoFAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Part = _t, Qty = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Part", type text}, {"Qty", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Part", Order.Ascending}, {"Qty", Order.Ascending}}),

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "MaxKey", 0, 1)

in

#"Added Index"let

Source = Table_MinKey,

#"Merged Queries" = Table.NestedJoin(Source, {"Part", "MinKey"}, Table_MaxKey, {"Part", "MaxKey"}, "Table_MaxKey", JoinKind.LeftOuter),

#"Expanded Table_MaxKey" = Table.ExpandTableColumn(#"Merged Queries", "Table_MaxKey", {"Qty", "MaxKey"}, {"Table_MaxKey.Qty", "Table_MaxKey.MaxKey"})

in

#"Expanded Table_MaxKey"Best Regards

Rena

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

Thank you for your effort but I need to group them as I have more than one part number (this was a simplified example, as stated in the original post) so my local index will read 1,2,3,4,5,6,7,1,2,3,1,2,...etc. Unfortunately, it is the grouping and expanding of said group that breaks it which is why your solution is working.

I found a workaround by adding two index columns at the same time to one table, expanding both (essentially making a Cartesian product) and filtering down to where the two indices are equal. It seems like a lot of extra row creation to work around what looks to be a bug in the table expansion step but it works. Thanks for your help!

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WcnRyVtJRMlSK1YGxTZHYRsgcQwMDJJ4xMscUmWOGzDECaYoFAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Part = _t, Qty = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Part", type text}, {"Qty", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Part", Order.Ascending}, {"Qty", Order.Ascending}}),

#"Grouped Rows" = Table.Group(#"Sorted Rows", {"Part"}, {{"Index Min", each Table.AddIndexColumn(_,"MinKey",1,1)},{"Index Max", each Table.AddIndexColumn(_,"MaxKey",0,1)}}),

#"Expanded Index Min" = Table.ExpandTableColumn(#"Grouped Rows", "Index Min", {"Qty", "MinKey"}, {"Qty", "MinKey"}),

#"Expanded Index Max" = Table.ExpandTableColumn(#"Expanded Index Min", "Index Max", {"Qty", "MaxKey"}, {"Qty.1", "MaxKey"}),

#"Filtered Rows" = Table.SelectRows(#"Expanded Index Max", each ([MinKey] = [MaxKey]))

in

#"Filtered Rows"

| Part | Qty | MinKey | Qty.1 | MaxKey | |

| ABC | 1 | 1 | 1 | 0 | <-- Keep this row (MinKey = MaxKey) |

| ABC | 1 | 1 | 5 | 1 | |

| ABC | 1 | 1 | 25 | 2 | |

| ABC | 1 | 1 | 30 | 3 | |

| ABC | 1 | 1 | 50 | 4 | |

| ABC | 1 | 1 | 60 | 5 | |

| ABC | 1 | 1 | 100 | 6 | |

| ABC | 1 | 1 | 200 | 7 | |

| ABC | 5 | 2 | 1 | 0 | |

| ABC | 5 | 2 | 5 | 1 | |

| ABC | 5 | 2 | 25 | 2 | <-- Keep this row (MinKey = MaxKey) |

| ABC | 5 | 2 | 30 | 3 | |

| ABC | 5 | 2 | 50 | 4 | |

| ABC | 5 | 2 | 60 | 5 | |

| ABC | 5 | 2 | 100 | 6 | |

| ABC | 5 | 2 | 200 | 7 | |

| ABC | 25 | 3 | 1 | 0 | |

| ABC | 25 | 3 | 5 | 1 | |

| ABC | 25 | 3 | 25 | 2 | etc. |

Helpful resources

Join our Fabric User Panel

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

| User | Count |

|---|---|

| 63 | |

| 62 | |

| 42 | |

| 19 | |

| 16 |

| User | Count |

|---|---|

| 118 | |

| 106 | |

| 38 | |

| 28 | |

| 27 |