
Learning: Expanded Concurrent Usage

Hi,

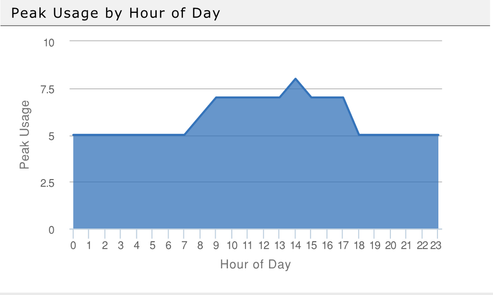

I am trying to find the peak concurrent usage of a specific application.

I know the begin time and end time of each session in which a machine used that application.

However, we do not know which day, or which hour, has the highest peak usage.
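
One rough way to answer the day and hour question is to bucket sessions by clock hour and count the distinct machines that touch each bucket. The sketch below is a minimal Python illustration, not a Power BI feature. It assumes sessions are already parsed into `(machine_id, begin, end)` tuples with `datetime` values, and the function names are hypothetical. Because it counts machines active at any point during the hour, it can overstate the true instantaneous peak (two machines used at 21:00 to 21:10 and 21:50 to 21:59 both count toward the 21:00 bucket).

```python
from collections import defaultdict
from datetime import datetime, timedelta

def machines_per_hour(sessions):
    """Count distinct machines with a session overlapping each clock hour.

    sessions: iterable of (machine_id, begin, end) with datetime values.
    Intervals are treated as closed, so a session ending exactly on the hour
    also counts toward that hour.
    """
    buckets = defaultdict(set)
    for machine_id, begin, end in sessions:
        hour = begin.replace(minute=0, second=0, microsecond=0)
        while hour <= end:
            buckets[hour].add(machine_id)
            hour += timedelta(hours=1)
    return {hour: len(machines) for hour, machines in buckets.items()}

def peak_hour_per_day(counts):
    """For each calendar day, return (busiest hour, machine count).
    Hours are scanned chronologically, so ties keep the earliest hour."""
    best = {}
    for hour, n in sorted(counts.items()):
        day = hour.date()
        if day not in best or n > best[day][1]:
            best[day] = (hour, n)
    return best

if __name__ == "__main__":
    # A few rows from the sample below, already parsed.
    sample = [
        ("2892", datetime(2020, 3, 5, 14, 16), datetime(2020, 3, 5, 23, 1)),
        ("2642", datetime(2020, 3, 5, 15, 10), datetime(2020, 3, 5, 21, 49)),
        ("589", datetime(2020, 3, 5, 21, 47), datetime(2020, 3, 5, 21, 47)),
        ("2464", datetime(2020, 3, 5, 14, 17), datetime(2020, 3, 6, 3, 11)),
    ]
    print(peak_hour_per_day(machines_per_hour(sample)))
```

The busiest day overall is then simply the entry with the largest count in the returned dictionary.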

I am trying to learn how to count the number of machines actively using the program at each point throughout the day, which would produce a graph similar to the attached image.
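
For minute-level precision rather than hourly buckets, a common technique is an event sweep: turn every session into a +1 event at its begin time and a -1 event at its end time, sort the events, and keep a running total. The Python sketch below assumes sessions are `(begin, end)` `datetime` pairs and that each machine's overlapping sessions have already been merged (otherwise one machine can be counted twice); `concurrency_curve` is a hypothetical helper name.

```python
from datetime import datetime

def concurrency_curve(sessions):
    """Sweep over (begin, end) datetime pairs and return (curve, peak, peak_time).

    curve is a list of (time, active_count) recorded after each event.
    Start events sort before end events at the same timestamp, so intervals
    are treated as closed: a session with begin == end still counts as one
    active user at that instant, and back-to-back sessions briefly overlap.
    """
    events = []
    for begin, end in sessions:
        events.append((begin, 0, 1))   # 0 sorts before 1: starts come first
        events.append((end, 1, -1))
    events.sort()

    active, peak, peak_time = 0, 0, None
    curve = []
    for time, _, delta in events:
        active += delta
        curve.append((time, active))
        if active > peak:
            peak, peak_time = active, time
    return curve, peak, peak_time
```

Plotting `curve` as a step chart should give a concurrent-users-over-time shape like the one in the image, and `peak_time` marks the first moment the maximum is reached.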

I have a sample of data like this:

| luid | machineid | begin_time | end_time |
|---|---|---|---|
| 2100 | 589 | 3/5/2020 21:47 | 3/5/2020 21:47 |
| 2100 | 2642 | 3/5/2020 15:10 | 3/5/2020 21:49 |
| 2100 | 589 | 3/5/2020 21:51 | 3/5/2020 21:51 |
| 2100 | 2634 | 3/5/2020 19:37 | 3/5/2020 22:30 |
| 2100 | 2904 | 3/5/2020 14:51 | 3/5/2020 22:54 |
| 2100 | 589 | 3/5/2020 22:13 | 3/5/2020 22:15 |
| 2100 | 589 | 3/5/2020 23:54 | 3/5/2020 23:55 |
| 2100 | 2930 | 3/5/2020 20:06 | 3/5/2020 23:57 |
| 2100 | 589 | 3/5/2020 23:57 | 3/6/2020 0:02 |
| 2100 | 589 | 3/6/2020 0:04 | 3/6/2020 0:04 |
| 2100 | 589 | 3/6/2020 0:06 | 3/6/2020 0:06 |
| 2100 | 2892 | 3/5/2020 14:16 | 3/5/2020 23:01 |
| 2100 | 2464 | 3/5/2020 14:17 | 3/6/2020 3:11 |
| 2100 | 2634 | 3/5/2020 19:02 | 3/5/2020 19:09 |
| 2100 | 2634 | 3/5/2020 19:21 | 3/5/2020 19:21 |
| 2100 | 589 | 3/5/2020 19:22 | 3/5/2020 19:22 |
| 2100 | 589 | 3/5/2020 19:24 | 3/5/2020 19:25 |
| 2100 | 589 | 3/5/2020 19:25 | 3/5/2020 19:25 |
| 2100 | 589 | 3/5/2020 19:25 | 3/5/2020 19:25 |
| 2100 | 2593 | 3/6/2020 12:03 | 3/6/2020 13:45 |
| 2100 | 2642 | 3/5/2020 15:29 | 3/6/2020 13:46 |
| 2100 | 2892 | 3/5/2020 15:35 | 3/6/2020 13:56 |
| 2100 | 2642 | 3/6/2020 13:54 | 3/6/2020 13:58 |
| 2100 | 2892 | 3/6/2020 14:58 | |
| 2100 | 589 | 3/6/2020 15:43 | |
| 2100 | 2634 | 3/6/2020 15:46 | |
| 2100 | 2838 | 3/6/2020 14:49 | 3/6/2020 14:50 |
| 2100 | 2904 | 3/6/2020 14:52 | 3/6/2020 14:52 |
| 2100 | 2904 | 3/6/2020 14:53 | 3/6/2020 14:53 |
| 2100 | 2506 | 3/6/2020 14:53 | 3/6/2020 14:55 |
| 2100 | 2506 | 3/6/2020 14:57 | 3/6/2020 14:57 |
| 2100 | 2838 | 3/6/2020 14:58 | 3/6/2020 14:58 |
| 2100 | 2506 | 3/6/2020 14:57 | 3/6/2020 14:59 |
| 2100 | 2838 | 3/6/2020 14:58 | 3/6/2020 15:01 |
| 2100 | 2838 | 3/6/2020 15:24 | 3/6/2020 15:24 |
| 2099 | 2517 | 3/5/2020 21:28 | 3/5/2020 21:33 |
| 2099 | 1833 | 3/5/2020 21:48 | 3/5/2020 21:48 |
| 2099 | 1833 | 3/5/2020 21:51 | 3/5/2020 21:52 |
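
Two details in this sample affect the count: some machines have overlapping sessions (for example machine 2642 from 3/5/2020 15:10 to 21:49 and again from 15:29 to 3/6/2020 13:46), and three rows have no end_time yet. A minimal Python sketch for cleaning the rows before counting is below. It assumes that `luid` identifies the application, that a blank end_time means the session is still running and can be closed at a chosen cutoff (`open_until`, a hypothetical parameter), and that timestamps follow the month/day/year format shown; the function names are also hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Matches the sample's "3/5/2020 21:47"; strptime accepts values without zero padding.
TIME_FORMAT = "%m/%d/%Y %H:%M"

def parse_sessions(rows, luid, open_until):
    """Group one application's sessions by machine.

    rows: (luid, machineid, begin_time, end_time) string tuples, as in the sample.
    Assumption: luid identifies the application, and a blank end_time means the
    session is still open, so it is closed at open_until (or at begin, if later).
    """
    by_machine = defaultdict(list)
    for row_luid, machine_id, begin_text, end_text in rows:
        if row_luid != luid:
            continue
        begin = datetime.strptime(begin_text, TIME_FORMAT)
        if end_text.strip():
            end = datetime.strptime(end_text, TIME_FORMAT)
        else:
            end = max(open_until, begin)
        by_machine[machine_id].append((begin, end))
    return by_machine

def merge_per_machine(by_machine):
    """Merge each machine's overlapping or touching sessions, so a machine is
    counted at most once at any instant. Returns (machine_id, begin, end) tuples."""
    merged = []
    for machine_id, spans in by_machine.items():
        spans = sorted(spans)
        cur_begin, cur_end = spans[0]
        for begin, end in spans[1:]:
            if begin <= cur_end:
                cur_end = max(cur_end, end)
            else:
                merged.append((machine_id, cur_begin, cur_end))
                cur_begin, cur_end = begin, end
        merged.append((machine_id, cur_begin, cur_end))
    return merged
```

Each resulting tuple is one continuous stretch of use by one machine, which is the unit a "machines in use" count should be run on.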


Hi, @Anonymous

My browser is not happy with the link you pasted, not happy at all: it throws a lot of security warnings. Is there somewhere else you could share your file?

Cheers,

Sturla


Sure thing, thanks for letting me know! My full dataset was much larger than the 20k-character limit, so I edited my original post to include a smaller sample of the data instead.
