How to find all tables used in .pbix?

I have a .pbix with a live connection to an SSAS cube that has 50 tables.

The .pbix has 7 pages and several visuals/KPIs per page...

I need to know all the tables used in the .pbix (my guess is that only 8 of the 50 tables from the SSAS cube are used).

Is there a way of doing this other than clicking every visual and checking the "ticks" on the right?


Hi @Anonymous ,

You can use SQL Server Profiler to track the tables used in Power BI. This assumes that your SSAS database can be accessed through SQL Server.

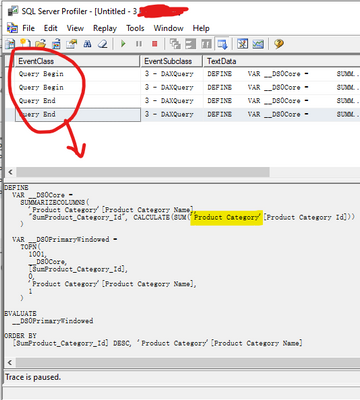

(1) Refresh the report in Power BI Desktop. The queries are sent to SSAS, so SQL Server Profiler will record them. (I have two visuals in the report.)

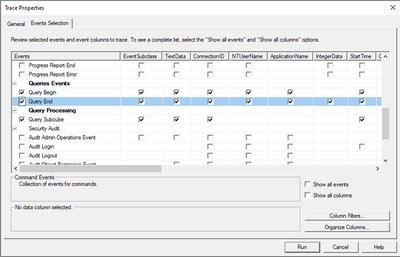

(2) In Events Selection, select all events related to Query, then run the trace.

(3) You will then get the details of each query, which contain the names of the tables the query uses.

Best Regards

Community Support Team _ Ailsa Tao

If this post helps, please consider accepting it as the solution to help other members find it more quickly.
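Once the trace has captured the queries, the table names can be pulled out of the query text programmatically rather than read by eye. The following is a minimal sketch, assuming the captured queries are DAX and have been copied out of Profiler as plain strings. The function names `tables_in_dax` and `tables_in_trace` and the sample query are illustrative only, and the regular expressions are heuristics: they only catch tables that appear in a `Table[Column]` reference.

```python
import re

# Heuristic patterns (assumptions, not a DAX parser):
#   'Table Name'[Column]  -> quoted table reference
#   TableName[Column]     -> unquoted table reference
_QUOTED = re.compile(r"'((?:[^']|'')+)'\s*\[")
_UNQUOTED = re.compile(r"(?<![\w'\]])([A-Za-z_]\w*)\[")


def tables_in_dax(query_text):
    """Return the set of table names referenced as Table[Column] in one query."""
    # A doubled apostrophe inside a quoted name stands for a single apostrophe.
    names = {name.replace("''", "'") for name in _QUOTED.findall(query_text)}
    names.update(_UNQUOTED.findall(query_text))
    return names


def tables_in_trace(queries):
    """Union of the tables referenced across all captured queries."""
    found = set()
    for query_text in queries:
        found |= tables_in_dax(query_text)
    return found


if __name__ == "__main__":
    # Hypothetical query text, shaped like what a visual might send.
    sample = (
        "EVALUATE TOPN(502, SUMMARIZECOLUMNS('Date'[Year], "
        "\"SumAmount\", CALCULATE(SUM('Internet Sales'[Amount]))), [SumAmount], 0)"
    )
    print(sorted(tables_in_trace([sample])))
```

Tables that a query mentions only by bare name (for example as the first argument of `FILTER`) are not picked up by this sketch, so treat the result as a lower bound.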


For anyone who encounters the same problem: as of 2025, you can use this Python library to automatically list all table names (as well as other useful metadata) from a report (.pbix file):

https://github.com/Hugoberry/pbixray

    from pbixray import PBIXRay

    model = PBIXRay('path/to/your/file.pbix')

    # Table names as they appear in the report; these may differ from the actual table names in the database
    tables = model.tables
    print(tables)

    # To get the actual table names from the database, you could extract them from the Power Query expressions using regex
    power_query = model.power_query
    print(power_query)
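The regex step mentioned above could look something like the sketch below. It assumes the Power Query (M) expressions navigate to a source table with the common `Source{[Schema="...",Item="..."]}[Data]` pattern; other connectors and hand-written native queries will not match. The function name `source_tables` and the sample expression are hypothetical.

```python
import re

# Matches M navigation steps such as {[Schema="dbo",Item="FactSales"]}
# or {[Item="Customers"]}. This pattern is an assumption about how the
# queries were authored, not a full M parser.
_NAV_STEP = re.compile(
    r'\{\[\s*(?:Schema\s*=\s*"(?P<schema>[^"]*)"\s*,\s*)?'
    r'Item\s*=\s*"(?P<item>[^"]*)"\s*\]\}'
)


def source_tables(m_expression):
    """Return source table names found in one M expression, in order."""
    found = []
    for match in _NAV_STEP.finditer(m_expression):
        schema, item = match.group("schema"), match.group("item")
        found.append(f"{schema}.{item}" if schema else item)
    return found


if __name__ == "__main__":
    # Hypothetical M expression for illustration.
    sample = (
        'let\n'
        '    Source = Sql.Database("srv", "dw"),\n'
        '    dbo_FactSales = Source{[Schema="dbo",Item="FactSales"]}[Data]\n'
        'in\n'
        '    dbo_FactSales'
    )
    print(source_tables(sample))
```

To use it with the library, pass each M expression from `model.power_query` to `source_tables`; how the expressions are laid out in that result may vary by version, so inspect the printed output first.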


Hi all,

Based on @Askrr

To use, unzip the PBIX file and update the location of the Layout file in the code below.

Haven't spent much time on this, so use at your own risk 🙂

    import json


    def print_value_projections(config):
        # Print the queryRef ("Table.Field") of every field in the visual's Values well.
        for projection in config['singleVisual']['projections']['Values']:
            print(projection['queryRef'])


    def process_textbox(config):
        # Text boxes reference the model only through embedded dynamic values.
        for obj in config['singleVisual']['objects'].get('values', []):
            expr = obj['properties']['expr']['expr']
            if 'Aggregation' in expr:
                subquery = expr['Aggregation']['Expression']['Column']['Expression']['Subquery']
                for measure in subquery['Query']['Select']:
                    print(measure['Name'])


    # Visual types handled by this script; other types are only listed by name.
    HANDLERS = {
        'card': print_value_projections,
        'textbox': process_textbox,
        'slicer': print_value_projections,
        'pivotTable': print_value_projections,
        'tableEx': print_value_projections,
    }

    # Path to the Layout file extracted from the unzipped .pbix.
    filepath = r"Layout"

    with open(filepath, "r", encoding='utf-16-le') as f:
        data = json.load(f)

    pages = data['sections']
    print(f"Total Page count: {len(pages)}\n")

    for page in pages:
        print(f"Page: {page['displayName']}")
        visual_containers = page['visualContainers']
        print(f"Visuals on page: {len(visual_containers)}")
        for visual_container in visual_containers:
            # Each container stores its visual definition as a JSON string.
            config = json.loads(visual_container['config'])
            if 'singleVisual' in config:
                visual_type = config['singleVisual']['visualType']
                print(f"\tVisualisation Type: {visual_type}")
                handler = HANDLERS.get(visual_type)
                if handler:
                    handler(config)

Kind regards,

Ben.
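A variation on the script above skips the manual unzip and collects distinct table names for every visual type at once. This is a minimal sketch, assuming the .pbix uses the legacy report format with a `Report/Layout` entry encoded as UTF-16 LE, and that each visual's config carries a `prototypeQuery` whose `From` list names the model tables under `Entity`. Those key names should be verified against your own file, and the helper names (`load_layout`, `tables_by_page`, `all_tables`) are hypothetical.

```python
import json
import zipfile


def load_layout(pbix_path):
    """Read the report layout JSON straight from a .pbix (a zip archive)."""
    with zipfile.ZipFile(pbix_path) as archive:
        raw = archive.read("Report/Layout")
    # Drop a byte-order mark if one is present before parsing.
    return json.loads(raw.decode("utf-16-le").lstrip("\ufeff"))


def tables_by_page(layout):
    """Map each page name to the set of model tables its visuals query."""
    result = {}
    for page in layout.get("sections", []):
        tables = set()
        for container in page.get("visualContainers", []):
            # The visual definition is stored as a JSON string.
            config = json.loads(container.get("config", "{}"))
            query = config.get("singleVisual", {}).get("prototypeQuery", {})
            for source in query.get("From", []):
                if "Entity" in source:
                    tables.add(source["Entity"])
        result[page.get("displayName", "")] = tables
    return result


def all_tables(layout):
    """Sorted list of every table referenced by any visual in the report."""
    pages = tables_by_page(layout)
    return sorted(set().union(*pages.values()))


if __name__ == "__main__":
    # Placeholder path; replace with the location of your own report.
    layout = load_layout("path/to/your/file.pbix")
    for page_name, tables in tables_by_page(layout).items():
        print(page_name, sorted(tables))
    print("All tables:", all_tables(layout))
```

This sketch only looks at visual queries. Tables referenced solely by page-level or report-level filters, or by text box dynamic values, would need separate handling.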


I recently ran into this same issue (400+ reports and 300+ tables in my workspace) and couldn't find a simple way to determine which reports use which tables. I wrote a simple Python script to help with this. I posted it on my GitHub and hope that someone else who searches these forums finds it useful. Directions for it are in the README.


Hi @Askrr, your solution seems really handy (I haven't tried it, only looked through the code). It would be good to enhance it so that we can enter a report name and it finds all the dependent tables / entities.

Kind regards,

Ben.


Which cloud were you using? I'm using GCP. Do you think this could be a good method for finding those tables? The number of dashboards I'm using is enormous.


I run this locally, but it could be modified to point to cloud storage as well (GCP, AWS, Azure, etc.) and do the same thing. However, you would need write permissions as part of your IAM policy in GCP if you were to use it that way.


Hi @Anonymous

For this you would need to install DAX Studio. Once it is installed, try the following steps:

- Launch your Power BI report

- Launch DAX Studio and select your model

- Run this query:

SELECT * FROM $System.DBSCHEMA_TABLES WHERE TABLE_TYPE='SYSTEM TABLE'

Did it work? Mark it as a solution to help spread knowledge.

A kudos would be appreciated 🙂


Hi @Anonymous

If it worked please don't forget to accept it as a solution 👍


Once I open the model, the query returns all 50 tables in the model and not just the ones used in the .pbix...


Actually, you will have to dig deeper into that result table; here you will find the steps to follow in order to identify the tables used or referenced in your report.
