FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Calling all Data Engineers! Fabric Data Engineer (Exam DP-700) live sessions are back! Starting October 16th. Sign up.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Help simplifying my query as its running out o...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Help simplifying my query as its running out of memory

Hi

I need help simplyfiying my query. Error message "There's not enough memory to complete this operation. Please try again later when there may be more memory available."

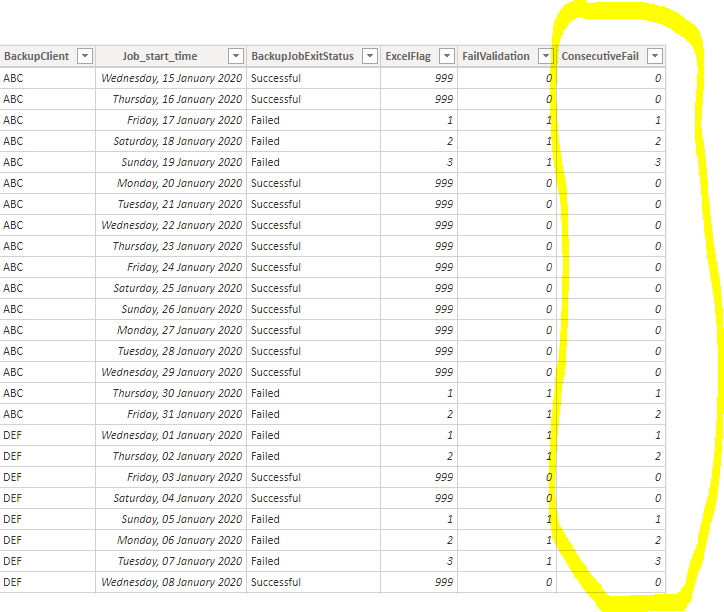

The below ConsecutiveFail indicator i am creating is on over 6 million records where it is returning failed consecutive backups:

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

no need to open a file. Simply paste the following code into the advanced editor of a new query and adjust to the location of your Excel-file:

let

Source = Excel.Workbook(

File.Contents("C:\Users\xxxYourFilePath...\continousStreakRank.xlsx"),

null,

true

),

Sheet1_Sheet = Source{[Item = "Sheet1", Kind = "Sheet"]}[Data],

#"Promoted Headers" = Table.PromoteHeaders(Sheet1_Sheet, [PromoteAllScalars = true]),

#"Changed Type" = Table.TransformColumnTypes(

#"Promoted Headers",

{

{"BackupClient", type text},

{"Job_start_time", type date},

{"BackupJobExitStatus", type text},

{"Fail Validation", Int64.Type},

{"Needed", Int64.Type}

}

),

GroupWithGroupKindLocal = Table.Group(

#"Changed Type",

{"BackupClient", "BackupJobExitStatus"},

{{"PartitionLocal", each Table.AddIndexColumn(_, "PQ", 1, 1)}},

GroupKind.Local

),

ExpandColumns = Table.ExpandTableColumn(

GroupWithGroupKindLocal,

"PartitionLocal",

{"Job_start_time", "Fail Validation", "Needed", "PQ"},

{"Job_start_time", "Fail Validation", "Needed", "PQ"}

),

ReplaceNonFailed = Table.ReplaceValue(

ExpandColumns,

each [PQ],

each if [Fail Validation] = 0 then 0 else [PQ],

Replacer.ReplaceValue,

{"PQ"}

)

in

ReplaceNonFailedYou'll find some instructions for it in the links in my footnotes.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@dmakan , Can paste the same data in table format. Also, Can I assume all dates are there? To make it fast I am thinking of using

date = earlier(Date) -1

That means we need all consecutive dates are there

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

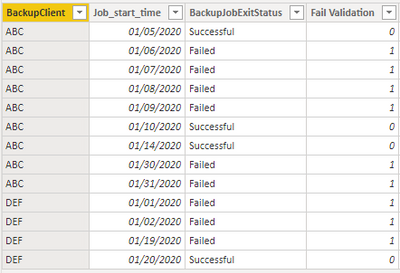

Hi @amitchandak

There may be missing dates as sometimes backups dont run like the below table:

Again, the last column is my result output i require.

| BackupClient | Job_start_time | BackupJobExitStatus | Fail Validation | |

| ABC | 05-Jan-20 | Successful | 0 | 0 |

| ABC | 06-Jan-20 | Failed | 1 | 1 |

| ABC | 07-Jan-20 | Failed | 1 | 2 |

| ABC | 08-Jan-20 | Failed | 1 | 3 |

| ABC | 09-Jan-20 | Failed | 1 | 4 |

| ABC | 10-Jan-20 | Successful | 0 | 0 |

| ABC | 14-Jan-20 | Successful | 0 | 0 |

| ABC | 30-Jan-20 | Failed | 1 | 1 |

| ABC | 31-Jan-20 | Failed | 1 | 2 |

| DEF | 01-Jan-20 | Failed | 1 | 1 |

| DEF | 02-Jan-20 | Failed | 1 | 2 |

| DEF | 19-Jan-20 | Failed | 1 | 3 |

| DEF | 20-Jan-20 | Successful | 0 | 0 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @dmakan

Based on your description, I created data to reproduce your scenario. The pbix file is attached in the end.

Table:

You may create a calculated column as below.

Result =

var _client = 'Table'[BackupClient]

var _starttime = 'Table'[Job_start_time]

var _failvalidation = 'Table'[Fail Validation]

var _lastsuccesttdate =

CALCULATE(

MAX('Table'[Job_start_time]),

FILTER(

ALL('Table'),

'Table'[BackupClient] = _client&&

'Table'[Job_start_time]<_starttime&&

'Table'[Fail Validation] = 0

)

)

return

IF(

_failvalidation = 0,

0,

IF(

_failvalidation = 1,

CALCULATE(

DISTINCTCOUNT('Table'[Job_start_time]),

FILTER(

ALL('Table'),

'Table'[BackupClient] = _client&&

'Table'[Job_start_time]>_lastsuccesttdate&&

'Table'[Job_start_time]<=_starttime

)

)

)

)

Result:

Best Regards

Allan

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @v-alq-msft

Thanks for the help. This has resoloved my issue if run against the sample data. I am still getting an "out of memory error when applying to the original dataset which is over 6 million records. Is there any way i can create a function within edit queries or apply this to the T-SQL query in the import step?

Regards,

Dipesh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

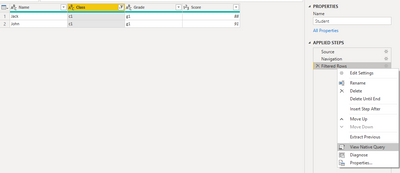

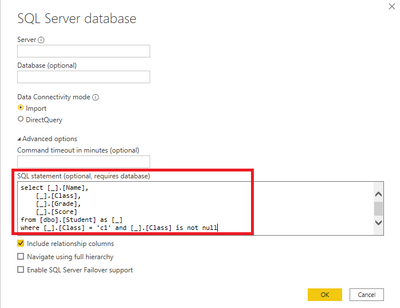

Hi, @dmakan

You may go to 'Query Editor', right-click the step you want to apply when connecting to the database, click 'View Native Query', copy the codes.

Then you need to connect to the same database with the codes.

Result:

Best Regards

Allan

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@dmakan , Please find the attached pbix after the signature. I doubt it faster then what you have done. But you can check and tell. I created two columns to get this.

Please let me know your findings.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @amitchandak

This solution seems to work witout the memory error i was getting previously.

The only issue is that it is now counting multiple failures per day. I assume that the backup application retries again after failed attempts which are being logged in the data. Any ideas how i could get around this?

Thanks for the help!

Regards,

Dipesh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

I expect this kind of operation to be faster in the query editor (also see attached file):

let

Source = Table.FromRows(

Json.Document(

Binary.Decompress(

Binary.FromText(

"i45WcnRyVtJRMjBV8ErMK00sqlQwMjAyAIoElyYnpxYXp5XmgKShGEzF6sB1maHrckvMzElNATIModgITCNpMcerxQiOkbRY4NViDNVijKzFEq8WE6gWEyQthgbkhIChCTm6jDHswgg3E7RwMzYkGG4m8HBzcXUD2YhfCwIjaTEiaIshmi2G+IPaGE4jtBiREtSxAA==",

BinaryEncoding.Base64

),

Compression.Deflate

)

),

let

_t = ((type text) meta [Serialized.Text = true])

in

type table[

BackupClient = _t,

Job_start_time = _t,

BackupJobExitStatus = _t,

#"Fail Validation" = _t,

Needed = _t,

part = _t,

#"final Needed" = _t

]

),

#"Changed Type" = Table.TransformColumnTypes(

Source,

{

{"BackupClient", type text},

{"Job_start_time", type date},

{"BackupJobExitStatus", type text},

{"Fail Validation", Int64.Type},

{"Needed", Int64.Type},

{"part", Int64.Type},

{"final Needed", Int64.Type}

}

),

GroupWithGroupKindLocal = Table.Group(

#"Changed Type",

{"BackupClient", "BackupJobExitStatus"},

{{"PartitionLocal", each Table.AddIndexColumn(_, "PQ", 1, 1)}},

GroupKind.Local

),

ExpandColumns = Table.ExpandTableColumn(

GroupWithGroupKindLocal,

"PartitionLocal",

{"Job_start_time", "Fail Validation", "Needed", "PQ"}

),

ReplaceNonFailed = Table.ReplaceValue(

ExpandColumns,

each [PQ],

each if [Fail Validation] = 0 then 0 else [PQ],

Replacer.ReplaceValue,

{"PQ"}

)

in

ReplaceNonFailed

First I create a nested index ( https://www.youtube.com/watch?v=-3KFZaYImEY ) - but with a twist that it resets after every change in the column "Fail Validation". Using the 4th parameter in the GroupWithGroupKindLocal-step does that for me ( https://blog.crossjoin.co.uk/2014/01/03/aggregating-by-local-groups-in-power-query/ ). Both elements are essential to realize the performance improvement. (Further reading on this here: https://www.thebiccountant.com/2017/05/29/performance-tip-partition-tables-crossjoins-possible-power... )

Then I replace these nested indices by 0 for those rows, where the validation didn't fail ( https://www.thebiccountant.com/2017/07/23/transforming-a-column-with-values-from-another-column-in-p... ) ... but that's not essential here, you could just have added another column with a condition and removed the old one.

Problem with the DAX-solution it that it has to reference the whole table in each row, while my PQ-logic will chunk up the table first (using the group-algorithms) before applying the expensive operation (that's what's EARLIER doing in DAX): Here, just adding an index-column.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi ImkeF

I would like to incoporate this Logic to my excel source.

I tried to download you pbi file but doesn't Open: "We weren't able to restore the saved dtabase model"

Could you advise on how to incorporate the logic to an excel source.

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

no need to open a file. Simply paste the following code into the advanced editor of a new query and adjust to the location of your Excel-file:

let

Source = Excel.Workbook(

File.Contents("C:\Users\xxxYourFilePath...\continousStreakRank.xlsx"),

null,

true

),

Sheet1_Sheet = Source{[Item = "Sheet1", Kind = "Sheet"]}[Data],

#"Promoted Headers" = Table.PromoteHeaders(Sheet1_Sheet, [PromoteAllScalars = true]),

#"Changed Type" = Table.TransformColumnTypes(

#"Promoted Headers",

{

{"BackupClient", type text},

{"Job_start_time", type date},

{"BackupJobExitStatus", type text},

{"Fail Validation", Int64.Type},

{"Needed", Int64.Type}

}

),

GroupWithGroupKindLocal = Table.Group(

#"Changed Type",

{"BackupClient", "BackupJobExitStatus"},

{{"PartitionLocal", each Table.AddIndexColumn(_, "PQ", 1, 1)}},

GroupKind.Local

),

ExpandColumns = Table.ExpandTableColumn(

GroupWithGroupKindLocal,

"PartitionLocal",

{"Job_start_time", "Fail Validation", "Needed", "PQ"},

{"Job_start_time", "Fail Validation", "Needed", "PQ"}

),

ReplaceNonFailed = Table.ReplaceValue(

ExpandColumns,

each [PQ],

each if [Fail Validation] = 0 then 0 else [PQ],

Replacer.ReplaceValue,

{"PQ"}

)

in

ReplaceNonFailedYou'll find some instructions for it in the links in my footnotes.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks works 100%

Now need to convert this to SQL Source.

😁

Helpful resources

FabCon Global Hackathon

Join the Fabric FabCon Global Hackathon—running virtually through Nov 3. Open to all skill levels. $10,000 in prizes!

Power BI Monthly Update - September 2025

Check out the September 2025 Power BI update to learn about new features.