Fabric Data Days starts November 4th!

Advance your Data & AI career with 50 days of live learning, dataviz contests, hands-on challenges, study groups & certifications and more!

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: (Help) Working with large sets of multi dimens...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

(Help) Working with large sets of multi dimensional JSON files from DocumentDB (Cosmos DB)

Hello

I'm currently trying to analyse quite a large set of multi dimensional JSON documents from a DocumentDB source.

Because of the not so flat documents I'm trying to split it up in multiple tables (simple example below):

{

"id": 123,

"success": false,

"data": {

"stats": {

"data1": 123,

"data2": 123,

"data3": 123,

"data4": 123,

"data5": 123

}

},

"tests":[

{

"data": "item1"

},

{

"data": "item2"

}

]

}In this particular example, I would like to split the document up in 3 related tables, with id as key.

"Record" with id and the success coulumn.

"Stats" with id and data1 to data5

"Tests" with id and data

The problem I'm having is that for each table it queries the database, witch takes quite a while.

What I have tried to do is make one query and then create the tables by referencing the one query, but it seems that it still wants to make one query for each table.

Is there something I have missed, or is there a mutch better and faster way to do this?

Any help is greatly appreciated!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @otjena,

You can refer to below steps to manual analysis these data and split them to new tables:

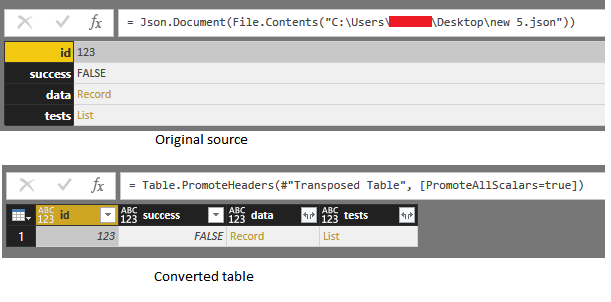

1. Convert json source to table.

Query:

let

Source = Json.Document(File.Contents("C:\Users\xxxxx\Desktop\new 5.json")),

#"Converted to Table" = Record.ToTable(Source),

#"Transposed Table" = Table.Transpose(#"Converted to Table"),

#"Promoted Headers" = Table.PromoteHeaders(#"Transposed Table", [PromoteAllScalars=true])

in

#"Promoted Headers"

2. Use Table.SelectColumns function to create the split tables.

3. Analysis and expand these data.

Full query:

Record:

let

Source = Table.SelectColumns(SourceTable,{"id","success"})

in

Source

Stats:

let

Source = Table.SelectColumns(SourceTable,{"id","data"}),

#"Added Custom" = Table.SelectColumns(Table.AddColumn(Source, "stats", each Record.FieldValues([data][stats])),{"id","stats"}),

#"Expanded stats" = Table.ExpandListColumn(#"Added Custom", "stats")

in

#"Expanded stats"

Tests:

let

Source = Table.SelectColumns(SourceTable,{"id","tests"}),

#"Expanded tests" = Table.ExpandRecordColumn(Table.ExpandListColumn(Source, "tests"), "tests", {"data"}, {"data"})

in

#"Expanded tests"

Regards,

Xiaoxin Sheng

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @otjena,

You can refer to below steps to manual analysis these data and split them to new tables:

1. Convert json source to table.

Query:

let

Source = Json.Document(File.Contents("C:\Users\xxxxx\Desktop\new 5.json")),

#"Converted to Table" = Record.ToTable(Source),

#"Transposed Table" = Table.Transpose(#"Converted to Table"),

#"Promoted Headers" = Table.PromoteHeaders(#"Transposed Table", [PromoteAllScalars=true])

in

#"Promoted Headers"

2. Use Table.SelectColumns function to create the split tables.

3. Analysis and expand these data.

Full query:

Record:

let

Source = Table.SelectColumns(SourceTable,{"id","success"})

in

Source

Stats:

let

Source = Table.SelectColumns(SourceTable,{"id","data"}),

#"Added Custom" = Table.SelectColumns(Table.AddColumn(Source, "stats", each Record.FieldValues([data][stats])),{"id","stats"}),

#"Expanded stats" = Table.ExpandListColumn(#"Added Custom", "stats")

in

#"Expanded stats"

Tests:

let

Source = Table.SelectColumns(SourceTable,{"id","tests"}),

#"Expanded tests" = Table.ExpandRecordColumn(Table.ExpandListColumn(Source, "tests"), "tests", {"data"}, {"data"})

in

#"Expanded tests"

Regards,

Xiaoxin Sheng

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous

First, thank you for your help, great guide and very easy to follow!

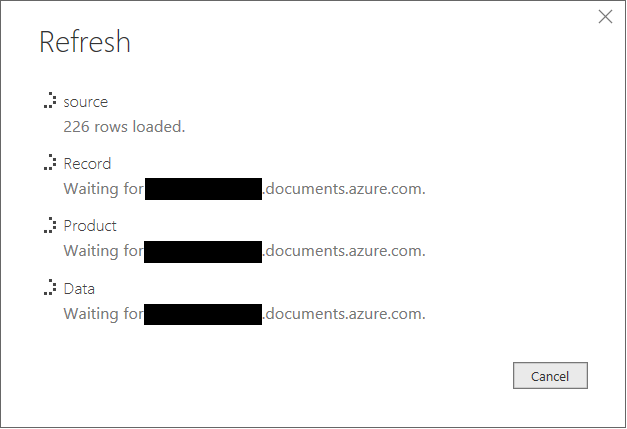

When I do it this way, and all other ways I can think of it seems that all of the tables actually queries my source. (see below)

Is it actually query the documentDB for each table or is it missleading? (it feels quite a bit slower than just running one table)

Best regards

Otjena

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

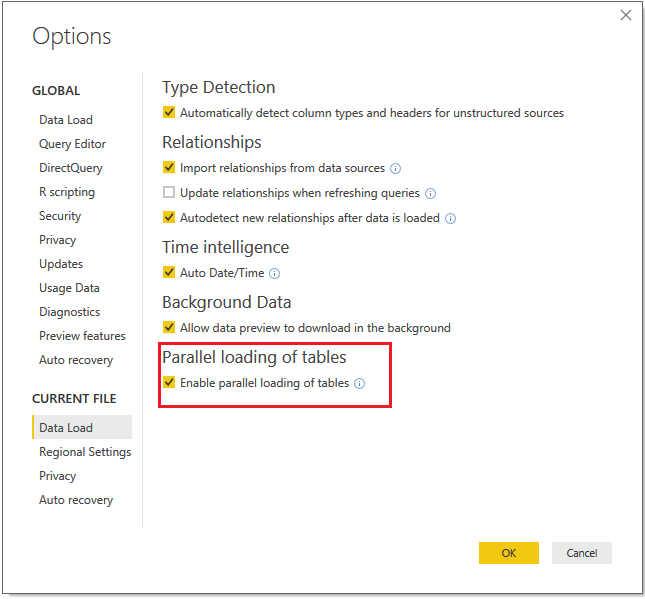

Hi @otjena,

I'd like to suggest you turn on the "paralla loading of tables" feature, it may increase the refresh preformance.

Notice: all of expand tables are based on source table, so these tables will waiting for refreshing of source table .

Regards,

Xiaoxin Sheng

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous

That one was already active, but thanks for the tip!

It might be as you say, that the oter tables is in fact waiting for the source one and I am misslead to beleave that they all contact the database.

I will mark this one as answered, thanks for all the help you have been giving me!

Regards

Otjena

Helpful resources

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

Power BI Monthly Update - October 2025

Check out the October 2025 Power BI update to learn about new features.