FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Grouped Average

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Grouped Average

Hey,

I need to get the sum amount values (per Group and per Month and per Week_Timestamp)

I am just not able to get the values as "grouped". Hope this make sense.

Please ask if there is anything not clear.

Sample of what I have,

| Group | Update_Overdue | Month | Week_Timestamp |

| Group1 | 0 | 1 Month | 1 |

| Group1 | 0 | 1 Month | 1 |

| Group1 | 0 | 1 Month | 1 |

| Group1 | 1 | 2 Months | 1 |

| Group2 | 1 | 3Months | 1 |

| Group2 | 1 | 2 Months | 1 |

| Group2 | 1 | 3Months | 1 |

| Group3 | 0 | 2 Months | 1 |

| Group3 | 1 | 3Months | 1 |

| Group3 | 0 | 1 Month | 1 |

| Group3 | 0 | 1 Month | 1 |

| Group3 | 1 | 1 Month | 1 |

| Group3 | 1 | 2 Months | 1 |

| Group2 | 1 | 3Months | 2 |

| Group1 | 0 | 2 Months | 2 |

| Group3 | 0 | 3Months | 2 |

| Group3 | 1 | 2 Months | 2 |

| Group1 | 1 | 3Months | 2 |

And need it like this

| Group | Month | Count | Week_Timestamp |

| Group1 | 1 Month | 3 | 1 |

| Group1 | 2 Months | 1 | 1 |

| Group2 | 2 Months | 1 | 1 |

| Group2 | 3Months | 2 | 1 |

| Group3 | 1 Month | 3 | 1 |

| Group3 | 2 Months | 2 | 1 |

| Group3 | 3Months | 1 | 1 |

| Group1 | 2 Months | 1 | 2 |

| Group1 | 3Months | 1 | 2 |

| Group2 | 3Months | 1 | 2 |

| Group3 | 2 Months | 1 | 2 |

| Group3 | 3Months | 1 | 2 |

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a little rule that says if you have the option of doing it in TSQL before you get to Power BI then thats usually better since the model will be faster but assuming you cant do that:

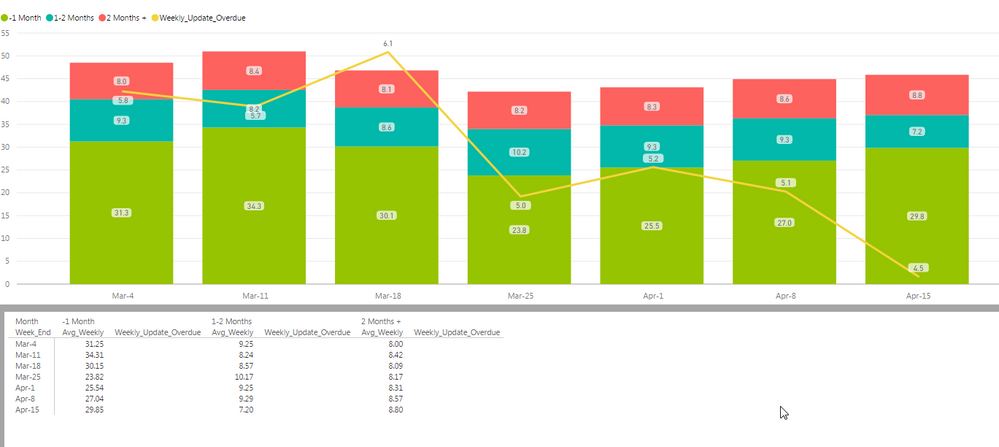

so you've mentioned a COUNT a SUM and an AVERAGE but It looks like you just use Average - is that like a daily average? so in the first column you had on average 31.3 items overdue each day?

You could just build one measure the calculates "Daily Average"

Something like

Overdue Items = COUNTROW(Table)

Days = DISTINCTCOUNT(Table[Date]) -- note but be a date not a datetime

Daily Average = DIVIDE([Count of Overdue],[Days],0)

Then you put that measure in Values, Week_End in the Axis, Group in the Legend.

for each Week and Group it will determine how many items divided by how many distinct days??

is that what you are after?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @PowerBiNoob,

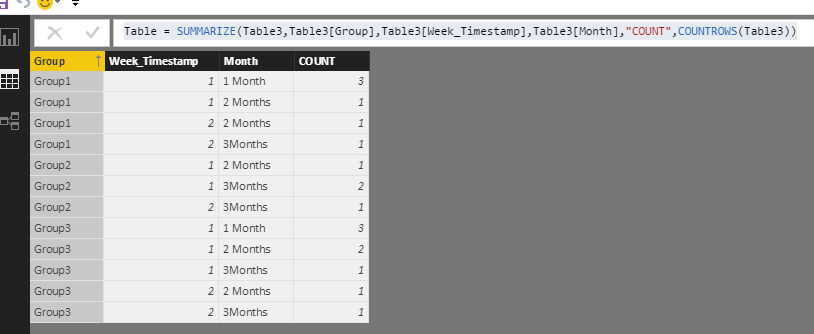

Besides the solution @dearwatson posted by using Power Query to group by. You can also create DAX to a new table which display your espected result.

Click "New Table" under modeling on home page, type the following formula.

Table = SUMMARIZE(Table3,Table3[Group],Table3[Week_Timestamp],Table3[Month],"COUNT",COUNTROWS(Table3))

Then right click group header->Sort Ascending, please see the following screenshot.

Best Regards,

Angelia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Noob 🙂

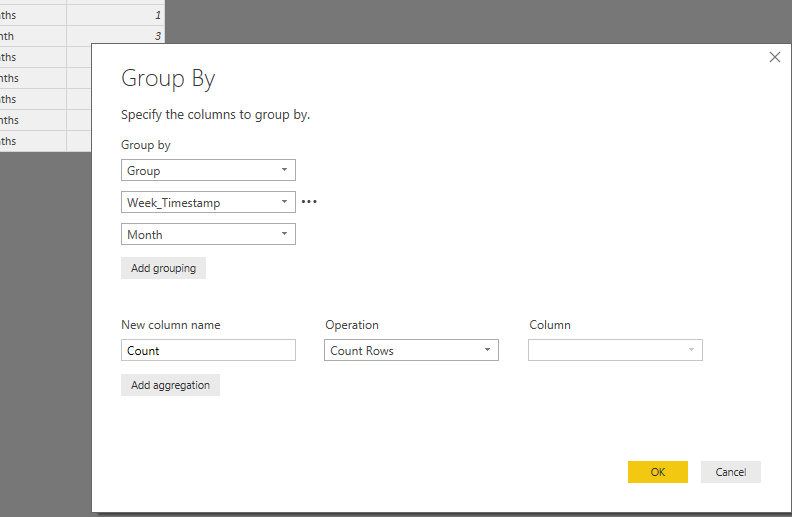

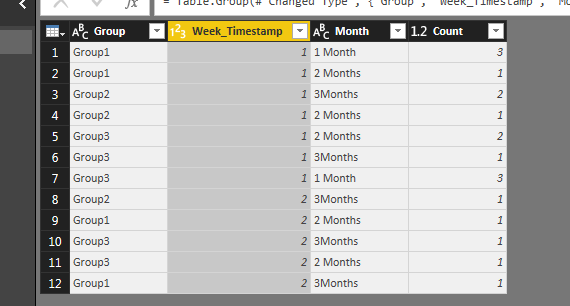

You can use Power Query "group By" to do this is a couple of clicks:

open the query editor for the data and and click "Group By" under Transform

Remember to add all the columns you want to see in the "Group by" section, and the default Count column will do what you want - i.e. Count the number of rows that are the same for the "group by" columns.

click OK and Viola!

I think this is right... let me know.

Cheers

Greg

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks this trick helped a lot; I made my grouped column then merged it back to my main table. Maybe not the best way but now I can make morelculations with it 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This definitely works and display the data like I want it to show , but if I can achieve the same via Measure or similar that would be great , as I'm using the current data in other queries , the solution you gave doesn't play well with my other graphs etc.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

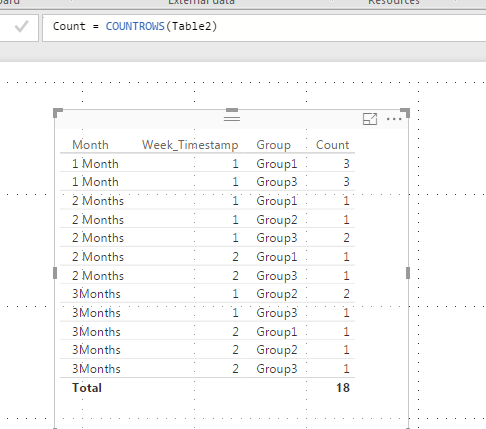

Hi Noob,

It's hard to give any other solution without more detail.. for instance you could acheve the same result in a measure that just counts the rows of the table:

Count = COUNTROWS(Table)

Then create a table with the columns in the values field:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yeah I understand what you saying, The end result we want to achieve for the stacked bar chart is displaying the Avg for the months( 1 Month,2 Months,3 Months) where the horizontal axis will be the "End of the week" date. The way the data is being captured is daily the files get loaded and timestamp added, the data is a combination of muliple groups each with a count of Over_Due files broken up into ( 1 Month,2 Months,3 Months).

So in the screenshot you will see , I added all the records (per day ,per group,) that is grouped by the Months ( 1 Month,2 Months,3 Months) and the the avg is worked out. Currently it is mostly being done in the backend on Tsql , but we want to rather load the whole table (Select * From ... ) into Power Bi and do the calculations and aggregations etc, in Power Bi and also to minimise the amount of datasources we have currently. Is this a good way of doing it?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a little rule that says if you have the option of doing it in TSQL before you get to Power BI then thats usually better since the model will be faster but assuming you cant do that:

so you've mentioned a COUNT a SUM and an AVERAGE but It looks like you just use Average - is that like a daily average? so in the first column you had on average 31.3 items overdue each day?

You could just build one measure the calculates "Daily Average"

Something like

Overdue Items = COUNTROW(Table)

Days = DISTINCTCOUNT(Table[Date]) -- note but be a date not a datetime

Daily Average = DIVIDE([Count of Overdue],[Days],0)

Then you put that measure in Values, Week_End in the Axis, Group in the Legend.

for each Week and Group it will determine how many items divided by how many distinct days??

is that what you are after?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks dearwatson, sorry for the delay in reply. Was Out of the office for a while.

Cheers

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 40 | |

| 35 | |

| 34 | |

| 31 | |

| 28 |

| User | Count |

|---|---|

| 136 | |

| 102 | |

| 68 | |

| 66 | |

| 58 |