FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Join the Fabric FabCon Global Hackathon—running virtually through Nov 3. Open to all skill levels. $10,000 in prizes! Register now.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Decompress and load multiple .gz files from mu...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Decompress and load multiple .gz files from multiple folders

I was able to decompress a single .gz file with a single csv in it by following Chris Webb's blog.

Ive also seen these blogs but was not able to successfully load and decompress files:

http://sql10.blogspot.sg/2016/06/reading-zip-files-in-powerquery-m.html

http://www.excelandpowerbi.com/?p=155

basically, my folder structure is like this

Parent_folder

- Folder_A

- Folder_A_1

- file.gz

- Folder_A_2

- file.gz

- Folder_A_1

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It would be useful to get a peek at one of the files that you are trying to unzip.

Meanwhile you can check that you use the right compression on the files. The MSDN article mentions two compression mechanisms. Have you tried both?

| Compression.GZip |

| Compression.Deflate |

Chris in his blog refers more to GZip, whilst Ken and Mark refer both to Deflate.

Another thing to mention. GZip will be compressing only single file, while ZIP archives would embed entire folder structures. What's your case?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

R Script is an another option to load .gz file in Power BI Desktop. Below is the code snippet.

install.packages -> data.table

library('data.table')

data <- fread(file="C:/Test/Employees.csv.gz")

data

See more details using this link

I know this is an old post but it can help someone with other options.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It would be useful to get a peek at one of the files that you are trying to unzip.

Meanwhile you can check that you use the right compression on the files. The MSDN article mentions two compression mechanisms. Have you tried both?

| Compression.GZip |

| Compression.Deflate |

Chris in his blog refers more to GZip, whilst Ken and Mark refer both to Deflate.

Another thing to mention. GZip will be compressing only single file, while ZIP archives would embed entire folder structures. What's your case?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much! I just followed followed the instruction in one of the comment from Chris' blog. Then invoked it.

(zip) =>

let

Source =

Binary.Decompress(

File.Contents(

zip),

Compression.GZip

),

#"Imported" =

Csv.Document(

Source,

[Delimiter=":", Columns=2, Encoding=1252])

in

#"Imported"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Jerald,

When invoking the function, did you select Content as your zip field? I'm doing that and running getting an error that reads"

An error occurred in the "" query. Expression.Error: We cannot convert a value of type Binary to type Text. Details: Value = Binary Type=Type

Did you run into this? Any suggestions on how to get around it?

Thank you,

Katie

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Same issue as Katie.

I have this function that works:

(zip) =>

let

Source =

Binary.Decompress(

File.Contents(

zip),

Compression.GZip

),

#"Imported" =

Csv.Document(

Source,

[Delimiter="|", Columns=30, Encoding=1252])

in

#"Imported"Tested it here to make sure. I get 30 columns of text extracted.

let

Source = UnpackGzip("C:\Users\Michael\CPU-1\disk\var\log\app.20180418002723.log.gz")

in

Source

Great.

But when I try to use on a batch of files like this, I get an error on the Table.Add Column saying it cant convert Binary to text. There are 120 .gz files in the folder.

let

path = "C:\Users\Michael\CPU-1\disk\var\log\",

Source = Folder.Files(path),

#"Filtered Rows to only .gz" = Table.SelectRows(Source, each ([Extension] = ".gz")),

#"Added Custom UnzipContents" = Table.AddColumn(#"Filtered Rows to only .gz", "Custom", each UnpackGzip([Content])),

#"Expanded Attributes" = Table.ExpandRecordColumn(#"Added Custom UnzipContents", "Attributes", {"Content Type", "Kind", "Size"}, {"Attributes.Content Type", "Attributes.Kind", "Attributes.Size"})

in

#"Expanded Attributes"

Michael

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Katie and Michael,

Did you ever get this approach to work, I too am attempting to use the provided function and getting the error in regards to not being able to convert binary into text.

Tom

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

(zip) =>

let

Source =

Binary.Decompress(

File.Contents(

zip),

Compression.GZip

),

#"Imported" =

Table.FromColumns({Lines.FromBinary(Source,null,null,1252)})

in

#"Imported"let

path = "C:\Users\Michael\CPU-1\disk\var\log\",

Source = Folder.Files(path),

#"Expanded Attributes1" = Table.ExpandRecordColumn(Source, "Attributes", {"Size"}, {"Attributes.Size"}),

#"Sorted Rows" = Table.Sort(#"Expanded Attributes1",{{"Attributes.Size", Order.Ascending}}),

#"Added Custom" = Table.AddColumn(#"Sorted Rows", "LogFile", each Text.Start([Name],3)),

FilteredGzRows = Table.SelectRows(#"Added Custom", each ([Extension] = ".gz")),

UnpackGzips = Table.AddColumn(FilteredGzRows, "Custom", each fnUnpackGzipLinesFromBinary([Folder Path]&[Name])),

in

UnpackGzips- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous thanks for the code. I realise where I had been going wrong, I had been trying to call the fnUnpackGzipLinesFromBinary on the Contents column, rather than the file directly itself. I used a slightly different function that will parse the CSV.

For anyone looking for this in the future, create a function "fnUnpackGzipLinesFromBinary":

(zip) =>

let

Source =

Binary.Decompress(

File.Contents(

zip),

Compression.GZip

),

#"Imported" =

Csv.Document(Source,[Delimiter=",", Columns=28, Encoding=1252, QuoteStyle=QuoteStyle.None]),

#"Promoted Headers" = Table.PromoteHeaders(#"Imported", [PromoteAllScalars=true])

in

#"Promoted Headers"

Then call the function from your load scripts:

let

Source = Folder.Files("C:\Users\user\files"),

#"Filtered Rows" = Table.SelectRows(Source, each ([Extension] = ".gz")),

UnpackGzips = Table.AddColumn(#"Filtered Rows", "Custom", each fnUnpackGzipLinesFromBinary([Folder Path]&[Name])),

#"Expanded Custom" = Table.ExpandTableColumn(UnpackGzips, ...insert column list here...)

in

#"Expanded Custom"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So I was able to make this work for me.

let

Source = Folder.Files("\\blahblah.com\Canned\DNIS_Number\MonthlyGZ"),

UnpackGzips = Table.AddColumn(Source, "Custom", each fnUnpackGzipLinesFromBinary([Folder Path]&[Name]))

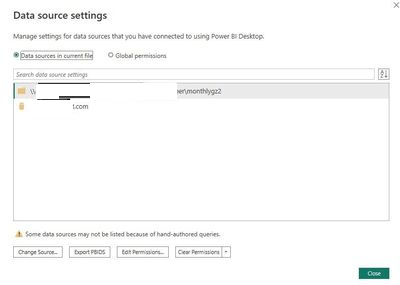

It works great, from my laptop. I refreshed 2 .gz files from the folder path I listed. Great. I published the pbix report to my workspace, went to refresh, error. Go to the settings and I see this.

I guess since I'm using the function fnUnpackGzipLinesFromBinary to get the folder name and file name it's dynamic and PBI cannot do that. I can refresh all day from PBI desktop but not when I publish it to a workspace.

Data refresh in Power BI - Power BI | Microsoft Learn

A dynamic data source is a data source in which some or all of the information required to connect can't be determined until Power Query runs its query, because the data is generated in code or returned from another data source. Examples include: the instance name and database of a SQL Server database; the path of a CSV file; or the URL of a web service.

If you or anyone out there has some great work around to this please let me know!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

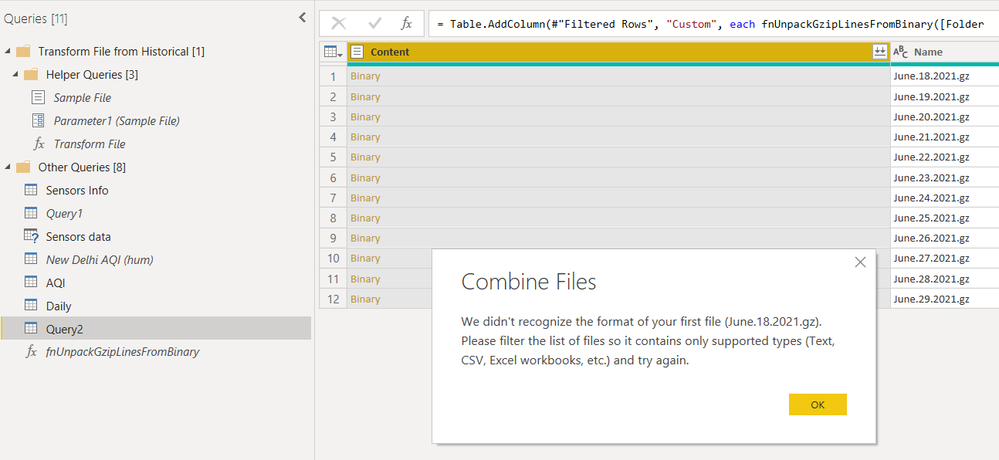

I have tried these codes and they did not work for me. This is what I ended up:

Any idea?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You need to go to the last column called Custom that was created from the UnpackGzips step to combine the files. Custom is where the function is called and it will unpack the gzip files.