DataFormat.Error: We reached the end of the buffer.

Hi,

So I am puzzled by this problem, and I am now at my wits' end.

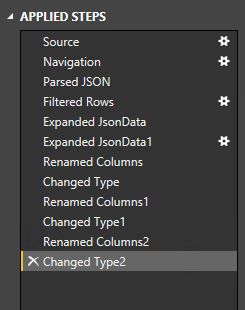

I have a PBI Desktop report with tables from an on-prem SQL Server and an Azure SQL database, loaded in Import mode. I tried to add two more tables from the same Azure database. Both tables have a column containing a JSON object. Both come from the same underlying table in Azure SQL, but each query selects a different subset of it in Power BI. I go through these steps with each table:

Everything works fine in the Power Query Editor, but as soon as I try to apply the changes, I get an error on one of the tables. The error reads: DataFormat.Error: We reached the end of the buffer.

What I have tried:

- Loading only one of the new tables at a time. Only one of them fails.

- Making sure I have the latest version of Power BI Desktop.

- Restarting PBI Desktop and the server I am remoting into.

- Checking resource usage on the Azure SQL DB. The queries are not consuming a lot of resources.

Any suggestions are welcome!


Hi,

So, apparently just waiting and trying again the next day solved the problem. A bit frustrating, since I spent a couple of hours troubleshooting.

Anyway, now it's working!


I had this issue when there was a strange Unicode character in one of the JSON files.

Removing the character resolved the issue.
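If you are not sure which file contains the offending character, a query along these lines can list the files that fail to parse, together with the error message. This is a minimal sketch, assuming the JSON files sit in one local folder loaded with `Folder.Files`; the folder path is a placeholder.

```powerquery
let
    // Placeholder path: point this at your own folder of JSON files
    Files = Folder.Files("C:\Data\JsonFiles"),
    JsonFiles = Table.SelectRows(Files, each Text.Lower([Extension]) = ".json"),
    // try returns [HasError = false, Value = ...] or [HasError = true, Error = [Reason, Message, Detail]]
    Checked = Table.AddColumn(JsonFiles, "Result", each try Json.Document([Content])),
    // Keep only the files that failed to parse
    Failed = Table.SelectRows(Checked, each [Result][HasError]),
    Report = Table.AddColumn(Failed, "ErrorMessage", each [Result][Error][Message]),
    Output = Table.SelectColumns(Report, {"Name", "ErrorMessage"})
in
    Output
```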


I was able to isolate this issue to the step that parses the JSON.

If Json.Document gets 0 bytes, it throws "DataFormat.Error: We reached the end of the buffer".

To test this:

```powerquery
let
    Source = Json.Document("") // throws DataFormat.Error: We reached the end of the buffer
in
    Source
```
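One way to avoid the error is to check for empty input before calling Json.Document. The sketch below uses a hypothetical helper named `SafeJson` that returns null for null, empty text, or empty binary input and parses everything else; the inline sample table is made-up data standing in for a table with a JSON text column.

```powerquery
let
    // Hypothetical helper: returns null instead of calling Json.Document on empty input
    SafeJson = (input as any) as any =>
        if input = null then null
        else if input is text and Text.Length(input) = 0 then null
        else if input is binary and Binary.Length(input) = 0 then null
        else Json.Document(input),
    // Hypothetical sample: row 2 is an empty string, row 3 is null
    Sample = #table({"Id", "Payload"}, {{1, "{""a"": 1}"}, {2, ""}, {3, null}}),
    // Apply the guard to every value in the Payload column
    Parsed = Table.TransformColumns(Sample, {{"Payload", SafeJson}})
in
    Parsed
```

Unlike removing errors afterwards, this only skips genuinely empty values, so malformed JSON still surfaces as an error.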


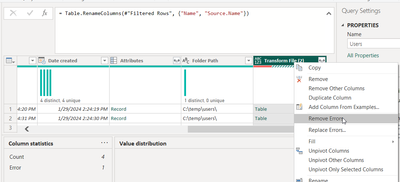

This is exactly what happened to me when I tried to import multiple JSON files and one of them was empty. It may be a bit of a quick fix, but I solved it by adding an extra step that removes errors before expanding the JSON into a table.
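The remove-errors step could look roughly like this in M. This is a sketch, assuming the files come from a local folder (placeholder path) and that each file holds a single JSON object with the hypothetical fields `id` and `value`; adjust the field list to your data.

```powerquery
let
    // Placeholder path: point this at your own folder of JSON files
    Files = Folder.Files("C:\Data\JsonFiles"),
    JsonFiles = Table.SelectRows(Files, each Text.Lower([Extension]) = ".json"),
    // Parse each file; an empty file turns its cell into an error instead of failing the whole query here
    Parsed = Table.AddColumn(JsonFiles, "Json", each Json.Document([Content])),
    // The extra step: drop rows whose Json cell is an error before expanding
    NoErrors = Table.RemoveRowsWithErrors(Parsed, {"Json"}),
    // Hypothetical field names; assumes each file contains one JSON object
    Expanded = Table.ExpandRecordColumn(NoErrors, "Json", {"id", "value"})
in
    Expanded
```

Note that `Table.RemoveRowsWithErrors` drops every file that fails to parse, not only the empty ones, so it is worth checking which files were removed.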


Hi @Anonymous,

Which version of Desktop are you using, 32-bit or 64-bit? I think this issue could be temporary. If you are on the 32-bit version, switching to the 64-bit Desktop might get around it. Some references are as follows.

Best Regards,

Dale

If this post helps, please consider accepting it as the solution to help other members find it more quickly.


I'm not 100% sure, but in my case it seems that an R script parsing empty (not null) text cells into a new column also produced this error.
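If empty strings are reaching a script or parser that copes with null but not with zero-length text, one option is to convert them to null first. A minimal sketch, assuming a text column with the hypothetical name `Payload`; the inline sample stands in for your real table.

```powerquery
let
    // Hypothetical sample: row 2 holds an empty string, row 3 a real null
    Source = #table({"Id", "Payload"}, {{1, "{""a"": 1}"}, {2, ""}, {3, null}}),
    // Replace values that are exactly "" with null, in the Payload column only
    EmptyToNull = Table.ReplaceValue(Source, "", null, Replacer.ReplaceValue, {"Payload"})
in
    EmptyToNull
```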