FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Calculating Orders Frequency

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calculating Orders Frequency

Hi,

i`m quite new to PowerBi and I need some help...

I have a database with orders, with info of CUSTOMER, ORDER.DATE, ORDERS.QTY., and ITEM.ID. What I`m trying to achieve here is: figure it out the frequency each customer buy, including what items and their quantities...

For example, I`d like to know that `Customer A` buy every two weeks, or 13 days (on average), and buy 3 units of product X and 2 units of products Y (on average as well).

Can anyone help me out?? Thanks !!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @chernni,

For the two questions, they all based on how to make groups.

1. Frequency of order. Add 'Orders Fre'[Customer] = EARLIER ( 'Orders Fre'[Customer] ) inside the Filter to make the formula be based on Customer group. Then it will get the next date within the same customer group not the next physical row.

Frequency of order =

DATEDIFF (

CALCULATE (

MAX ( 'Orders Fre'[Date] ),

FILTER (

'Orders Fre',

'Orders Fre'[Date] < EARLIER ( 'Orders Fre'[Date] )

&& 'Orders Fre'[Customer] = EARLIER ( 'Orders Fre'[Customer] )

)

),

'Orders Fre'[Date],

DAY

)

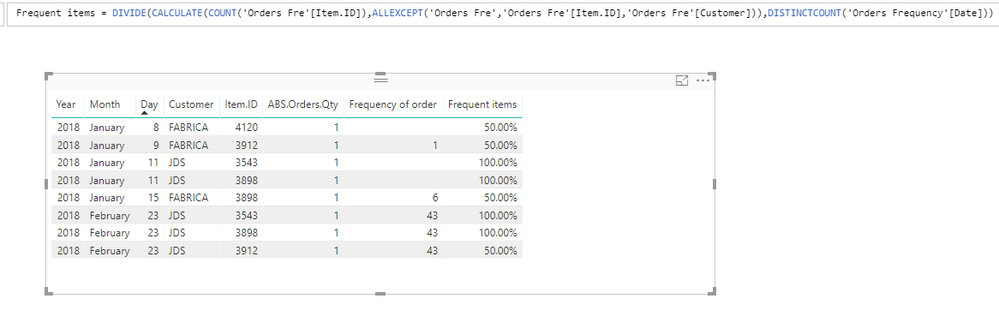

2. Frequent items. Same issue. In my prior expression I'm using ALLEXCEPT ( 'Orders Fr', 'Orders Fr'[Item.ID] ) to make the formula be based on only Item.ID group. That's why all the same Item.ID got the same percentage. So to resolve your issue, add one more condition in ALLEXCEPT().

Frequent items =

DIVIDE (

CALCULATE (

COUNT ( 'Orders Fre'[Item.ID] ),

ALLEXCEPT ( 'Orders Fre', 'Orders Fre'[Item.ID], 'Orders Fre'[Customer] )

),

DISTINCTCOUNT ( 'Orders Frequency'[Date] )

)

Little tips: the most important point in your requirement is to make groups for your data. And generally in DAX, we can use EARLIER() or ALLEXCEPT() function to ahieve this. EARLIER() is used in calculated column and ALLEXCEPT() can be use in both measure and calculated column.

I think I have shown you the right direction. Please make more effort and try to tune the formula on yourself. ![]()

Thanks,

Xi Jin.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

sorry I can not apply this, can you check what it might be wrong from the formula? i whant to calculate the frequency in month.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Share a sample dataset and for that sample show the expected result.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

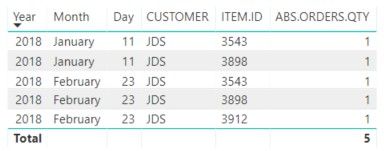

Hi @Ashish_Mathur, thanks for your response. Check below the request:

In the image below we have a simple example with a single customer "JDS", with two orders.

Order 1: Jan 11st, including two products (ITEM.ID), one unit each.

Order 2: Feb 23rd, including three products (ITEM.ID), one unit each.

As a result I'd like something like:

Frequency of order: (Feb 23rd) - (Jan 11st) = 43 days.

Frequent items: 3543 (100% of orders), 3898 (100% of orders) and 3912 (50%) of orders.

Something like that.

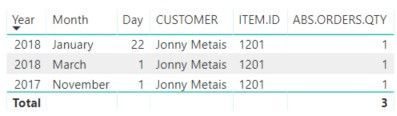

Another example, with three orders:

As a result here, I'd have as a result:

Frequency of order: (38+82)/2= 60 days (details on image below)

Frequent items: 1201 (100% of orders).

Hope i made myself clear... If not, please let me know! thanks for the support!!!

Btw, if needed I can give more complex examples, with more orders, or multiple items, but my goal is to identify the average frequency of order and average items requested by a given customer.

Thanks!!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @chernni,

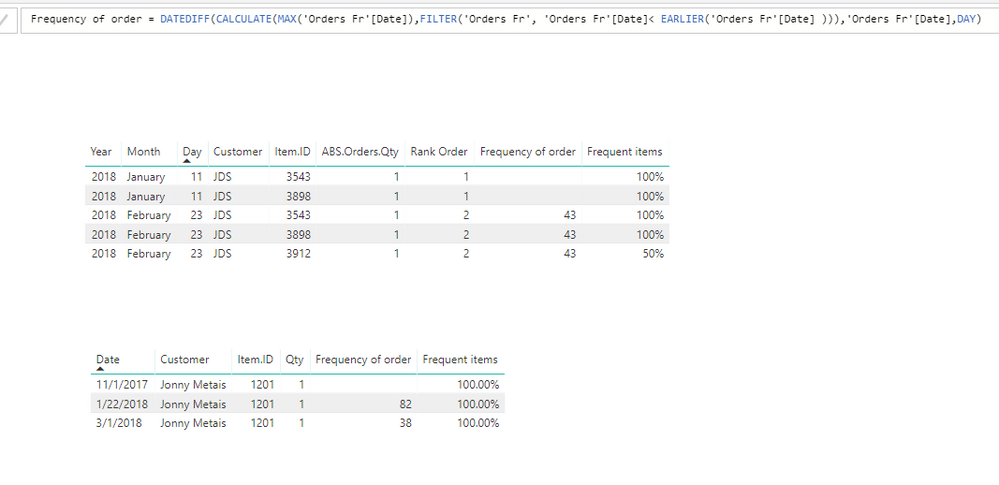

To achieve your requirement, you can refer to following method:

We can create calculated column with Earlier() function to get the prior row value as in your scenario, to get the prior date value. So the Frequency of order expression can be:

Frequency of order =

DATEDIFF (

CALCULATE (

MAX ( 'Orders Fr'[Date] ),

FILTER ( 'Orders Fr', 'Orders Fr'[Date] < EARLIER ( 'Orders Fr'[Date] ) )

),

'Orders Fr'[Date],

DAY

)Then the expression of Frequent items can be:

Frequent items =

CALCULATE (

COUNT ( 'Orders Fr'[Item.ID] ),

ALLEXCEPT ( 'Orders Fr', 'Orders Fr'[Item.ID] )

)

/ DISTINCTCOUNT ( 'Orders Fr'[Date] )The result shows like:

Thanks,

Xi Jin.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is Not working , How you make 👍 ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@v-xjiin-msft Thanks for the solution... but i`m struggling to understanding it.

I cannot use the function EARLIER properly, can you please share the power bi report that you`ve took the screen shot below? it might be easier for me to understand... tks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @chernni,

Sure, check Page2 in my shared report.

https://1drv.ms/u/s!AlqSnZZUVHmsg3ZnKpzvcwZ5HeSH

Thanks,

Xi Jin.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@v-xjiin-msft thank you so much for you patience! but unfortunately, I cannot see the desired solution...

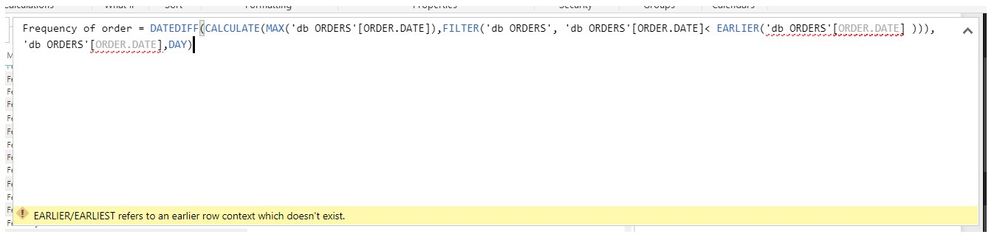

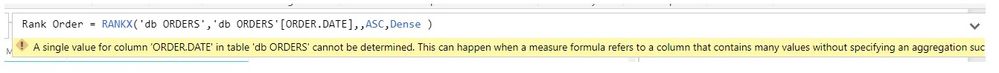

I can`t apply the `RANK ORDER` nor `FREQUENCY OF ORDER` measures... as images below...

For the `FREQUENT ITEMS` formula, i used the following:

Frequent items = CALCULATE(COUNT('db ORDERS'[ITEM.ID]),ALLEXCEPT('db ORDERS','db ORDERS'[ITEM.ID]))/DISTINCTCOUNT('db ORDERS'[ORDER.DATE])

but the result is a bunch of `infinity` for each row of my table... as the image below...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @chernni,

=> I can`t apply the `RANK ORDER` nor `FREQUENCY OF ORDER` measures... as images below...

You are using measure. Right? As I said before, I'm using calculated column not measure. Generally, earlier() function is used in calculated column. Same to Rankx. So to resolve your issue, you just need to change the measure to calculated column.

Then for your Frequent items returns infinity. It seems like there exists 0 value in DISTINCTCOUNT('db ORDERS'[ORDER.DATE]). Did you apply any filter on your table?

To troubleshoot your issue, you can separate the formula to two parts. See if there exists 0 values. And still use calculated column instead of measure. Also you can try Divide() function.

Thanks,

Xi Jin.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@v-xjiin-msft awesome! it worked! But it might not be exactly what i need... What`s showing me in the image below is the following: `Frequency of order` shows me the difference, in days, from the last order indepently of the customer which can be a valuable information as well. But what i`m actually looking for is, the difference, in days, between orders from the same customer.

Let`s take as an example customer `FABRICA`, the line from Jan 15th. What i`d like to see in the `Frequency of order` would be jan 15 - Jan 9 = 6 days (instead of Jan 15 - Jan 11 = 4 days). Is it possible to do something like that?

Also, it appears the percentages in the `Frequent items` columns are global... related to the whole database... take as an example ITEM.ID = 3898, in every line is 87.32%, as per my understanding it means in 87.32% of the orders this item goes into. Which is a nice info, but i`m also looking for the info by customer... For customer A it can be 50%, customer B 100%, C 90% and from that on...

Can you please help ou with that last detail? Thank you so much !!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @chernni,

For the two questions, they all based on how to make groups.

1. Frequency of order. Add 'Orders Fre'[Customer] = EARLIER ( 'Orders Fre'[Customer] ) inside the Filter to make the formula be based on Customer group. Then it will get the next date within the same customer group not the next physical row.

Frequency of order =

DATEDIFF (

CALCULATE (

MAX ( 'Orders Fre'[Date] ),

FILTER (

'Orders Fre',

'Orders Fre'[Date] < EARLIER ( 'Orders Fre'[Date] )

&& 'Orders Fre'[Customer] = EARLIER ( 'Orders Fre'[Customer] )

)

),

'Orders Fre'[Date],

DAY

)

2. Frequent items. Same issue. In my prior expression I'm using ALLEXCEPT ( 'Orders Fr', 'Orders Fr'[Item.ID] ) to make the formula be based on only Item.ID group. That's why all the same Item.ID got the same percentage. So to resolve your issue, add one more condition in ALLEXCEPT().

Frequent items =

DIVIDE (

CALCULATE (

COUNT ( 'Orders Fre'[Item.ID] ),

ALLEXCEPT ( 'Orders Fre', 'Orders Fre'[Item.ID], 'Orders Fre'[Customer] )

),

DISTINCTCOUNT ( 'Orders Frequency'[Date] )

)

Little tips: the most important point in your requirement is to make groups for your data. And generally in DAX, we can use EARLIER() or ALLEXCEPT() function to ahieve this. EARLIER() is used in calculated column and ALLEXCEPT() can be use in both measure and calculated column.

I think I have shown you the right direction. Please make more effort and try to tune the formula on yourself. ![]()

Thanks,

Xi Jin.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Share the link from where i can download your base data

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Share your raw data - not your PBI report.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Ashish_Mathur please check if you can access here: https://drive.google.com/open?id=1mRNO21ew3QZoDDfol2HVWWjyb-vQ_n_m

Thanks.

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 39 | |

| 37 | |

| 33 | |

| 32 | |

| 29 |

| User | Count |

|---|---|

| 133 | |

| 88 | |

| 85 | |

| 68 | |

| 64 |