FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Calculate Days between OrderDate per Customer ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calculate Days between OrderDate per Customer where Order date is in the same Column???

I'll start with Please Help!!

After Hours of trialing theories i need some help,

I have a data set which is purely sales data. containing Customer ID, CustomerName, OrderDate, Items Ordered etc...

What i am trying to do is calculate the difference in days per order per customer,

Then have a further measure which shows the Average days per order, per customer once i have the days between previous order.

I have created a Today Column and a Days Since Ordering Column to give me a calculation of Days Since Last Order.

What i want to do for the end result is,

Have Days Since Last Order - "Average Days Per Order" (Which i cant create) to give me a Numeric Value of How long on average it will be before the customer orders again...

The end result is so we can proactively sell to customers, so when the difference between Average days per order and days since last order is at 1 we can call the customer and say hey,

Any help will be appreciated beyond belief,

Thanks,

Josh

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry Matt,

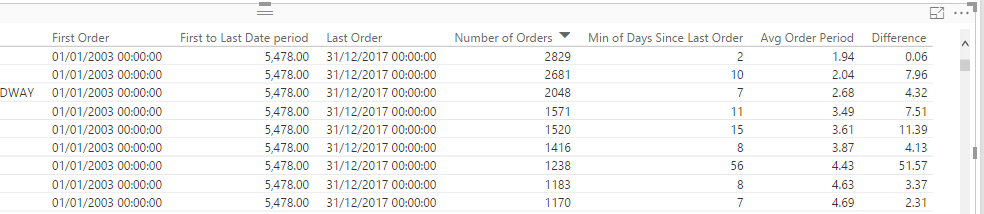

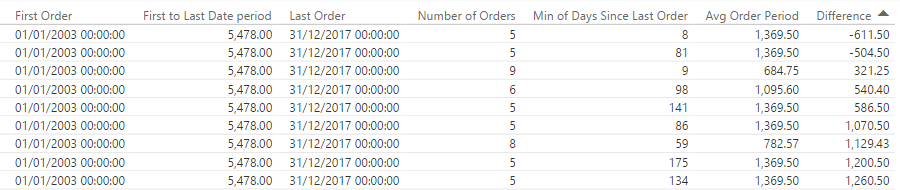

Got it working not sure if it was me having the .Date on the date column and i selected CustomerID for the Values bit, but got rid of the .Date and ID so its like the columns below and done manual calculations to verify the data its outputing and its working now

So this is what i ended up with;

First Order = CALCULATE(FIRSTDATE(TableName[Date]),VALUES(Tablename[Date]))

Last Order = CALCULATE(LASTDATE(TableName[Date]),VALUES(Tablename[Date]))

Number of Orders = DISTINCTCOUNT(TableName[OrderID])

First to Last Date period = ((TableName[Last Order] - TableName[First Order])*1)

Today = Today()

Days Since Last Order = TableName[Today].[Date] - TableName[Order Date].[Date] * 1

Avg Order Period = divide(TableName[First to Last Date period],TableName[Number of Orders])

&

Difference = CALCULATE(MIN([Days Since Last Order]) - [Avg Order Period])

Then when Difference gets to 0 its time to call,

Seeing it like the above makes it so clear, Thanks Matt, Great Work !!!

Thanks,

J

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I realise this seems easy, but it is not so easy - hope that makes you feel any better :-). Power Pivot is not really built to handle relative comparison between rows in a table. You can do it, but it is not super easy. But as I think about your problem in a different way, I think there may be an easier way to solve the problem that might meet your needs.

Break the problem into pieces

Using Adventure Works as an example, you could write measures like this. If you put customer name on a visual (say table) with these measures, I think it will give you what you need.

Last Order=CALCULATE(LASTDATE(Sales[OrderDate]),VALUES(Customers[CustomerKey]))

First Order=CALCULATE(FIRSTDATE(Sales[OrderDate]),VALUES(Customers[CustomerKey]))

Total Orders=DISTINCTCOUNT(Sales[SalesOrderNumber])

First to Last Date period=[Last Order] - [First Order]

Edit (sorry, previous formula was wrong): Avg Order Period=divide([First to Last Date period],[Total Orders] -1 )

* Matt is an 8 times Microsoft MVP (Power BI) and author of the Power BI Book Supercharge Power BI.

I will not give you bad advice, even if you unknowingly ask for it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Matt,

I realize I am following up after 5 years... but any chance you have a demo file you can upload? Trying to solve a simillar problem and getting no results.

thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Matt,

Sorry I'm lat to the party, but I noticed tht you mentioned:

Power Pivot is not really built to handle relative comparison between rows in a table.

However such requirement is pretty much everything I'm doing with a data dump (e.g. find difference between two rows based on same value of some column). Does it mean that I should re-format the raw dump to better suit Power Pivot?

Thanks in advance!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Possibly, it is hard to say without seeing the details. Generally the order of rows in a DB are not important. If you need to compare change in status between rows (for example), then you can do it in DAX and or restructure the data. Both approaches have their strengths and weaknesses. It depends.

* Matt is an 8 times Microsoft MVP (Power BI) and author of the Power BI Book Supercharge Power BI.

I will not give you bad advice, even if you unknowingly ask for it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Matt, I found it particularly to do anything for row operations without a convoluted formula and I'm wondering if I'm choosing the wrong tool.

For example:

I have the following table:

Transaction ID | Event Type | IP Address | Datetime | Email Address |

I have a bunch of these transactions, and an [Event Type] could be a Purchase (transfer real money for virtual coins), or a Virtual Purchase (transfer virtual coins for web contents). Now for each Virtual Purchase I'm missing the email address, so I would like to set up a Calculated Column, say named [Possible Email Address] for each row that has [Event Type] = "Virtual Purchase", with the following rule:

Search all previous rows, find a row that has a matching IP Address, and use the [Email Address] of that row to populate [Possible Email Address] of this row.

Example:

Transaction ID | Event Type | IP Address | Datetime | Email Address |

100001 | Purchase | 1.1.1.1 | 2018-01-11 | abc@hotmail.com

100002 | Purchase | 1.4.5.6 | 2018-01-11 | cdfg@gmail.com

...(after many rows)

100356 | Virtual Purchase | 1.1.1.1 | 2018-01-13 | UNKNOWN

So after adding the calculated column Transaction ID 100356 should become this:

100356 | Virtual Purchase | 1.1.1.1 | 2018-01-13 | abc@hotmail.com

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You beauty Matt! I've just spent two hours trying to crack that - so happy I found your post!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dont get me wrong though for our longest standing customers its working well,

Just how to make it see "Their" first order and then drop the -1 on the Average?

Thanks though this is excellent if it will work!

Was almost going to pull it into excel manually do the averages and then have a relationship between two data tables to show it in one graph visual.

Thanks,

Josh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You have lots of replies and thoughts here and I am not really sure the current status of open issues. Can you do a single reply explaining what open issues you have?

* Matt is an 8 times Microsoft MVP (Power BI) and author of the Power BI Book Supercharge Power BI.

I will not give you bad advice, even if you unknowingly ask for it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry Matt,

Got it working not sure if it was me having the .Date on the date column and i selected CustomerID for the Values bit, but got rid of the .Date and ID so its like the columns below and done manual calculations to verify the data its outputing and its working now

So this is what i ended up with;

First Order = CALCULATE(FIRSTDATE(TableName[Date]),VALUES(Tablename[Date]))

Last Order = CALCULATE(LASTDATE(TableName[Date]),VALUES(Tablename[Date]))

Number of Orders = DISTINCTCOUNT(TableName[OrderID])

First to Last Date period = ((TableName[Last Order] - TableName[First Order])*1)

Today = Today()

Days Since Last Order = TableName[Today].[Date] - TableName[Order Date].[Date] * 1

Avg Order Period = divide(TableName[First to Last Date period],TableName[Number of Orders])

&

Difference = CALCULATE(MIN([Days Since Last Order]) - [Avg Order Period])

Then when Difference gets to 0 its time to call,

Seeing it like the above makes it so clear, Thanks Matt, Great Work !!!

Thanks,

J

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Josh,

I realize I am following up after 5 years... but any chance you have a demo file you can upload? Trying to solve a simillar problem and getting no results.

thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also the First Date Measure First Order=CALCULATE(FIRSTDATE(Sales[OrderDate]),VALUES(Customers[CustomerKey]))

That is showing the same date for a lot of customers,

But i know these customer wont have ordered all on the 01/01/2013... Yet the number of orders is most likely correct,

Is there a way to have the first order be for a customer based on there unique ID and not just the first date in the dataset?

Thanks again,

Josh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the measures seem to be working,

Just need to include a daterange in the measures cause i have 13 years worth of data, but realistically a 4 year calculations should be enough to keep the information relevant and accurate.

Is it possible to include a daterange / date period wrap in the measure so they only look at the last 4 years?

Thanks for the help though, looking better already!!!

Thanks,

Josh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, ill give this a try tonight / tomorrow evening and let you know how i get on.

Hopefully fix it! Been trying different ways for a few weeks now without sucsess

Will keep you posted ASAP,

Thanks,

Josh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I also saw your responce as a mention but ill also try that and let everyone know the calculation and if it works or not,

Thanks for the help guys,

Cheers,

Josh

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!