FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Add a list ranked by category using M Query.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Add a list ranked by category using M Query.

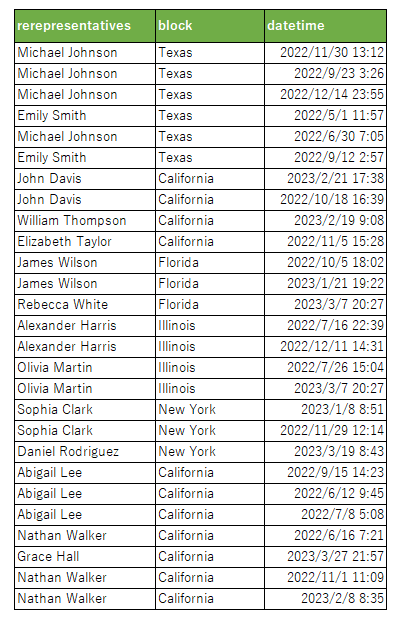

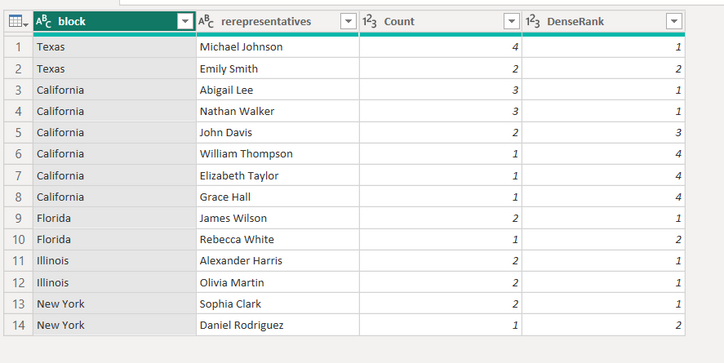

In the data, there are representatives for each block, and the same person appears multiple times depending on the date and time. I'd like to create a column that groups by block and representative, counting the number of representatives for each block and ranking them.

I will provide the source that was entered in "Data Input," so please feel free to use it if you like.

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZRba9xADIX/itjnwHg0Hl/mreTSC00KycJSQh60u5NYZGwHr5s2+fXV2NlQUoMNBhv8jXR0dOzb29Ul7yryAb61VXNom9XJau3/0EHumCAqrZVJQBuncXV3MouXCg0Yh9kSWMuVAhpn7YCf1xxe4KbmvvqIWqVBa2fzJXWzKDl3yWzVUiQAHqvGanBGzxzfn1Lg+7ZrmEbYKFQoGnJninlaBkuULkBnzpQDvuEQmGpYV239NGqeaqFLKF0ydjgP/Epb31ewppfQdtN9tLKgrcM3VVT7A0izscWFHOP9v6IELlyCs7BRepi3dDjC137rdzuCTcW9/582KgdMHI5Wfgpic7P3HXyhrhss+irzNy2/e58rnQHi0Z8FJ2JcRFHqjB6O/Aj8zASX1PXcTHbALFqTpAvwjxPctE+V0KeBukchrvxv+NkOj2/mFFA4q+fZYUVYgkanRyFn1LCE97rdd/zwy79OlDcxCIVLzejNlh+IA3z3fjIDEmMbfcFleBZTX7rULqJzmdQeI3lFfUUNbCg8+uk8ZnGtucPRmc8d7bxsNITJvEvixfP3z3q+ulg5/AeSctGB+EXFPRkZ9e4v", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [rerepresentatives = _t, block = _t, datetime = _t])

This is a display of the final data that has been processed in Excel.

If anyone knows of any good ideas or methods to achieve a final result like this, could you please share them with me?

Best regards,

roxy

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @roxy12 ,

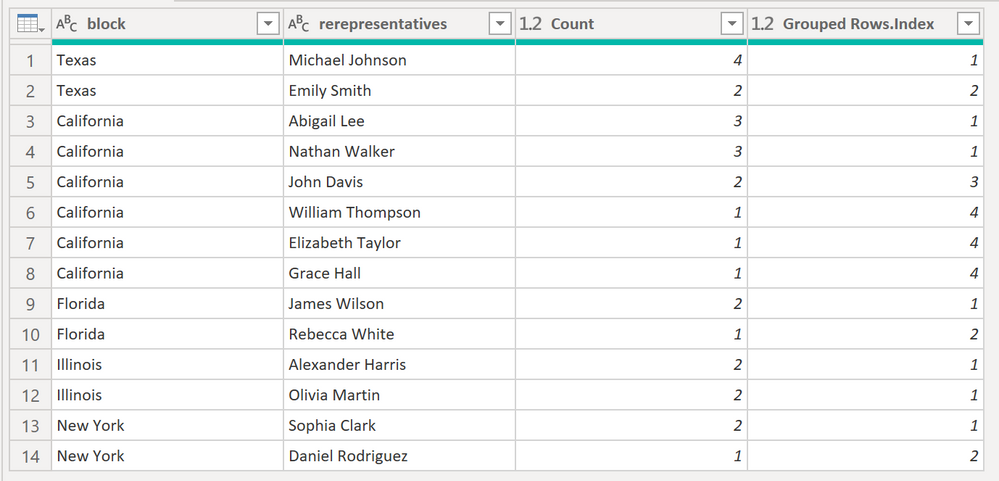

How about this:

Here the code in M:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZRba9xADIX/itjnwHg0Hl/mreTSC00KycJSQh60u5NYZGwHr5s2+fXV2NlQUoMNBhv8jXR0dOzb29Ul7yryAb61VXNom9XJau3/0EHumCAqrZVJQBuncXV3MouXCg0Yh9kSWMuVAhpn7YCf1xxe4KbmvvqIWqVBa2fzJXWzKDl3yWzVUiQAHqvGanBGzxzfn1Lg+7ZrmEbYKFQoGnJninlaBkuULkBnzpQDvuEQmGpYV239NGqeaqFLKF0ydjgP/Epb31ewppfQdtN9tLKgrcM3VVT7A0izscWFHOP9v6IELlyCs7BRepi3dDjC137rdzuCTcW9/582KgdMHI5Wfgpic7P3HXyhrhss+irzNy2/e58rnQHi0Z8FJ2JcRFHqjB6O/Aj8zASX1PXcTHbALFqTpAvwjxPctE+V0KeBukchrvxv+NkOj2/mFFA4q+fZYUVYgkanRyFn1LCE97rdd/zwy79OlDcxCIVLzejNlh+IA3z3fjIDEmMbfcFleBZTX7rULqJzmdQeI3lFfUUNbCg8+uk8ZnGtucPRmc8d7bxsNITJvEvixfP3z3q+ulg5/AeSctGB+EXFPRkZ9e4v", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [rerepresentatives = _t, block = _t, datetime = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"rerepresentatives", type text}, {"block", type text}, {"datetime", type datetime}}),

#"Grouped Rows 1" = Table.Group(#"Changed Type", {"rerepresentatives", "block"}, {{"Count", each Table.RowCount(_), Int64.Type}}),

#"Grouped Rows 2" = Table.Group(#"Grouped Rows 1", {"block"}, {{"AllRows", each SubGroupAddIndex(_), type table [block=nullable text, rerepresentatives=text, Count=number, Index=number]}}),

SubGroupAddIndex = (Table as table) as table =>

let

#"Sorted Rows" = Table.Sort(Table,{{"Count", Order.Descending}}),

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "Index", 1, 1, Int64.Type)

in

#"Added Index",

#"Expanded AllRows" = Table.ExpandTableColumn(#"Grouped Rows 2", "AllRows", {"rerepresentatives", "Count", "Index"}, {"rerepresentatives", "Count", "IndexDense"}),

#"Grouped Rows 3" = Table.Group(#"Expanded AllRows", {"block", "Count"}, {{"Index", each List.Min([IndexDense]), type nullable number}}),

#"Merged Queries" = Table.NestedJoin(#"Expanded AllRows", {"block", "Count"}, #"Grouped Rows 3", {"block", "Count"}, "Grouped Rows", JoinKind.LeftOuter),

#"Expanded Grouped Rows" = Table.ExpandTableColumn(#"Merged Queries", "Grouped Rows", {"Index"}, {"Grouped Rows.Index"}),

#"Removed Columns" = Table.RemoveColumns(#"Expanded Grouped Rows",{"IndexDense"})

in

#"Removed Columns"

Let me know if this helps 🙂

/Tom

https://www.tackytech.blog/

https://www.instagram.com/tackytechtom/

| Did I answer your question❓➡️ Please, mark my post as a solution ✔️ |

| Also happily accepting Kudos 🙂 |

| Feel free to connect with me on LinkedIn! | |

| #proudtobeasuperuser |  |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

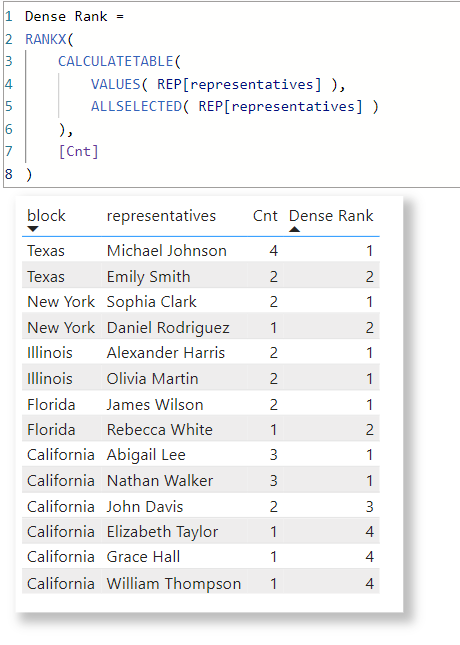

Genearlly speaking, PQ was designed for data cleansing instead of calculation. DAX solution is way more elegant.

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @roxy12 ,

How about this:

Here the code in M:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jZRba9xADIX/itjnwHg0Hl/mreTSC00KycJSQh60u5NYZGwHr5s2+fXV2NlQUoMNBhv8jXR0dOzb29Ul7yryAb61VXNom9XJau3/0EHumCAqrZVJQBuncXV3MouXCg0Yh9kSWMuVAhpn7YCf1xxe4KbmvvqIWqVBa2fzJXWzKDl3yWzVUiQAHqvGanBGzxzfn1Lg+7ZrmEbYKFQoGnJninlaBkuULkBnzpQDvuEQmGpYV239NGqeaqFLKF0ydjgP/Epb31ewppfQdtN9tLKgrcM3VVT7A0izscWFHOP9v6IELlyCs7BRepi3dDjC137rdzuCTcW9/582KgdMHI5Wfgpic7P3HXyhrhss+irzNy2/e58rnQHi0Z8FJ2JcRFHqjB6O/Aj8zASX1PXcTHbALFqTpAvwjxPctE+V0KeBukchrvxv+NkOj2/mFFA4q+fZYUVYgkanRyFn1LCE97rdd/zwy79OlDcxCIVLzejNlh+IA3z3fjIDEmMbfcFleBZTX7rULqJzmdQeI3lFfUUNbCg8+uk8ZnGtucPRmc8d7bxsNITJvEvixfP3z3q+ulg5/AeSctGB+EXFPRkZ9e4v", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [rerepresentatives = _t, block = _t, datetime = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"rerepresentatives", type text}, {"block", type text}, {"datetime", type datetime}}),

#"Grouped Rows 1" = Table.Group(#"Changed Type", {"rerepresentatives", "block"}, {{"Count", each Table.RowCount(_), Int64.Type}}),

#"Grouped Rows 2" = Table.Group(#"Grouped Rows 1", {"block"}, {{"AllRows", each SubGroupAddIndex(_), type table [block=nullable text, rerepresentatives=text, Count=number, Index=number]}}),

SubGroupAddIndex = (Table as table) as table =>

let

#"Sorted Rows" = Table.Sort(Table,{{"Count", Order.Descending}}),

#"Added Index" = Table.AddIndexColumn(#"Sorted Rows", "Index", 1, 1, Int64.Type)

in

#"Added Index",

#"Expanded AllRows" = Table.ExpandTableColumn(#"Grouped Rows 2", "AllRows", {"rerepresentatives", "Count", "Index"}, {"rerepresentatives", "Count", "IndexDense"}),

#"Grouped Rows 3" = Table.Group(#"Expanded AllRows", {"block", "Count"}, {{"Index", each List.Min([IndexDense]), type nullable number}}),

#"Merged Queries" = Table.NestedJoin(#"Expanded AllRows", {"block", "Count"}, #"Grouped Rows 3", {"block", "Count"}, "Grouped Rows", JoinKind.LeftOuter),

#"Expanded Grouped Rows" = Table.ExpandTableColumn(#"Merged Queries", "Grouped Rows", {"Index"}, {"Grouped Rows.Index"}),

#"Removed Columns" = Table.RemoveColumns(#"Expanded Grouped Rows",{"IndexDense"})

in

#"Removed Columns"

Let me know if this helps 🙂

/Tom

https://www.tackytech.blog/

https://www.instagram.com/tackytechtom/

| Did I answer your question❓➡️ Please, mark my post as a solution ✔️ |

| Also happily accepting Kudos 🙂 |

| Feel free to connect with me on LinkedIn! | |

| #proudtobeasuperuser |  |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 38 | |

| 36 | |

| 33 | |

| 33 | |

| 29 |

| User | Count |

|---|---|

| 134 | |

| 96 | |

| 78 | |

| 67 | |

| 65 |