Dataflow Gen2 - Online Power Query Experience Works / Loading to Lakehouse Fails

Hi everyone...

I will try to be as specific as possible. I followed the tutorial provided by Microsoft, creating a Fabric Workspace, creating a new Lakehouse, and then creating various Dataflows Gen2 to load to the newly created Lakehouse. Instead of using Microsoft's example data (i.e., Contoso), I copied existing queries that have been running in dataflows in my Power Apps online, which I access from the Power BI service (PPU Licensee). Pretty simple, the queries work in Power Apps, load tables there and those are used in Power BI. Should be a no brainer. Apparently not.

Every query fails when attempting to load to the staging Lakehouse. In the Lineage view, I can see this error:

The import NavigationTable.CreateTableOnDemand matches no exports. Did you miss a module reference?

Looking at the tables created I can see this:

The tables all populate data, just as they do in Power Apps dataflows. When I save and close, it tries to update and then fails.

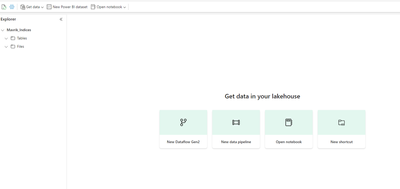

This is what I see in the Lakehouse I created:

No data loaded.

Has anyone had this issue and found a fix? I have been working with Support for 2 weeks and they haven't been able to resolve this. They have said there is a known bug, with no ETA provided to me. So far, my trial period has gone 14 days and I haven't even been able to evaluate Fabric because nothing is loaded to the Lakehouse.

My only idea so far is to delete everything, including the new Fabric Workspace, and start all over.

Any help is appreciated.

Thanks for responding. I received a text from support indicating there was an updated Gateway available to download that would correct the bug. I downloaded the new Gateway (ver 3000.178.9) and then refreshed my Dataflows Gen2 that were not working. They published to the Lakehouse! So that seemed to solve the problem.

Except, they did not bring the date columns in my Dataflows Gen2 into the tables in the Lakehouse. So, after some trial and error, I discovered that I needed to delete the published tables in the Lakehouse, then re-publish them.

So if anyone else has had my issue, the newest release of the Gateway resolves the issue. But, you will need to delete existing tables in your Lakehouse and re-publish the Dataflows as a new table in the Lakehouse.

Hope that helps.


When the schema changes, you have to edit the settings for your Output Destination (which allows you to edit the mappings). You can also delete the table and start afresh as you suggested.

There isn't a mode that drops the table to automatically create a new schema. Even if such a mode is added, it could be disruptive to downstream artifacts. However, we are considering such a mode for convenience.

Thanks


I forgot to mention: the date columns originally failed to load because they were typed as Date. I had to change them to Date/Time. Only date columns typed as Date/Time load correctly to the Lakehouse.
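For anyone applying the same workaround, here is a minimal Power Query M sketch of the type change. The sample table and the column names `OrderID` and `OrderDate` are hypothetical stand-ins, not taken from the queries in this thread; in a real dataflow, `Source` would be your existing query step.

```
let
    // Hypothetical sample table standing in for the real query;
    // OrderDate starts out typed as Date
    Source = #table(
        type table [OrderID = Int64.Type, OrderDate = date],
        {
            {1, #date(2023, 6, 1)},
            {2, #date(2023, 6, 15)}
        }
    ),
    // Retype the Date column as Date/Time before loading to the Lakehouse
    Retyped = Table.TransformColumnTypes(Source, {{"OrderDate", type datetime}})
in
    Retyped
```

Because this changes the column type, the destination table's schema no longer matches. As noted earlier in the thread, you would then either edit the Output Destination mappings or delete the table in the Lakehouse and re-publish.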


Thanks for the ask.

I think you are working with the right team. As this needs more digging, they should be able to help. If you share the SR#, we will keep an eye on the ticket as well.

Thanks

Himanshu
