Notebook in Pipeline: Please attach a lakehouse to proceed

Hello,

When trying to run a notebook as part of a pipeline I am frequently getting the following error message:

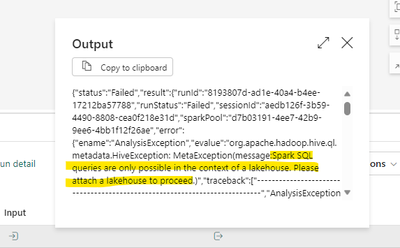

Spark SQL queries are only possible in the context of a lakehouse. Please attach a lakehouse to proceed.

I do have a lakehouse attached to the notebooks. I can run the individual notebooks successfully, and occasionally they run in the pipeline, but 9 times out of 10 the pipeline run fails with this error message. Any ideas would be greatly appreciated!

This looks like a product bug. If you don't receive the response you need here, the best way to proceed is to log a support ticket.

Hi @WomanToBlame ,

Thanks for using Fabric Community and reporting this.

Apologies for the issue you have been facing. Are you still facing this issue?

It's difficult to tell what could be causing this behavior. Please wait for some time and try again.

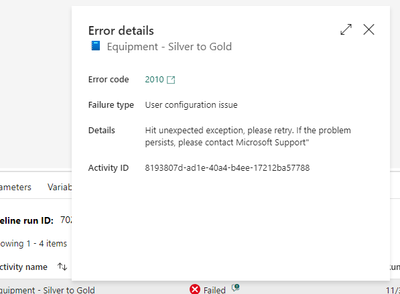

If the issue persists, can you please share a few more details about the failure, such as screenshots, the error message, the session ID, and repro steps?

I would be able to guide you better once you provide these details.

Hello @v-gchenna-msft,

Thanks for your response. The issue still occurs. It happens frequently enough that it is very easy to reproduce (it fails more often than succeeds).

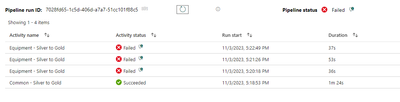

I have 4 notebooks that I have added to a pipeline. I have them set to run on the success of the previous one:

Here are some screenshots (I have the retry set to 3):

sessionId: aedb126f-3b59-4490-8808-cea0f218e31d

Hi @WomanToBlame ,

Thanks for using Fabric Community and reporting this.

Apologies for the issue you have been facing. We are reaching out to the internal team to get more information related to your query and will get back to you as soon as we have an update.

Hi @WomanToBlame ,

Thanks for using Fabric Community and reporting this.

You haven't done anything wrong. We've identified this as a bug in the front-end that was affecting the creation of notebooks from Lakehouse or Data Factory.

A simple workaround for this issue is to save all the notebooks once more and try again.

If that doesn't resolve your issue, can you please share the session ID?

Hope this is helpful. Please let me know in case of further queries.

Thanks, @v-gchenna-msft , unfortunately that did not work. I saved a copy of the notebook and included it in the pipeline, but I still get the same error.

runId: 5XXX0f6-XXXX-4cc2-XXXX-448cXXXX5833

sessionId: 372XXXX9-XXXX-463b-XXXX-60b4XXXXc5a0

message: Spark SQL queries are only possible in the context of a lakehouse. Please attach a lakehouse to proceed.

Hi @WomanToBlame ,

Apologies for the issue you have been facing. We are reaching out to the internal team to get more information related to your query and will get back to you as soon as we have an update.

Hi @WomanToBlame ,

Saving a copy will not work. Instead, open the notebook and edit it to trigger auto-save. You can also re-attach the lakehouse in the notebook UX.

Can you please try and let me know if it works?

Where do you find the option to attach a lakehouse to a notebook?

Haha, it worked! Thank you! My goodness, in my next life, I want to be a Buddhist, a philosophy teacher, or something less weird... Just kidding! But seriously, thanks a lot!

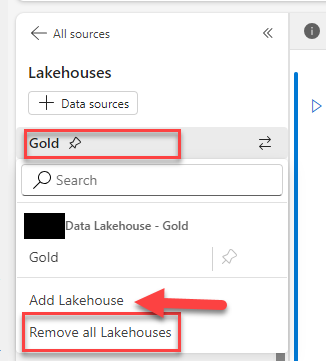

Thank you, @v-gchenna-msft, it works! Leaving the notebook in the pipeline, I detached and reattached the lakehouse. Just editing did not seem to work. Also, my original mistake was thinking the notebook needed to be removed from the pipeline, edited, and re-added.

This error still exists. Just editing did not seem to work.

The workaround is to detach and reattach the lakehouse.

In the notebook that is throwing the error, left-click your lakehouse name in the panel on the left. A context menu will appear with the option "Remove all Lakehouses". Select that option, then choose "Add Lakehouse" to re-add it. Once you select "Add Lakehouse", you will have the option to add an existing lakehouse.
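After re-adding the lakehouse, it may help to have the notebook fail fast with a clear message when no default lakehouse is attached, rather than failing partway through on the first Spark SQL statement. The following is only a minimal sketch, not an official fix: the helper names are hypothetical, and it assumes the session context is a dictionary with a `defaultLakehouseName` key (which is what `notebookutils.runtime.context` is understood to return in a Fabric notebook; verify the key name in your environment).

```python
from typing import Mapping, Optional


def default_lakehouse_name(context: Mapping[str, object]) -> Optional[str]:
    """Return the default lakehouse name from the context, or None if unset."""
    # Assumption: the runtime context exposes the name under this key.
    name = context.get("defaultLakehouseName")
    if isinstance(name, str) and name.strip():
        return name
    return None


def require_default_lakehouse(context: Mapping[str, object]) -> str:
    """Raise a clear error early when no default lakehouse is attached."""
    name = default_lakehouse_name(context)
    if name is None:
        raise RuntimeError(
            "No default lakehouse is attached to this notebook session; "
            "detach and re-attach the lakehouse before running Spark SQL."
        )
    return name
```

In a Fabric notebook, the first cell could call `require_default_lakehouse(notebookutils.runtime.context)`, assuming that object is available in the session. This only surfaces the problem earlier in the pipeline run; it does not replace the detach and reattach workaround described above.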

Hi @WomanToBlame ,

Glad to know that your query got resolved. Please continue using Fabric Community for any help with your queries.

I'm getting the same error, "Spark SQL queries are only possible in the context of a lakehouse. Please attach a lakehouse to proceed.", on a notebook that I've been using once a month without problems. I have tried the "Remove all Lakehouses" option and added the lakehouse back 5 or 6 times, and it is still not working. Comparing with the screenshots shared here, I've also noticed that when I re-add the lakehouse, I don't see it listed with the default pinned icon.

This bug seems to have resurfaced. I'm trying to run the OPTIMIZE command from a notebook against a lakehouse table. The lakehouse explorer is open at the side, and I can see the tables and files.

When I run OPTIMIZE, it returns the "Spark SQL queries are only possible in the context of a lakehouse. Please attach a lakehouse to proceed." error.

If I click on "Lakehouse" above the "Tables" folder in the explorer, it says no results. When I open the menu to add a lakehouse, the lakehouse that is already attached is greyed out.

I tried again today and it was working as expected with the datalake attached. Seems that it was a random bug yesterday.