

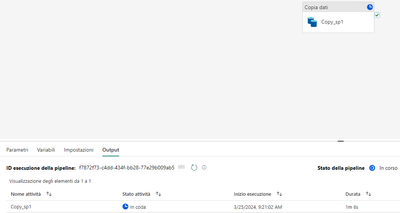

The copy from on-premises SQL Server has been released, but it doesn't work

On-premises SQL Server copy task remains in the Queued state indefinitely


I finally solved it myself:

For those who use a proxy: in addition to these files,

- C:\Program Files\On-premises data gateway\enterprisegatewayconfigurator.exe.config

- C:\Program Files\On-premises data gateway\Microsoft.PowerBI.EnterpriseGateway.exe.config

- C:\Program Files\Local Data Gateway\m\Microsoft.Mashup.Container.NetFX45.exe.config

which take the following proxy configuration:

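```xml
<!-- Goes inside the root <configuration> element; the proxy address is this poster's, use your own -->
<system.net>
  <defaultProxy useDefaultCredentials="true">
    <proxy
      autoDetect="false"
      proxyaddress="http://192.168.1.10:3128"
      bypassonlocal="true"
      usesystemdefault="false"
    />
  </defaultProxy>
</system.net>
```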

The two Fabric integration runtime files must also be updated with the same configuration:

- C:\Program Files\On-premises data gateway\FabricIntegrationRuntime\5.0\Shared\Fabrichost.exe.config

- C:\Program Files\On-premises data gateway\FabricIntegrationRuntime\5.0\Shared\Fabricworker.exe.config

Now it finally works.
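If these edits have to be repeated on several gateway machines, they can be scripted. The Python sketch below is an illustration under stated assumptions, not an official tool: it assumes the five paths exactly as listed in this post (adjust them to your installation), the example proxy address from this post (replace it with your own), and that a file which already contains a `<system.net>` element should be left alone so you can merge the settings by hand. It writes a `.bak` backup before changing anything. Writing under `C:\Program Files` requires an elevated (Administrator) prompt, and you will most likely need to restart the gateway afterwards, since .NET applications read their `.exe.config` at startup.

```python
import re
import shutil
from pathlib import Path

# Example values taken from the post above; replace the proxy address with yours.
PROXY_BLOCK = """\
  <system.net>
    <defaultProxy useDefaultCredentials="true">
      <proxy
        autoDetect="false"
        proxyaddress="http://192.168.1.10:3128"
        bypassonlocal="true"
        usesystemdefault="false"
      />
    </defaultProxy>
  </system.net>
"""

# Paths exactly as listed in the post; adjust them to your gateway installation.
CONFIG_FILES = [
    r"C:\Program Files\On-premises data gateway\enterprisegatewayconfigurator.exe.config",
    r"C:\Program Files\On-premises data gateway\Microsoft.PowerBI.EnterpriseGateway.exe.config",
    r"C:\Program Files\Local Data Gateway\m\Microsoft.Mashup.Container.NetFX45.exe.config",
    r"C:\Program Files\On-premises data gateway\FabricIntegrationRuntime\5.0\Shared\Fabrichost.exe.config",
    r"C:\Program Files\On-premises data gateway\FabricIntegrationRuntime\5.0\Shared\Fabricworker.exe.config",
]


def add_proxy_block(config_text: str, block: str = PROXY_BLOCK) -> str:
    """Insert `block` just before the closing </configuration> tag.

    Raises ValueError if a <system.net> element is already present (merge the
    proxy settings by hand in that case) or if </configuration> is missing.
    """
    if re.search(r"<system\.net[\s>/]", config_text):
        raise ValueError("<system.net> already present, merge manually")
    end = config_text.rfind("</configuration>")
    if end == -1:
        raise ValueError("closing </configuration> tag not found")
    return config_text[:end] + block + config_text[end:]


def patch_file(path: Path) -> None:
    """Back up `path` to <name>.bak, then write the patched configuration."""
    text = path.read_text(encoding="utf-8-sig")  # tolerate a UTF-8 BOM
    patched = add_proxy_block(text)
    shutil.copy2(path, path.with_name(path.name + ".bak"))
    path.write_text(patched, encoding="utf-8")


if __name__ == "__main__":
    for path in map(Path, CONFIG_FILES):
        if not path.exists():
            print(f"not found, skipped: {path}")
            continue
        try:
            patch_file(path)
            print(f"patched: {path}")
        except ValueError as err:
            print(f"left unchanged ({err}): {path}")
```

Plain text insertion is used here instead of an XML library because rewriting the whole document (for example with `xml.etree.ElementTree`) can reformat it and rename namespace prefixes on elements such as `assemblyBinding`, if present, which is riskier for files the gateway loads at startup.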


Hi,

The issue still persists as of mid-May. A copy activity from an on-premises SQL Server 2019 is stuck at "In progress".

The gateway is the latest version as of 13.05.2024 (3000.218.9).

Hoping this will be resolved soon, as we're running a POC to evaluate whether Fabric is a viable alternative to our current setup. Thanks


I experienced the same issue initially.

My pipeline was configured to use an Azure Data Lake Storage Gen2 account for staging, and the connection I used was configured to authenticate with an organisational account or a service principal (I can't remember which).

After reconfiguring it to authenticate with an account key instead, the task moved beyond the Queued state, and I finally reached the next big blocker that prevents the copy activity from working: when connecting to the staging storage account, the configured on-premises gateway is bypassed.


I downloaded the latest version of the gateway (3000.218.9), but the copy still stays queued indefinitely. I also tried routing the destination (ADLS Gen2) connection through the gateway and authenticating with a SAS token, with no success. With the Azure Synapse integration runtime I don't have any of these problems.


I have the same issue. Whenever I use any data source that goes through an on-premises data gateway, the pipeline gets stuck in 'Queued' with no helpful error messages. This happens whether I use an ODBC connection on the on-premises server or a SQL Server connection. The destination is Azure SQL. The process gets stuck even when selecting only one row (which shows correctly in the preview, and the auto-mapping works fine).

Using the same connection in Dataflow Gen2 works fine, but I was hoping to use pipelines for this task.

Any ideas appreciated!


Hi,

I have the same problem: copying data from an on-premises SQL Server to a lakehouse via data pipelines just sits indefinitely at status "In progress".


Hi @marcoG

Thanks for using Microsoft Fabric Community.

As I understand it, you are facing an issue with an on-premises SQL Server copy task getting stuck in the Queued state in Microsoft Fabric.

Could you please share a screenshot of the error message you are seeing, or some additional information about the activity you are using in the Microsoft Fabric portal for the queued task?

Thanks.


I don't get any errors... it just stays like this:


The connection tests are OK...


Hi @marcoG

I replicated the reported scenario and was able to run the copy activity without any difficulties. I have attached screenshots for your reference.

It appears to be an intermittent issue with the data pipeline run. I recommend trying the activity again after a short while.

You could also try deleting the activity, refreshing the browser, adding the activity again, and running the pipeline.

Retrying the data pipeline run may also help. For details, please refer to: Retry a data pipeline run.

If the issue still persists, please let us know.

I hope this information helps.

Thanks.


I am experiencing the exact same issue.

As suggested, I deleted my "Copy Data" activity and recreated it, but the same issue occurred. I also tried recreating the Copy Data activity with the "Copy Assistant", with the same result.

Any help would be greatly appreciated.


Hi @JFTxJ

To understand your specific data copy scenario, could you please share some more details about your copy data activity? This will help me understand the problem and guide you better. If possible, please provide the following:

Source and Sink Details: Can you elaborate on the types of data stores involved in the copy operation?

Data Transformation Specifications: Are there any transformations being applied to the data during the copy process?

Screenshots: If feasible, sharing screenshots of the source and sink configuration within your data copy activity would be highly beneficial for visualization purposes.

Thanks.


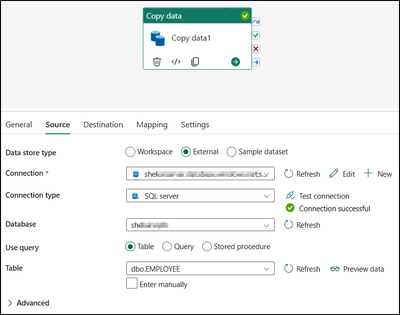

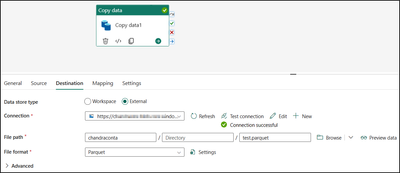

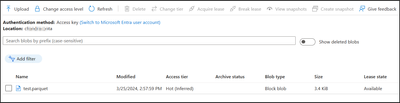

Absolutely! The Copy Data activity copies data from an on-premises SQL Server hosted on an AWS EC2 instance to a Lakehouse in the same workspace as the pipeline. The source is SQL Server 2019 Standard.

There are no transformations at all; the purpose is a straight copy of the data from on-premises to the Fabric Lakehouse.

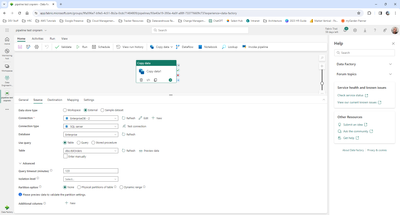

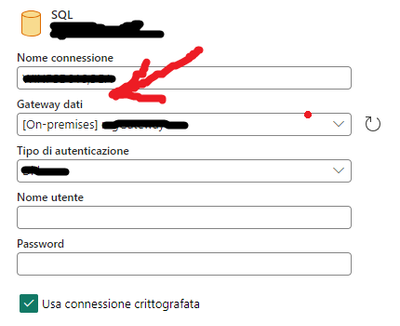

Here are the details of the source:

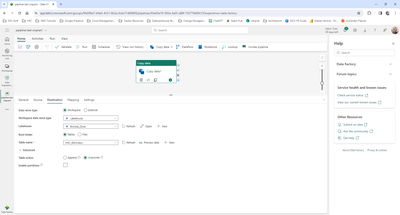

Here are the details of the sink:

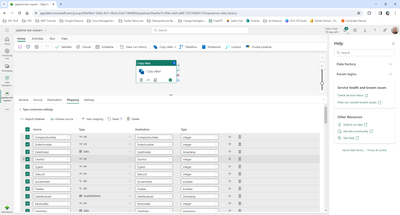

Here are the mapping configs:

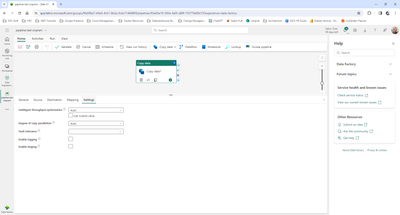

The settings are left at their defaults.

Let me know if you need anything else.


Have you used the gateway too?


Hi @marcoG

Following up to see whether you have found a resolution or are still facing the issue. If you have found one, please share it with the community, as it may help others.

If you have any questions related to this thread, please let us know and we will try our best to help you.

If you have a question about a different issue, please open a new thread.

If the issue still persists, please refer to: Set new firewall rules on server running the gateway

For more details, please refer to: Currently supported monthly updates to the on-premises data gateways

I hope this information helps.

Thanks.


It still does not work.


Hi @marcoG

Could you please share screenshots of the error you are getting, along with the copy activity details? This will help me understand the issue and help you better.

Thanks.


As I wrote previously, I don't get any errors... it remains in the Queued state indefinitely.


Hi @marcoG

Monitor Pipeline Runs: Use the Azure Data Factory monitoring tools to view activity logs. These logs might provide detailed information about the issue, pinpointing specific errors or bottlenecks causing the queueing.

For details, please refer to: Monitor data pipeline runs.

Thanks.


Thank you for your general answers.

If I have a pipeline with a single activity that copies a table of two rows, it should not take an infinite amount of time to complete, do you agree?

So what do I need the Monitoring hub for, if all it tells me is that the task is in progress?