FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!Special holiday offer! You and a friend can attend FabCon with a BOGO code. Supplies are limited. Register now.

- Data Factory forums

- Forums

- Get Help with Data Factory

- Pipelines

- Re: Ingesting on premise data with notebooks

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ingesting on premise data with notebooks

Hi, I have an on premise database with a running PowerBI gateway and this is how we connected to these sources previously. Now, the requierement is that we would recreate our ETL process in Microsoft Fabric using notebooks and Spark, but currently from what I read it seems like there is no way to do this due to notebooks note being compatible with the on premise gateway.

If this is the case, are there any other alternatives that I have, other than using Dataflows which are very slow and have performance issues. Ideally I would want to use a work around with Notebooks, that can retrieve this data.

Thanks in advance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to ingest from an API that has certificae based authentication using on-prem gateway? For rest api the only authentication method available are anonymous, basic and service principal.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Catching up to this thread. I can use an on-prem DG connection to a Folder in a pipeline, but I can't use a dynamic connection name. When I choose dynamic connection name, it forces me to use one of three types of connections: Lakehouse, Warehouse, and KQL DB. I'd like to see the other connection types added.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

you should be able to use and on-prem gateway to build a connection to connect to your local sql server. Why would you want a dynamic connection name?

The connector we use for this can talk to any DB and its tables, we have given it access to on the on prem sql server.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Asking me "why" is not helpful, but the answer is that I want to use different connections in different environments (dev, test, prod)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And...I'm not just connecting to sql server sources. I have a Folder connection

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Asking why is very helpful, because I am trying to understand what you want to achieve. We have an identical setup (3 environments) and a folder connection. You will have to use 1 connector for each.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's my point in my orig post. Dynamic connectors don't work for anything but the 3 fabric data stores.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We ingest data from an on prem MSSQL server by having created a view on the on-prem server that gets us the information we need.

That view is than called from a pipeline, using a gateway, which allows us to enter the data into a "lakehouse raw" table and next we do the ETL from there. We use the sql endpoint for the ETL, but I assume you could also use a notebook to do it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So as of today, there is no way to ingest on premise data using notebook,

The only way is to use data factory and copy activty to ingest on-premise data to a lake house. Am i right?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

I'm coming back to this topic to understand if the integration of On-prem gateways in Fabric Spark is on the roadmap for future implementation.

It would be really nice to be able to script ingestion of On-Prem sources (SQL server in my case) through notebooks. Right now I have a complex metadata driven pipeline with multiple steps which are really hard to maintain and could be all replaced by a notebook, if this feature gets implemented.

Thanks 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I agree

I want to do my data ingestion using notebooks (and not data factory)

Is it still nog possbile to reference an on premise data gateway from a notebook?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There are no insights to gain, the functionality doesn't exist, hopefully it's "yet" that it doesn't and will be added soon.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @egons11

Thanks for using Fabric Community.

Currently, Microsoft Fabric notebooks do not directly support connecting to on-premise databases through the Power BI Gateway. Fabric Spark doesn’t do the integration with PBI gateway.

As a workaround you can create a Shortcut in Fabric Lakehouse for your external data. This way you can query the data using Notebooks. For more information regarding Shortcuts please refer to this link:

https://learn.microsoft.com/en-us/fabric/onelake/create-onelake-shortcut

Hope this helps. Please let me know if you have any further queries.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How would shortcuts help me in this case? It can externally connect only to S3 and ADLS. The data is stored on local databases.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Multiple local PG instances

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @egons11

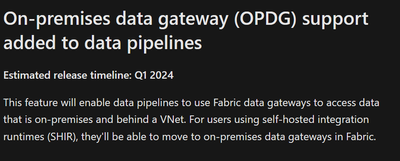

You should use a Data Pipeline to copy the data to lakehouse. Fabric data pipelines will be added to the On Prem data gateway in the next few months.

What's new and planned for Data Factory in Microsoft Fabric - Microsoft Fabric | Microsoft Learn

If you need something sooner, you should use Azure Data Factory Pipelines with the Self-Hosted Integration Runtime. For more information please refer to these links:

Copy on-premises data using the Azure Copy Data tool - Azure Data Factory | Microsoft Learn

How to ingest data into Fabric using the Azure Data Factory Copy activity - Microsoft Fabric | Micro...

Hope this helps. Please let me know if you have any further queries.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So we have to manually create pipeline activities for each table we want? There's really no way to do it in code?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Create views on your on prem server, joining the tables you need, if there is a relation between then of course.

Next use a copy data action in a pipeline to call that view and ingest it into a lakehouse. Thats how we do it for multiple tables at my work.

I'm not realy sure where the advantage would be to do it with a notebook, since you would (depending on your database structure) have to either call multiple tables in a notebook, use multiple notebooks, or create a join between tables in a notebook.

Cheers

Hans

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I know about the roadmap, but it's still couple month, we need it now.

In regards to the COPY activity, I am pretty sure you can't use it to build incremental ETL (to append), it re-copies all the data every time you run it, so this is not suitable for us.