
Fabric: I have created a DataFrame in a notebook using PySpark. Now I want to create a Delta PARQUET

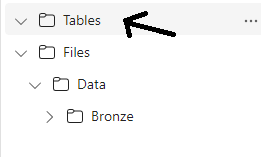

And I assume it is going into here

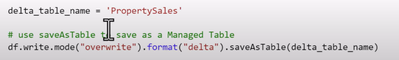

I use the Code:


Hi @DebbieE ,

In order to create a table, you need to use the code below.

Method 1:

df.write.mode("overwrite").format("delta").saveAsTable("abc5")

In your case, you just forgot to put quotes around the table name.
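To illustrate the point about quotes: without them, Python treats DimContestant as a variable name and raises a NameError before Spark is ever involved. The sketch below uses a hypothetical stand-in function, save_as_table, instead of a real DataFrame writer, so it runs without a Spark session. It is only meant to show the difference between a bare identifier and a string literal.

```python
# Hypothetical stand-in for df.write...saveAsTable(); it only echoes the name it receives.
def save_as_table(table_name):
    return f"would create table {table_name}"

try:
    # Bare identifier: Python looks for a variable called DimContestant.
    save_as_table(DimContestant)
except NameError as err:
    message = str(err)

# String literal: the name is passed through as text.
result = save_as_table("DimContestant")

print(message)
print(result)
```

The same applies to a variable such as delta_table_name: it has to be assigned a string first, for example delta_table_name = "DimContestant".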

Method 2:

Hope this is helpful. Please let me know in case of further queries.


Depending on your desired result, there are different methods. The method you are using will write a parquet file to the Files location. If you want to create a Delta table, you should use the saveAsTable function with "delta" as the format.

Keep in mind that table names can only contain alphanumeric characters and underscores.
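As a rough sketch of that naming rule, a small check like the one below could be run before writing. The helper name is_valid_table_name is made up for illustration, and the pattern only encodes the rule as stated in this post (ASCII letters, digits and underscores); the platform may apply further restrictions that are not covered here.

```python
import re

# Rule as stated above: only alphanumeric characters and underscores.
_TABLE_NAME_PATTERN = re.compile(r"[A-Za-z0-9_]+")

def is_valid_table_name(name: str) -> bool:
    """Return True if name consists only of ASCII letters, digits and underscores."""
    return bool(_TABLE_NAME_PATTERN.fullmatch(name))

for candidate in ["DimContestant", "abc5", "Dim Contestant", "dim-contestant"]:
    print(candidate, is_valid_table_name(candidate))
```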

From the documentation:

# Keep it if you want to save dataframe as CSV files to Files section of the default Lakehouse

df.write.mode("overwrite").format("csv").save("Files/" + csv_table_name)

# Keep it if you want to save dataframe as Parquet files to Files section of the default Lakehouse

df.write.mode("overwrite").format("parquet").save("Files/" + parquet_table_name)

# Keep it if you want to save dataframe as a delta lake, parquet table to Tables section of the default Lakehouse

df.write.mode("overwrite").format("delta").saveAsTable(delta_table_name)

# Keep it if you want to save the dataframe as a delta lake, appending the data to an existing table

df.write.mode("append").format("delta").saveAsTable(delta_table_name)
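Note that delta_table_name in the snippets above is a variable, so it has to hold a string before the write runs. One possible way to wrap the two Delta calls is sketched below. The helper save_as_delta_table and the ALLOWED_MODES set are my own names, not part of the documentation, and limiting the mode to "overwrite" and "append" is a choice made for this sketch, not a Spark restriction.

```python
ALLOWED_MODES = {"overwrite", "append"}  # the two modes shown in the snippets above

def save_as_delta_table(df, table_name, mode="overwrite"):
    """Write df as a Delta table to the Tables section of the default Lakehouse."""
    if not isinstance(table_name, str):
        raise TypeError("table_name must be a string, e.g. 'DimContestant'")
    if mode not in ALLOWED_MODES:
        raise ValueError(f"mode must be one of {sorted(ALLOWED_MODES)}")
    # Same chain as in the documentation snippet, with the name passed as a string.
    df.write.mode(mode).format("delta").saveAsTable(table_name)

# Usage in a notebook cell, assuming df is an existing Spark DataFrame:
# delta_table_name = "DimContestant"
# save_as_delta_table(df, delta_table_name)
# save_as_delta_table(df, delta_table_name, mode="append")
```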


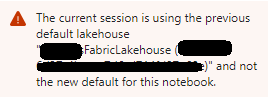

I am confused with this

df.write.mode("overwrite").format("delta").saveAsTable(delta_table_name)

NameError: name 'DimContestant' is not defined

The table doesn't exist yet because it's brand new, so this doesn't work for me.

Ahhhh I found out you have to do this



Hi @DebbieE ,

Glad to know that your query got resolved. Please continue using Fabric Community for your further queries.


Hi @DebbieE ,

We haven’t heard back from you on the last response and were just checking to see whether your query was answered. If not, please respond with more details and we will try to help.