Duplicated rows between notebook and SQL Endpoint

I'm having trouble counting the rows in a table in my Lakehouse.

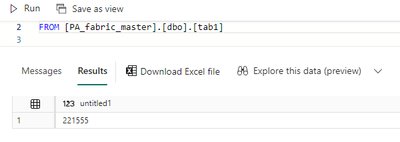

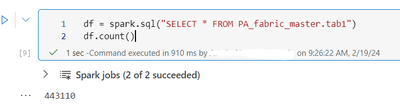

If I count the rows from the SQL endpoint, I get 221555 rows. If I read the table from a notebook and count the rows with df.count(), I get exactly twice that number: 443110.

How can this be possible?


Hi all, there's apparently a bug where the metadata that tracks which parquet files are the current ones can get out of sync between the Lakehouse SQL endpoint and notebooks. I worked a ticket with Microsoft, and they had me run the following code. After running it, wait 30 minutes or so before rerunning the notebook. In my case, this fixed the issue completely.
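A simplified model may help picture what "which parquet file is the latest" means here. Lakehouse tables are Delta tables: a folder of parquet files plus a transaction log of add and remove actions, and the current version of the table is the set of files that were added and not later removed. A full overwrite writes new files and logs the old ones as removed, but the old files stay on disk until they are vacuumed. The sketch below, assuming a made-up two-file log (the Action class and file names are hypothetical), shows that a reader with the wrong idea of which files are current, modeled here as one that ignores remove actions, counts exactly twice the rows after one overwrite with identical data. It is a conceptual illustration only, not how Spark or the SQL endpoint is implemented, and not a confirmed root cause for this thread.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str      # "add" or "remove", mirroring Delta log action types
    path: str      # hypothetical parquet file path inside the table folder
    rows: int = 0  # rows in the file (only meaningful for "add")


def live_files(log):
    """Replay the log in order: a file is live if added and not later removed."""
    live = {}
    for action in log:
        if action.kind == "add":
            live[action.path] = action.rows
        elif action.kind == "remove":
            live.pop(action.path, None)
        else:
            raise ValueError(f"unknown action kind: {action.kind}")
    return live


def count_via_log(log):
    """Row count of the current table version, as a log-aware reader sees it."""
    return sum(live_files(log).values())


def count_via_folder_listing(log):
    """Hypothetical faulty reader: counts every file ever added, ignoring removes."""
    files = {a.path: a.rows for a in log if a.kind == "add"}
    return sum(files.values())


# Version 0: initial load. Version 1: full overwrite with the same data.
log = [
    Action("add", "part-000.parquet", 221555),
    Action("remove", "part-000.parquet"),
    Action("add", "part-001.parquet", 221555),
]

print(count_via_log(log))             # 221555
print(count_via_folder_listing(log))  # 443110
```

The point of the model is that both readers look at the same files on disk; they disagree only about which files belong to the current version, which is exactly the kind of metadata mismatch that produces a clean 2x count.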


Does this mean that I need to run these 3 lines of code for every Lakehouse table I read from a notebook?


Hi @Scott_Powell

Thanks for sharing the solution here. Please continue using Fabric Community for any help regarding your queries.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I created an idea: https://ideas.fabric.microsoft.com/ideas/idea/?ideaid=cea60fc6-93f2-ee11-a73e-6045bd7cb2b6

Please vote if you want this issue to be fixed.


Sure. Here is the row count from the SQL endpoint:

And here is the count from the notebook:


Hi @amaaiia

I tried the same check with two of my Lakehouse tables, and the counts matched.

The discrepancy in row counts between SQL Endpoint and notebook could be due to several reasons:

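Data Duplication: There might be duplicate rows in your data. When you read the data into a DataFrame and use df.count(), it counts all rows, including duplicates. If this is the case, you can remove duplicates using the df.dropDuplicates() function. Note, however, that COUNT(*) on the SQL endpoint also counts duplicate rows, so duplicates in the stored data would not by themselves make the two counts differ.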

Data Inconsistency: There might be inconsistencies between the data in your SQL Endpoint and the data in your notebook. This could be due to issues with data ingestion, data updates, or data synchronization. You can verify this by comparing a subset of your data in both SQL Endpoint and notebook.

Caching Issues: Sometimes Spark caches a DataFrame, and if the underlying data changes, the cached DataFrame might not reflect those changes. You can try clearing the cache with the spark.catalog.clearCache() function in your notebook.
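To tell genuine duplicate rows apart from a table that was loaded or read twice, it helps to look at how many times each row repeats. In PySpark the quick check is comparing df.count() with df.distinct().count(); the sketch below reproduces the same idea in plain Python so it runs without a Spark session. The helper names duplicate_profile and drop_duplicates are hypothetical, and the sample rows are made up. If every row appears exactly twice, as the 221555 versus 443110 counts in the original question suggest, that points more toward a doubled load or read than toward scattered duplicates in the source data.

```python
from collections import Counter


def duplicate_profile(rows):
    """Summarize exact-duplicate rows in a list of hashable rows (e.g. tuples).

    Returns (total, distinct, histogram), where histogram maps
    "times a row appears" -> "number of distinct rows appearing that often".
    """
    counts = Counter(rows)
    histogram = Counter(counts.values())
    return len(rows), len(counts), dict(histogram)


def drop_duplicates(rows):
    """Keep the first occurrence of each row, preserving order (like dropDuplicates)."""
    return list(dict.fromkeys(rows))


# Hypothetical sample in which every row was loaded twice.
rows = [(1, "a"), (2, "b"), (3, "c")] * 2
total, distinct, histogram = duplicate_profile(rows)
print(total, distinct, histogram)  # 6 3 {2: 3}
print(drop_duplicates(rows))       # [(1, 'a'), (2, 'b'), (3, 'c')]
```

Dropping duplicates hides the symptom but does not explain it; a uniform histogram such as {2: n} is a hint to look at the load process or the table metadata instead.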

Hope this helps. Please let me know if you have any further questions.


Hi @amaaiia

We haven’t heard back from you since the last response and wanted to check whether your query was resolved. If not, please reply with more details and we will try to help.

Thanks


Hi,

I didn't find a solution. Since I was trying a demo in a dev environment, I just deleted the data and loaded it again. I'm not getting duplicates now. I hope this won't happen again.


@amaaiia Thanks for sharing!

Did you use Dataflow Gen2 to ingest data into your Lakehouse?

Here is a similar issue:

https://community.fabric.microsoft.com/t5/General-Discussion/Duplicated-Rows-In-Tables-Built-By-Note...


I'm experiencing a similar issue:

My Dataflow Gen2 stores data in a Lakehouse. Once in a while, the 'replace' table setting in the Dataflow Gen2 doesn't seem to work, and the same data ends up in the table twice. It only seems to affect smaller tables. If I delete the table, it works for a few days, but then the duplicates suddenly come back.
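Because the duplicates reappear intermittently, one option is to validate every 'replace' load right after it runs: the table's row count should equal the row count of the data that was written. The sketch below is a hypothetical helper (check_replace_load is not a Fabric or Dataflow Gen2 API); in a notebook, the two inputs could come from df.count() on the source data and on the table read back after the load. It uses the counts from the original question as sample inputs. A count that is an exact multiple of the source count is what a replace that behaved like an append would look like.

```python
def check_replace_load(source_rows: int, table_rows: int) -> str:
    """Classify a load that was supposed to fully replace the table's contents."""
    if source_rows <= 0:
        raise ValueError("source_rows must be positive")
    if table_rows == source_rows:
        return "ok"
    # An exact multiple suggests earlier data was kept (append-like behavior).
    if table_rows > source_rows and table_rows % source_rows == 0:
        return f"suspect: table holds {table_rows // source_rows}x the source rows"
    return "mismatch: row counts differ for another reason"


print(check_replace_load(221555, 221555))  # ok
print(check_replace_load(221555, 443110))  # suspect: table holds 2x the source rows
print(check_replace_load(221555, 300000))  # mismatch: row counts differ for another reason
```

Running a check like this at the end of each scheduled load would surface the problem on the day it happens instead of days later.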


Hi @amaaiia

Thanks for using Fabric Community.

Can you please provide screenshots of the SQL code and the notebook code? This would help me understand the question better.

Thanks
