Yet Another Consecutive Values Question

I've seen this question asked quite a bit, but I can't seem to find a solution that works for me or doesn't thoroughly confuse me.

I'd like to create a calculated column that counts consecutive "bad" indicators. I don't care so much about single instances, good or bad. I'd like an output similar to the Result column below.

If a measure would work better, that's fine with me. But it's a calculation I'm going to use quite a bit and, ultimately, I'd like to be able to pull some numbers that say "we had X 'consecutive bads' this month on Y servers, and the average number of consecutives (in terms of duration, 2.3 consecutive bads or whatever) was Z".

| Server | Date | Indicator | Result |
|---|---|---|---|
| A | 1/1/2021 | Good | Don't care |
| A | 1/2/2021 | Bad | Don't care |
| A | 1/3/2021 | Good | Don't care |
| A | 1/4/2021 | Bad | 2 Bads |
| A | 1/5/2021 | Bad | 2 Bads |
| B | 1/1/2021 | Good | Don't care |
| B | 1/2/2021 | Good | Don't care |
| B | 1/3/2021 | Good | Don't care |
| B | 1/4/2021 | Bad | Don't care |
| B | 1/5/2021 | Good | Don't care |
| C | 1/1/2021 | Bad | Don't care |
| C | 1/2/2021 | Good | Don't care |
| C | 1/3/2021 | Bad | 3 Bads |
| C | 1/4/2021 | Bad | 3 Bads |
| C | 1/5/2021 | Bad | 3 Bads |
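To pin down the logic behind the Result column, here is a minimal sketch in Python rather than DAX. It is not the solution posted in this thread, and the function name `label_consecutive_bads` is made up for illustration. It assumes the rows are already sorted by server and then by date, and that every server has one row per day, so a missing date is not treated as a break in a run.

```python
from itertools import groupby

# Sample rows from the table above: (server, date, indicator).
rows = [
    ("A", "1/1/2021", "Good"),
    ("A", "1/2/2021", "Bad"),
    ("A", "1/3/2021", "Good"),
    ("A", "1/4/2021", "Bad"),
    ("A", "1/5/2021", "Bad"),
    ("B", "1/1/2021", "Good"),
    ("B", "1/2/2021", "Good"),
    ("B", "1/3/2021", "Good"),
    ("B", "1/4/2021", "Bad"),
    ("B", "1/5/2021", "Good"),
    ("C", "1/1/2021", "Bad"),
    ("C", "1/2/2021", "Good"),
    ("C", "1/3/2021", "Bad"),
    ("C", "1/4/2021", "Bad"),
    ("C", "1/5/2021", "Bad"),
]


def label_consecutive_bads(rows):
    """Return one label per row (hypothetical helper, not from the thread).

    Assumes rows are sorted by server, then by date.
    """
    labels = []
    # groupby splits the sorted rows into runs that share the same
    # (server, indicator) pair, so each run is one streak on one server.
    for (_server, indicator), run in groupby(rows, key=lambda r: (r[0], r[2])):
        length = len(list(run))
        if indicator == "Bad" and length >= 2:
            labels.extend([f"{length} Bads"] * length)
        else:
            # Single "Bad" rows and all "Good" rows are ignored.
            labels.extend(["Don't care"] * length)
    return labels


labels = label_consecutive_bads(rows)
for row, label in zip(rows, labels):
    print(*row, label, sep=" | ")
```

In a DAX calculated column the same idea would probably be expressed by finding, for each "Bad" row, the nearest non-"Bad" dates before and after it on the same server and counting the rows in between.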


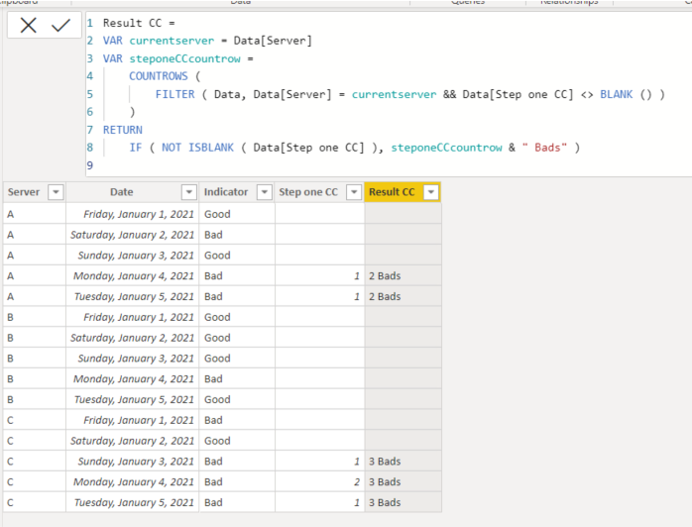

Hi, @RShackelford

Thank you for your feedback.

Please check the below.

If this post helps, then please consider accepting it as the solution to help other members find it faster, and give a big thumbs up.

Click here to visit my LinkedIn page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @RShackelford

Please check the picture below and the link to the sample pbix file. It shows how to create a new calculated column.

If this post helps, then please consider accepting it as the solution to help other members find it faster, and give a big thumbs up.


Thank You @Jihwan_Kim!

That worked for the dataset I provided in the example. However, I need to separate out the different consecutive instances within the same server (See server C in the table below). My apologies, I should have specified this in the original example.

| Server | Date | Indicator | Result |
|---|---|---|---|
| A | 1/1/2021 | Good | Don't care |
| A | 1/2/2021 | Bad | Don't care |
| A | 1/3/2021 | Good | Don't care |
| A | 1/4/2021 | Bad | 2 Bads |
| A | 1/5/2021 | Bad | 2 Bads |
| B | 1/1/2021 | Good | Don't care |
| B | 1/2/2021 | Good | Don't care |
| B | 1/3/2021 | Good | Don't care |
| B | 1/4/2021 | Bad | Don't care |
| B | 1/5/2021 | Good | Don't care |
| C | 1/1/2021 | Bad | Don't care |
| C | 1/2/2021 | Good | Don't care |
| C | 1/3/2021 | Bad | 3 Bads |
| C | 1/4/2021 | Bad | 3 Bads |
| C | 1/5/2021 | Bad | 3 Bads |
| C | 1/6/2021 | Good | Don't care |
| C | 1/7/2021 | Bad | 2 Bads |
| C | 1/8/2021 | Bad | 2 Bads |
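Treating each streak on a server as its own instance also makes the "X consecutive bads on Y servers, average Z" summary straightforward. The following is a rough Python sketch of that summary, not DAX and not the solution posted in this thread. The helper name `bad_runs` and its `min_length` parameter are hypothetical, and the rows are assumed to be sorted by server and date with one row per day. It does not filter by month, since all the sample rows fall in January 2021.

```python
from itertools import groupby

# Sample rows from the table above: (server, date, indicator).
rows = [
    ("A", "1/1/2021", "Good"),
    ("A", "1/2/2021", "Bad"),
    ("A", "1/3/2021", "Good"),
    ("A", "1/4/2021", "Bad"),
    ("A", "1/5/2021", "Bad"),
    ("B", "1/1/2021", "Good"),
    ("B", "1/2/2021", "Good"),
    ("B", "1/3/2021", "Good"),
    ("B", "1/4/2021", "Bad"),
    ("B", "1/5/2021", "Good"),
    ("C", "1/1/2021", "Bad"),
    ("C", "1/2/2021", "Good"),
    ("C", "1/3/2021", "Bad"),
    ("C", "1/4/2021", "Bad"),
    ("C", "1/5/2021", "Bad"),
    ("C", "1/6/2021", "Good"),
    ("C", "1/7/2021", "Bad"),
    ("C", "1/8/2021", "Bad"),
]


def bad_runs(rows, min_length=2):
    """Return (server, run_length) for every streak of "Bad" rows.

    Hypothetical helper; assumes rows are sorted by server, then by date.
    Streaks shorter than min_length are skipped.
    """
    runs = []
    for (server, indicator), run in groupby(rows, key=lambda r: (r[0], r[2])):
        length = sum(1 for _ in run)
        if indicator == "Bad" and length >= min_length:
            runs.append((server, length))
    return runs


runs = bad_runs(rows)
run_count = len(runs)                                # X: number of streaks
server_count = len({server for server, _ in runs})   # Y: servers affected
average_length = (
    sum(length for _, length in runs) / run_count if runs else 0.0
)                                                    # Z: mean streak length

print(f"{run_count} streaks on {server_count} servers, "
      f"average length {average_length:.2f}")
```

Server C contributes two separate streaks here (lengths 3 and 2) rather than one combined count, which is the distinction the table above is asking for.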


Hi, @RShackelford

Thank you for your feedback.

Please check the solution below.

If this post helps, then please consider accepting it as the solution to help other members find it faster, and give a big thumbs up.


Thank You! That's exactly what I needed.

Your solution is much cleaner than many I've seen.
